AI Research

Artificial intelligence-integrated video analysis of vessel area changes and instrument motion for microsurgical skill assessment

We employed a combined AI-based video analysis approach to assess microvascular anastomosis performance by integrating vessel area (VA) changes and instrument motion. By comparing technical category scores with AI-generated parameters, we demonstrated that the parameters from both AI models encompassed a wide range of technical skills required for microvascular anastomosis. Furthermore, receiver operating characteristic (ROC) curve analysis indicated that integrating parameters from both AI models improved the ability to distinguish surgical performance compared to using a single AI model. A distinctive feature of this study was the integration of multiple AI models that incorporated both tools and tissue elements.

AI-based technical analytic approach

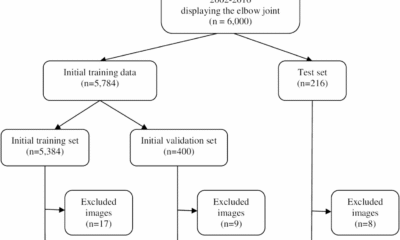

Traditional criteria-based scoring by multiple blinded expert surgeons was a highly reliable method for assessing surgeon performance with minimal interrater bias (Fig. 2 and Supplementary Table 1). However, the significant demand for human expertise and time makes real-time feedback impractical during surgery and training10,11,18. A recent study demonstrated that self-directed learning using digital instructional materials provides non-inferior outcomes in the initial stages of microsurgical skill acquisition compared to traditional instructor-led training28. However, direct feedback from an instructor continues to play a critical role when progressing toward more advanced skill levels and actual clinical practice.

AI technology can rapidly analyze vast amounts of clinical data generated in modern operating theaters, offering real-time feedback capabilities. The proposed method’s reliance on surgical video analysis makes it highly applicable in clinical settings18. Moreover, the way AI is used in this study addresses concerns about limited transparency, explainability, and interpretability, which are fundamental risks associated with AI adoption. One anticipated application is AI-assisted devices that can promptly provide feedback on technical challenges, allowing trainees to refine their surgical skills more effectively29,30. Additionally, an objective assessment of microsurgical skills could facilitate surgeon certification and credentialing processes within the medical community.

Theoretically, this approach could help implement a real-time warning system, alerting surgeons or other staff when instrument motion or tissue deformation exceeds a predefined safety threshold, thereby enhancing patient safety17,31. However, a large dataset of clinical cases involving adverse events such as vascular injury, bypass occlusion, and ischemic stroke would be required. For real-time clinical applications, further data collection and computational optimization are necessary to reduce processing latency and enhance practical usability. Given that our AI model can be applied to clinical surgical videos, future research could explore its utility in this context.
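As a minimal sketch of such a warning rule, the Python snippet below reports the frame at which a per-frame parameter (for example, a tissue deformation or jerk value) has stayed above a safety limit for several consecutive frames. The function name `threshold_alerts`, the `min_frames` debounce, and the numeric values are illustrative assumptions and are not part of the study's system.

```python
def threshold_alerts(values, limit, min_frames=3):
    """Yield the frame index at which `values` has exceeded `limit`
    for `min_frames` consecutive frames (hypothetical alert rule)."""
    run = 0  # length of the current run of frames above the limit
    for index, value in enumerate(values):
        run = run + 1 if value > limit else 0
        # Fire once per run, at the moment the run reaches min_frames.
        if run == min_frames:
            yield index


# Illustrative per-frame values only; not data from the study.
frames = [1, 5, 5, 5, 5, 1, 5, 5, 5]
print(list(threshold_alerts(frames, limit=4)))  # [3, 8]
```

Requiring several consecutive frames is one simple way to avoid alerting on single-frame detection noise; a clinically meaningful limit would still have to be derived from the adverse-event data discussed above.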

Related works: AI-integrated instrument tracking

To contextualize our results, we compared our AI-integrated approach with recent methods implementing instrument tracking in microsurgical practice. Franco-González et al. compared stereoscopic marker-based tracking with a YOLOv8-based deep learning method, reporting high accuracy and real-time capability32. Similarly, Magro et al. proposed a robust dual-instrument Kalman-based tracker, effectively mitigating tracking errors due to occlusion or motion blur33. Koskinen et al. utilized YOLOv5 for real-time tracking of microsurgical instruments, demonstrating its effectiveness in monitoring instrument kinematics and eye-hand coordination34.

Our integrated AI model employs semantic segmentation (ResNet-50) for vessel deformation analysis and a trajectory-tracking algorithm (YOLOv2) for assessment of instrument motion. The major advantage of our approach is its comprehensive and simultaneous evaluation of tissue deformation and instrument handling smoothness, enabling robust and objective skill assessment even under challenging conditions, such as variable illumination and partial occlusion. YOLO was selected due to its computational speed and precision in real-time object detection, making it particularly suitable for live microsurgical video analysis. ResNet was chosen for its effectiveness in detailed image segmentation, facilitating accurate quantification of tissue deformation. However, unlike three-dimensional (3D) tracking methods32, our current method relies solely on 2D imaging, potentially limiting depth perception accuracy.

These comparisons highlight both the strengths and limitations of our approach, emphasizing the necessity of future studies incorporating 3D tracking technologies and expanded datasets to further validate and refine AI-driven microsurgical skill assessment methodologies.

Future challenges

Microvascular anastomosis tasks typically consist of distinct phases, including vessel preparation, needle insertion, suture placement, thread pulling, and knot tying. As demonstrated by our video parameters for each surgical phase (phases A–D), a separate analysis of each surgical phase is essential to enhance skill evaluation and training efficiency. However, our current AI model does not have the capability to automatically distinguish these surgical phases.

Previous studies utilizing convolutional neural networks (CNN) and recurrent neural networks (RNN) have demonstrated high accuracy in recognizing surgical phases and steps, particularly through the analysis of intraoperative video data35,36. Khan et al. successfully applied a combined CNN-RNN model to achieve accurate automated recognition of surgical workflows during endoscopic pituitary surgery, despite significant variability in surgical procedures and video appearances35. Similarly, automated operative phase and step recognition in vestibular schwannoma surgery further highlights the ability of these models to handle complex and lengthy surgical tasks36. Such methods could be integrated into our current AI framework to segment and individually evaluate each distinct phase of microvascular anastomosis, enabling detailed performance analytics and precise feedback.

Furthermore, establishing global standards for video recording is critical for broadly implementing and enhancing computer vision techniques in surgical settings. Developing guidelines for video recording that standardize resolution, frame rate, camera angle, illumination, and surgical field coverage can significantly reduce algorithmic misclassification issues caused by shadows or instrument occlusion18,37. Such standardization ensures consistent data quality, crucial for training accurate and widely applicable AI models across diverse clinical settings37. These guidelines would facilitate large-scale data sharing and collaboration, substantially improving the reliability and effectiveness of AI-based surgical assessment tools globally.

Technical consideration

The semantic segmentation AI models were designed to assess respect for tissue during the needle manipulation process24. As expected, the Max-ΔVA correlated with respect for tissue in Phase B (from needle insertion to extraction). Proper needle extraction requires following the needle's natural curve to avoid tearing the vessel wall6,7, and these technical nuances were well captured by this parameter. Additionally, the No. of TDE correlated with respect for tissue in Phase C, indicating that even while pulling the threads, surgeons must exercise caution to prevent thread-induced vessel wall injury6,7. These parameters also correlated with instrument handling, efficiency, suturing technique and overall performance, an expected finding because proper instrument handling and suturing technique are fundamental to respecting tissue. Thus, the technical categories are interrelated and mutually influential.
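To illustrate how a parameter such as Max-ΔVA might be derived from segmentation output, the sketch below counts vessel pixels in each frame's binary mask and reports the largest percentage change from the first frame. This section does not give the exact definition, so the baseline choice (first frame), the percentage scale, and the function names `vessel_area` and `max_delta_va` are assumptions made for illustration.

```python
def vessel_area(mask):
    """Number of pixels labeled as vessel (truthy) in one binary mask."""
    return sum(1 for row in mask for pixel in row if pixel)


def max_delta_va(masks):
    """Largest absolute change in vessel area, as a percentage of the
    first frame's area (assumed baseline, not the study's definition)."""
    areas = [vessel_area(mask) for mask in masks]
    baseline = areas[0]
    if baseline == 0:
        raise ValueError("baseline frame contains no vessel pixels")
    return max(abs(area - baseline) / baseline * 100.0 for area in areas)


# Toy 2x3 masks with vessel areas of 4, 3, and 6 pixels.
toy_masks = [
    [[1, 1, 0], [1, 1, 0]],
    [[1, 1, 0], [1, 0, 0]],
    [[1, 1, 1], [1, 1, 1]],
]
print(max_delta_va(toy_masks))
```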

Trajectory-tracking AI models were designed to assess motion economy and the smoothness of surgical instrument movements25. Motion economy can be represented by the PD during a procedure. The smoothness and coordination of movement are frequently assessed using jerk-based metrics, where jerk is defined as the time derivative of acceleration. Because these jerk indices are influenced by both movement duration and amplitude, we utilized the NJI, first proposed by Flash and Hogan38. The NJI is calculated by multiplying the jerk index by [(duration interval)^5 / (path length)^2], with lower values indicating smoother movements. The dimensionless NJI has been used as a quantitative metric to evaluate movement irregularities in various contexts, such as jaw movements during chewing39,40, laparoscopic skills41, and microsurgical skills16,25. In this study, the Rt-PD and Lt-NJI correlated with a broad range of technical categories. Although the right and left hands play distinct roles in microvascular anastomosis, coordinated bimanual manipulation is essential for optimal surgical performance6,7. With regard to Rt-NJI, these trends were particularly evident in Phases C and D, highlighting the importance of motion smoothness in thread pulling and knot tying in determining overall surgical proficiency.
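The normalization described above can be sketched in a few lines of Python. The example assumes that the jerk index is the time integral of squared jerk, that the instrument tip is sampled as 2D points at a fixed interval `dt`, and that jerk is approximated by a third finite difference; these choices and the function names are illustrative and may differ from the implementation used in the study.

```python
import math


def path_distance(points):
    """Total 2D path length (PD) of a tracked instrument tip."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


def normalized_jerk_index(points, dt):
    """Integrated squared jerk multiplied by duration^5 / path_length^2."""
    if len(points) < 4:
        raise ValueError("need at least 4 samples for a third difference")
    jerk_sq_sum = 0.0
    for p0, p1, p2, p3 in zip(points, points[1:], points[2:], points[3:]):
        # Third finite difference approximates jerk along each axis.
        jx = (p3[0] - 3 * p2[0] + 3 * p1[0] - p0[0]) / dt**3
        jy = (p3[1] - 3 * p2[1] + 3 * p1[1] - p0[1]) / dt**3
        jerk_sq_sum += jx * jx + jy * jy
    jerk_index = jerk_sq_sum * dt  # rectangle-rule time integral
    duration = (len(points) - 1) * dt
    length = path_distance(points)
    if length == 0:
        raise ValueError("path length is zero")
    return jerk_index * duration**5 / length**2


straight = [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0)]
zigzag = [(0, 0), (1, 1), (2, 0), (3, 1), (4, 0)]
print(normalized_jerk_index(straight, dt=1.0))  # constant velocity, zero jerk
print(normalized_jerk_index(zigzag, dt=1.0))    # larger value, less smooth
```

Because the squared-jerk integral scales with length squared and with duration to the power of minus five, the product is unchanged when the same trajectory is rescaled in space or time, which is why the index can be compared across movements of different duration and amplitude.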

Overall, integrating these parameters enabled a comprehensive assessment of complex microsurgical skills, as each parameter captured different technical aspects. Despite its effectiveness, the model still exhibited some degree of misclassification when differentiating between good and poor performance. Notably, procedural time—a key determinant of surgical performance24,25—was intentionally excluded from the analysis. Although further exploration of additional parameters remains essential, integrating procedural time could significantly improve the classification accuracy.
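For readers less familiar with ROC analysis, the sketch below computes the area under the ROC curve (AUC) directly from its rank interpretation: the probability that a randomly chosen case from one group scores higher than a randomly chosen case from the other, with ties counted as one half. The function name `roc_auc` and the toy scores are illustrative assumptions; they are not the study's data, and how the study combined parameters into a single score is not specified here.

```python
def roc_auc(scores_pos, scores_neg):
    """AUC as the fraction of (positive, negative) pairs in which the
    positive case has the higher score; ties count as 0.5."""
    if not scores_pos or not scores_neg:
        raise ValueError("both groups need at least one score")
    wins = 0.0
    for pos in scores_pos:
        for neg in scores_neg:
            if pos > neg:
                wins += 1.0
            elif pos == neg:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


# Toy scores only: a perfectly separating score and a weaker one.
print(roc_auc([3, 4], [1, 2]))  # 1.0
print(roc_auc([1, 3], [2, 4]))  # 0.25
```

An AUC of 0.5 corresponds to chance-level discrimination, so comparing the AUC of a single-model score with that of a combined score is one way to express the improvement described above.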

This study employed the Stanford Microsurgery and Resident Training scale10,11 as a criteria-based objective assessment tool, as it covers a wide range of microsurgical technical aspects. Future research incorporating leakage tests or the Anastomosis Lapse Index13, which identifies ten distinct types of anastomotic errors, could provide deeper insights into the relationship between the quality of the final product and various technical factors.

Limitations

As mentioned above, a fundamental technical limitation of this analytical approach is the lack of 3D kinematic data, particularly depth information. Another constraint was that when the surgical tool was outside the microscope’s visual field, kinematic data of the surgical instrument could not be captured25. Additionally, the semantic segmentation model occasionally misclassified images containing shadows from surgical instruments or hands24. To mitigate this issue, future studies should expand the training dataset to include shadowed images, thereby improving model robustness. Given that the AI model in this study utilized the ResNet-50 and YOLOv2 networks, further investigation is warranted to optimize network architecture selection. Exploring alternative deep learning models or fine-tuning existing architectures could further improve the accuracy and generalizability of surgical video analysis18.

Our study had a relatively small sample size with respect to the number of participating surgeons, although it included surgeons with a diverse range of skills. Moreover, we did not evaluate the data from repeated training sessions to estimate the learning curve or determine whether feedback could enhance training efficacy. Future studies should evaluate the impact of AI-assisted feedback on the learning curve of surgical trainees and assess whether real-time performance tracking leads to more efficient skill acquisition.

Commanders vs. Packers props, SportsLine Machine Learning Model AI picks: Jordan Love Over 223.5 passing yards

The NFL Week 2 schedule gets underway with a Thursday Night Football matchup between NFC playoff teams from a year ago. The Washington Commanders battle the Green Bay Packers beginning at 8:15 p.m. ET from Lambeau Field in Green Bay. Second-year quarterback Jayden Daniels led the Commanders to a 21-6 opening-day win over the New York Giants, completing 19 of 30 passes for 233 yards and one touchdown. Jordan Love, meanwhile, helped propel the Packers to a dominating 27-13 win over the Detroit Lions in Week 1. He completed 16 of 22 passes for 188 yards and two touchdowns.

NFL prop bettors will likely target the two young quarterbacks with NFL prop picks, in addition to proven playmakers like Terry McLaurin, Deebo Samuel and Josh Jacobs. Green Bay’s Jayden Reed has been dealing with a foot injury, but still managed to haul in a touchdown pass in the opener. The Packers enter as a 3.5-point favorite with Green Bay at -187 on the money line. The over/under is 48.5 points. Before betting any Commanders vs. Packers props for Thursday Night Football, you need to see the Commanders vs. Packers prop predictions powered by SportsLine’s Machine Learning Model AI.

Built using cutting-edge artificial intelligence and machine learning techniques by SportsLine’s Data Science team, AI Predictions and AI Ratings are generated for each player prop.

For Commanders vs. Packers NFL betting on Thursday Night Football, the Machine Learning Model has evaluated the NFL player prop odds and provided Commanders vs. Packers prop picks. You can only see the Machine Learning Model player prop predictions for Washington vs. Green Bay here.

Top NFL player prop bets for Commanders vs. Packers

After analyzing the Commanders vs. Packers props and examining the dozens of NFL player prop markets, SportsLine’s Machine Learning Model says Packers quarterback Love goes Over 223.5 passing yards (-112 at FanDuel). Love passed for 224 or more yards in eight games a year ago, despite an injury-filled season. In 15 regular-season games in 2024, he completed 63.1% of his passes for 3,389 yards and 25 touchdowns with 11 interceptions.

In a 30-13 win over the Seattle Seahawks on Dec. 15, he completed 20 of 27 passes for 229 yards and two touchdowns. Love completed 21 of 28 passes for 274 yards and two scores in a 30-17 victory over the Miami Dolphins on Nov. 28. The model projects Love to pass for 259.5 yards, giving this prop bet a 4.5 rating out of 5. New users can also target the FanDuel promo code, which offers $300 in bonus bets if their first $5 bet wins.

How to make NFL player prop bets for Washington vs. Green Bay

In addition, the SportsLine Machine Learning Model says another star sails past his total and has nine additional NFL props that are rated four stars or better. You need to see the Machine Learning Model analysis before making any Commanders vs. Packers prop bets for Thursday Night Football.

Which Commanders vs. Packers prop bets should you target for Thursday Night Football? Visit SportsLine now to see the top Commanders vs. Packers props, all from the SportsLine Machine Learning Model.

Oklahoma considers a pitch from a private company to monitor parolees with artificial intelligence

Oklahoma lawmakers are considering investing in a new platform that aids in parole and probation check-ins through monitoring with artificial intelligence and fingerprint and facial scans.

The state could be the first in the nation to use the Montana-based company Global Accountability’s technology for parole and probation monitoring, said CEO Jim Kinsey.

Global Accountability is also pitching its Absolute ID platform to states to prevent fraud with food stamp benefits and track case workers and caregivers in the foster care system.

A pilot program for 300 parolees and 25 to 40 officers would cost Oklahoma around $2 million for one year, though the exact amount would depend on the number of programs the state wants to use the platform for, Kinsey said.

The Oklahoma Department of Corrections already uses an offender monitoring platform with the capability for check-ins using facial recognition, a spokesperson for the agency said in an email. Supervising officers can allow certain low-level offenders with smartphones to check in monthly through a mobile app instead of an office visit.

The state agency is “always interested in having conversations with companies that might be able to provide services that can create efficiencies in our practices,” the spokesperson said in a statement.

States like Illinois, Virginia and Idaho have adopted similar technology, though Global Accountability executives say their platform is unique because of its combination of biometrics, location identification and a feature creating virtual boundaries that send an alert to an officer when crossed.

The Absolute ID platform has the capacity to collect a range of data, including location and movement, but states would be able to set rules on what data actually gets captured, Kinsey said.

During an interim study at the Oklahoma House of Representatives in August, company representatives said their technology could monitor people on parole and probation through smartphones and smartwatches. Users would have to scan their face or fingerprint to access the platform for scheduled check-ins. The company could implement workarounds for certain offenders who can’t have access to a smartphone.

There are 428 people across the state using ankle monitors, an Oklahoma Department of Corrections spokesperson said. The agency uses the monitors for aggravated drug traffickers, sex offenders and prisoners participating in a GPS-monitored reentry program.

“That is a working technology,” said David Crist, lead compliance officer for Global Accountability. “It’s great in that it does what it should do, but it’s not keeping up with the needs.”

The Absolute ID platform uses artificial intelligence to find patterns in data, like changes in the places a prisoner visits or how often they charge their device, Crist said. It can also flag individuals for review by an officer based on behaviors like missing check-ins, visiting unauthorized areas or allowing their device to die.

Agencies would create policies that determine potential consequences, which could involve a call or visit from an officer, Crist said. He also said no action would be taken without a final decision from a supervising officer.

“Ultimately, what we’re trying to do is reduce some of the workload of officers because they can’t be doing this 24/7,” Crist said. “But some of our automation can. And it’s not necessarily taking any action, but it is providing assistance.”

Parolees and probationers can also text message and call their supervising officers through the platform.

The state could provide smartphones or watches to people on parole or probation or require them to pay for the devices themselves, said Crist. He also said the state could make prisoners’ failure to carry their phone with them or pay their phone bill a violation of parole.

Rep. Ross Ford, R-Broken Arrow, who organized the study, said in an interview with The Frontier that he first learned about Global Accountability several years ago and was impressed by its platform.

Ford said he doesn’t see the associated costs for parolees and probationers, like keeping up with phone bills, as a problem.

“I want to help them get back on their feet,” Ford said. “I want to do everything I can to make sure that they’re successful when they’re released from the penitentiary. But you have to also pay your debt to society too and part of that is paying fees.”

Ford said he thinks using the platform to monitor parole, probation and food stamp benefits could help the state save money. He’s requested another interim study on using the company’s technology for food stamp benefits, but a date hasn’t been posted yet.

Other legislators are more skeptical of the platform. Rep. Jim Olsen, R-Roland, said he thought the platform could be helpful, but he doesn’t see a benefit to Oklahoma being an early adopter. He said he’d like to let software companies work out some of the kinks first and then consider investing when the technology becomes less expensive.

Rep. David Hardin, R-Stilwell, said he remains unconvinced by Global Accountability’s presentation. He said the Department of Corrections would likely need to request a budget increase to fund the program, which would need legislative approval. Unless the company can alleviate some of his concerns, he said he doubts any related bill would pass the Public Safety committee that he chairs.

“You can tell me anything,” Hardin said. “I want to see what you’re doing. I want you to prove to me that it’s going to work before I start authorizing the sale of taxpayer money.”

Research Solutions Launches AI Copyright Tool for Scientific Research

Solution Enables Corporate Researchers To Safely Use Generative AI Tools With Journal Articles Through Integrated Rights Management And Publisher Partnerships

The AI Rights add-on transforms Research Solutions’ Article Galaxy platform into a comprehensive solution for AI rights verification and acquisition, providing instant clarity on usage permissions and seamless access to acquire necessary rights. With direct partnerships with major publishers, the solution enables researchers to confidently analyze scientific literature with enterprise AI platforms like Microsoft Copilot, ChatGPT, and Claude while maintaining full copyright compliance.

“Our customers have been clear: they need AI capabilities to accelerate their research, but they cannot risk non-compliance,” said Roy W. Olivier, CEO of Research Solutions. “This launch delivers on our commitment to eliminate friction in the research workflow while creating sustainable value for publishers. We’re solving a compliance problem while enabling a new era of AI-powered scientific research.”

Research teams confront several complex obstacles when attempting to integrate AI tools into their workflows. Most publishers explicitly prohibit the use of their content in AI applications, yet no streamlined mechanism exists for acquiring necessary permissions. Research Solutions’ AI Rights add-on solves this through several key innovations:

- Comprehensive Rights Management: Users can manage all AI rights sources through a single interface—whether through open access licenses, Reproduction Rights Organization agreements (RROs), direct publisher relationships, or Article Galaxy marketplace acquisition

- Instant Rights Verification: Users immediately see AI usage permissions for any article, removing guesswork and compliance uncertainty

- One-Click Rights Acquisition: Missing permissions can be purchased directly through the Article Galaxy interface with transparent pricing from participating publishers

- Retroactive Rights Purchase: Organizations can acquire AI rights for articles previously purchased, enabling immediate compliance for existing content libraries

- Organization-Wide Licensing: AI Rights acquired apply across the entire organization, eliminating per-use restrictions and ongoing compliance concerns

“The combination of generative AI and scientific literature creates unprecedented opportunities for accelerating discovery, but only when researchers can access content legally and efficiently,” said Chris Bendall, VP of Product Strategy at Research Solutions. “We’ve built a solution that makes AI analysis of scientific content both legally compliant and operationally seamless—turning what was previously a compliance risk into a competitive advantage.”

About Research Solutions

Research Solutions (NASDAQ: RSSS) is a vertical SaaS and AI company that simplifies research workflow for academic institutions, life science companies, and research organizations worldwide. As one of the only publisher-independent marketplaces for scientific, technical, and medical (STM) content, the company uniquely combines AI-powered tools—including an intelligent research assistant and full-text search capabilities—with seamless access to both open access and paywalled research. The platform enables organizations to discover, access, manage, and analyze scientific literature more efficiently, accelerating the pace of scientific discovery.

SOURCE Research Solutions, Inc.

For more information, visit https://www.researchsolutions.com.

View original content to download multimedia: https://www.prnewswire.com/news-releases/research-solutions-unveils-ai-rights-add-on-to-ensure-copyright-safe-ai-use-of-scientific-literature-302554006.html
