AI Insights

Prediction: This Artificial Intelligence (AI) Stock Will Outperform Nvidia by 2030

Key Points

- Nvidia can credit the rise in its stock to relentless demand for its chipsets over the last few years.

- Rising spending on servers, networking equipment, and data centers suggests that infrastructure could be the next big theme in the AI story.

- While rising infrastructure spending bodes well for Nvidia, foundry specialist Taiwan Semiconductor Manufacturing may be even better positioned.

When ChatGPT was released to the broader public on Nov. 30, 2022, Nvidia had a market capitalization of just $345 billion. As of the closing bell on July 25, 2025, its market cap had eclipsed $4.2 trillion, making it the most valuable company in the world — by a pretty wide margin, too.
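To put those two figures in perspective, here is a rough sketch of the arithmetic behind them. It uses only the market caps and dates quoted above; the function name `growth_summary` is purely illustrative, and because the later figure is described as having "eclipsed" $4.2 trillion, the results should be read as approximate lower bounds.

```python
from datetime import date


def growth_summary(start_value, end_value, start_date, end_date):
    """Return (growth multiple, elapsed years, compound annual growth rate)."""
    years = (end_date - start_date).days / 365.25
    multiple = end_value / start_value
    # Annualized rate that would compound start_value into end_value.
    cagr = multiple ** (1 / years) - 1
    return multiple, years, cagr


# Figures quoted in the article: $345 billion on Nov. 30, 2022,
# and roughly $4.2 trillion on July 25, 2025.
multiple, years, cagr = growth_summary(
    345e9, 4.2e12, date(2022, 11, 30), date(2025, 7, 25)
)

print(f"Growth multiple: {multiple:.1f}x over {years:.2f} years")
print(f"Implied annualized growth: {cagr:.0%}")
```

The annualized figure is a simple compounding calculation on market cap, not a measure of shareholder return, since it ignores dividends and changes in share count.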

Given these historic gains, it’s not entirely surprising that for many growth investors, the artificial intelligence (AI) movement revolves around Nvidia. At this point, the company is basically seen as a barometer measuring the overall health of the entire AI sector.


It’s hard to bet against Nvidia, but I do see another stock in the semiconductor realm that appears better positioned for long-term gains.

Let’s explore what makes Taiwan Semiconductor Manufacturing (NYSE: TSM) such a compelling opportunity in the chip space right now, and the catalysts at play that could fuel returns superior to Nvidia’s over the next several years.

The AI infrastructure wave is just starting

I like to think of the AI narrative as a story. For the last few years, the biggest chapter revolved around advanced chipsets called graphics processing units (GPUs), which are used across a variety of generative AI applications. These include building large language models (LLMs), machine learning, robotics, self-driving cars, and more.

These various applications are only now beginning to come into focus. The next big chapter in the AI storyline is how infrastructure is going to play a role in actually developing and scaling up these more advanced technologies.

Global management consulting firm McKinsey & Company estimates that investments in AI infrastructure could reach $6.7 trillion over the next five years, with a good portion of that allocated toward hardware for data centers.

Building on this idea, consider that cloud hyperscalers Amazon, Microsoft, and Alphabet, along with their “Magnificent Seven” peer Meta Platforms, are expected to devote north of $330 billion to capital expenditures (capex) just this year. Much of this is going toward additional servers, chips, and networking equipment for accelerated AI data center expansion.

To me, the aggressive spending on capex from the world’s largest businesses is a strong signal that the infrastructure wave in AI is beginning to take shape.


This is great for Nvidia and even better for TSMC

Rising AI infrastructure investment is a great tailwind for Nvidia but also a source of growth for Advanced Micro Devices, Broadcom, and many others.

Unlike AMD or Nvidia, though, growth for Taiwan Semiconductor (TSMC for short) doesn’t really hinge on the success of a particular product line. In other words, Nvidia and AMD are competing fiercely against one another to win AI workloads, which boils down to which of them can design the most powerful, energy-efficient chips at an affordable price.

The investment case around TSMC is that it could be seen as more of an agnostic player in the AI chip market because its foundry and fabrication services stand to benefit from broader, more secular tailwinds fueling AI infrastructure — regardless of whose chips create the most demand.

Think of TSMC as the company actually making the picks and shovels that Nvidia, AMD, and other chip companies need to go out and sell while competing with one another.

TSMC’s “Nvidia moment” may be here

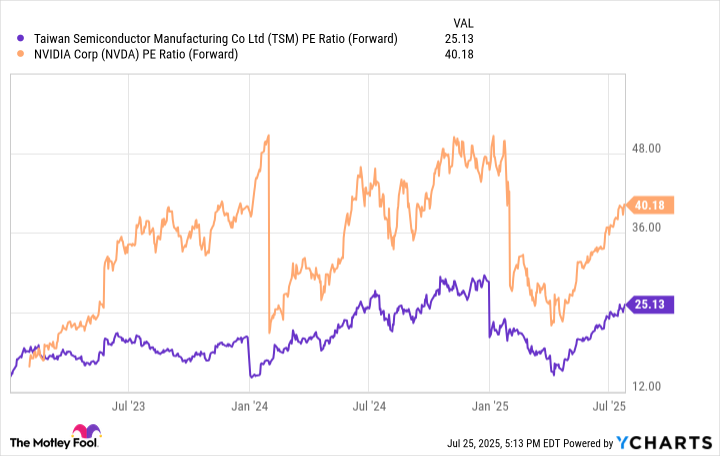

The valuation disparity between Nvidia and TSMC says a couple of things about how the latter is viewed in the broader chip landscape.

TSM PE Ratio (Forward) data by YCharts; PE = price to earnings.

Companies such as Nvidia and AMD rely heavily on TSMC’s foundry and fabrication services, which are essentially the backbone of the chip industry. While some on Wall Street would argue that Nvidia has a technological moat thanks to its one-two punch of chips and software, I think that TSMC has an underappreciated moat that provides the company with broader exposure to the chip industry compared to its peers.

Over the next five years, I think use cases for AI will multiply as businesses seek to expand beyond their current markets in cloud computing, cybersecurity, and enterprise software, among others.

Emerging applications such as autonomous driving and quantum computing will drive demand for GPUs and data center capacity even further. For this reason, TSMC may be on the verge of an “Nvidia moment” featuring prolonged, explosive growth.

TSMC’s modest forward price-to-earnings multiple (P/E) of 25 puts it in a unique position compared to Nvidia (with its forward P/E of 40) for considerable expansion as the infrastructure chapter of AI continues to be written.
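The multiple-expansion argument can be made concrete with a little arithmetic. The sketch below assumes the simple identity price = P/E × earnings per share, so the return from a change in valuation and a change in earnings is the product of the two ratios. Only the forward P/E figures of 25 and 40 come from the article; the function name `implied_return` and the 50% earnings-growth input are hypothetical, chosen for illustration rather than as forecasts.

```python
def implied_return(pe_start, pe_end, earnings_growth):
    """Price return implied by a P/E change and cumulative earnings growth.

    Assumes price = P/E * EPS, so the ending price relative to the
    starting price is (pe_end / pe_start) * (1 + earnings_growth).
    """
    return (pe_end / pe_start) * (1 + earnings_growth) - 1


# Article figures: TSMC forward P/E of 25, Nvidia forward P/E of 40.
# Case 1: TSMC rerates to Nvidia's multiple with flat earnings.
rerating_only = implied_return(25, 40, 0.0)

# Case 2 (hypothetical input): the same rerating plus 50% cumulative
# earnings growth. The 50% is an illustrative assumption, not a forecast.
rerating_plus_growth = implied_return(25, 40, 0.50)

print(f"Rerating alone: {rerating_only:.0%}")
print(f"Rerating plus 50% earnings growth: {rerating_plus_growth:.0%}")
```

The point of the sketch is that a stock starting from a lower multiple has two possible sources of return, earnings growth and multiple expansion, whereas a stock already at a high multiple depends mostly on earnings growth.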

I think Taiwan Semiconductor Manufacturing’s valuation will become increasingly congruent with the company’s growth over the next several years, and so I predict that the stock will outperform Nvidia by 2030.


Adam Spatacco has positions in Alphabet, Amazon, Meta Platforms, Microsoft, and Nvidia. The Motley Fool has positions in and recommends Advanced Micro Devices, Alphabet, Amazon, Meta Platforms, Microsoft, Nvidia, and Taiwan Semiconductor Manufacturing. The Motley Fool recommends Broadcom and recommends the following options: long January 2026 $395 calls on Microsoft and short January 2026 $405 calls on Microsoft. The Motley Fool has a disclosure policy.


Deliberating On The Many Definitions Of Artificial General Intelligence

Artificial general intelligence (AGI) does not yet have a universally accepted definition, but we need one ASAP.


In today’s column, I examine an unresolved controversy in the AI field that hasn’t received the attention it rightfully deserves, namely, what constitutes a sensible and universally agreed-upon definition for pinnacle AI, commonly and vaguely referred to as artificial general intelligence (AGI).

This is a vital matter. At some point, we should be ready to agree whether the advent of AGI has been reached. There is also the matter of gauging AI progress and whether we are getting closer to AGI or veering away from AGI. All told, if there isn’t a wholly accepted universal definition, we will be constantly battling over whether pinnacle AI is in our sights and whether it has truly been attained. This is the classic dilemma of apples versus oranges. A person who defines apples as though they are oranges will be forever in a combative mode when trying to discuss whether someone is holding an apple in their hands.

As Socrates once pointed out, the beginning of wisdom is the definition of terms. There needs to be a concerted effort to properly define what AGI means.

Let’s talk about it.

This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here).

Heading Toward AGI And ASI

First, some fundamentals are required to set the stage for this discussion.

There is a great deal of research going on to further advance AI. The general goal is to either reach artificial general intelligence (AGI) or maybe even the outstretched possibility of achieving artificial superintelligence (ASI).

Overall, the definition of AGI generally consists of aiming for AI that is considered on par with human intellect and can seemingly match our intelligence. ASI is AI that has gone beyond human intellect and would be superior in many, if not all, feasible ways. The idea is that ASI would be able to run circles around humans by outthinking us at every turn. For more details on the nature of conventional AI versus AGI and ASI, see my analysis at the link here.

We have not yet attained the generally envisioned AGI.

In fact, it is unknown whether we will reach AGI at all, or whether AGI might only be achievable decades or perhaps centuries from now. The AGI attainment dates that are floating around vary wildly and are unsubstantiated by any credible evidence or ironclad logic. ASI is even more beyond the pale when it comes to where we are currently with conventional AI.

Controversy About AGI As Terminology

To the surprise of many in the media and the general public at large, there is no universally accepted standardized definition for what AGI consists of.

This lack of an across-the-board formalized definition for AGI spurs numerous difficulties and problems. For example, AI gurus referring to AGI can be making unspoken assumptions about what they believe AGI to be, and therefore stoke confusion since they aren’t referring to the same thing. Discussions can occur at cross purposes due to each respective expert having their own idiosyncratic definition of what AGI is or ought to be.

An especially disquieting concern is that attaining AGI has become a preeminent directional focus for many in the AI industry, yet this is a bit of a mirage since the AI field does not have a said-to-be one-and-only-one North Star that represents what AGI is supposed to be:

- “Recent advances in large language models (LLMs) have sparked interest in ‘achieving human-level ‘intelligence’ as a ‘north-star goal’ of the AI field. This goal is often referred to as ‘artificial general intelligence’ (‘AGI’).”

- “Yet rather than helping the field converge around shared goals, AGI discourse has mired it in controversies.”

- “Researchers diverge on what AGI is and on assumptions about goals and risks. Researchers further contest the motivations, incentives, values, and scientific standing of claims about AGI.”

- “Finally, the building blocks of AGI as a concept — intelligence and generality — are contested in their own right.” (source: Blili-Hamelin et al, “Stop Treating ‘AGI’ as the North-Star Goal of AI Research.” arXiv, February 7, 2025).

The Moving Of The Cheese

In a prior posting, I had noted that some AI luminaries have been opting to define AGI in a manner that suits their specific interests. I refer to this as moving the cheese (see my discussion at the link here). You might be familiar with the movable cheese metaphor — it became part of our cultural lexicon due to a book published in 1998 entitled “Who Moved My Cheese? An Amazing Way To Deal With Change In Your Work And In Your Life”. The book identified that we are all, at times, akin to mice seeking a morsel of cheese in a maze.

OpenAI CEO Sam Altman is especially adept at loosely defining and then redefining AGI. In his personal blog posting entitled “Three Observations” of February 10, 2025, he provided a definition of AGI that said this: “AGI is a weakly defined term, but generally speaking, we mean it to be a system that can tackle increasingly complex problems, at human level, in many fields.”

This AGI definition contains a plethora of ambiguity and came under fierce arguments about being shaped to accommodate OpenAI’s AI products. For example, by indicating that AGI would be at a human level in “many fields”, this seemed to be an immense watering down from the earlier concepts that AGI would be versed in all fields. It is a lot easier to devise pinnacle AI that would be merely accomplished in many fields, versus having to reach a much taller threshold of doing so in all fields.

Still Messing Around

According to a reported interview with Sam Altman that took place recently, Altman made these latest remarks about the AGI moniker:

- “I think it’s not a super useful term.”

- “I think the point of all of this is it doesn’t really matter, and it’s just this continuing exponential model capability that we’ll rely on for more and more things.” (source: “Sam Altman now says AGI is ‘not a super useful term’ – and he’s not alone” by Ryan Browne, CNBC, August 11, 2025).

Once again, this type of chatter about the meaning of AGI has sparked renewed controversy. The remarks seem to try and create distance from the AGI definitions that he and others have touted in the last several years.

Why so?

One plausible reason for wanting distance from the AGI phraseology is the newly released GPT-5. Leading up to GPT-5, expectations had been raised tremendously that we were finally going to have AGI in our hands, ready for immediate use. By and large, though GPT-5 had some interesting advances, it wasn’t even close to any kind of AGI, almost no matter how low a bar one might set for AGI (see my detailed analysis at the link here).

Inspecting AGI Definitions

Let’s go ahead and look at a variety of AGI definitions that have been floating around and are considered as potentially viable or at least noteworthy ways to define AGI. I handily list these AGI definitions so that you can see them collected into one convenient place. Furthermore, it makes for handy analysis and comparison by having them at the front and center for inspection.

Before launching into the AGI definitions, you might find it of keen interest that the AI field readily acknowledges that things are in a state of flux on the heady matter. The Association for the Advancement of Artificial Intelligence (AAAI), considered a top-caliber AI non-profit academic professional association, recently convened a special panel to envision the future of AI, and they, too, acknowledged the confounding nature of what AGI might be.

The AAAI futures report that was published in March 2025 made this pointed commentary about AGI (excerpts):

- “AGI is not a formally defined concept, nor is there any agreed test for its achievement.”

- “Some researchers suggest that ‘we’ll know it when we see it’ or that it will emerge naturally from the right set of principles and mechanisms for AI system design.”

- “In discussions, AGI may be referred to as reaching a particular threshold on capabilities and generality. However, others argue that this is ill-defined and that intelligence is better characterized as existing within a continuous, multidimensional space.”

Strawman Definitions Of AGI

Let’s get started on the various AGI definitions by beginning with this strawman:

- “AGI is a computer that is capable of solving human solvable problems, but not necessarily in human-like ways.” (source: Morris et al, “Levels of AGI: Operationalizing Progress on the Path to AGI.” arXiv, November 4, 2023).

Give the definition a contemplative moment.

Here’s one facet worth noting. Is this AGI definition suggesting that problems unsolvable by humans are completely beyond the capability of AGI? If so, this would dismay many, since a vaunted basis for pursuing AGI is that its advent will presumably lead to cures for cancer and many other diseases (problems that humans have so far been unable to solve).

I trust you can see the challenges associated with devising a universally acceptable, ironclad AGI definition.

In a now classic research paper on the so-called sparks of AGI, the authors provided this definition of AGI:

- “We use AGI to refer to systems that demonstrate broad capabilities of intelligence, including reasoning, planning, and the ability to learn from experience, and with these capabilities at or above human-level.” (source: Bubeck et al, “Sparks of Artificial General Intelligence: Early Experiments with GPT-4.” arXiv, March 22, 2023).

This research paper became a widespread flashpoint both within and beyond the AI community due to claiming that present-day AI of 2023 was showcasing a hint or semblance of AGI. The researchers invoked parlance that AI at the time was revealing sparks of AGI.

Critics and skeptics alike pointed out that the AGI definition was of such a broad and non-specific nature that nearly any AI system could be construed as being ostensibly AGI.

More Definitions Of AGI

In addition to AI researchers defining AGI, many others have done so, too.

The Gartner Group, a longstanding practitioner-oriented think tank on computing in general, provided this definition of AGI in 2024:

- “Artificial General Intelligence (AGI), also known as strong AI, is the (currently hypothetical) intelligence of a machine that can accomplish any intellectual task that a human can perform. AGI is a trait attributed to future autonomous AI systems that can achieve goals in a wide range of real or virtual environments at least as effectively as humans can” (Gartner Group as quoted in Jaffri, A. “Explore Beyond GenAI on the 2024 Hype Cycle for Artificial Intelligence.” Gartner Group, November 11, 2024).

This definition is indicative that some AGI definitions are short in length and others are lengthier, such that this example is a bit longer than the other two AGI definitions noted earlier. There is an espoused belief amongst some in the AI community that a sufficiently suitable AGI definition would have to be quite lengthy, doing so to encompass the essence of what AGI is and what AGI is not.

Another noteworthy aspect of the Gartner Group definition of AGI is that the phrase “strong AI” is mentioned in the definition. The initial impetus for the AGI moniker arose partially due to debates within the AI community about strong AI versus weak AI (see my explanation at the link here).

Here is another example of a multi-sentence AGI definition:

- “An Artificial General Intelligence (AGI) system is a computer that is adaptive to the open environment with limited computational resources and that satisfies certain principles. For AGI, problems are not predetermined and not specified ones; otherwise, there is most probably always a special system that performs better than any general system. I keep the part ‘certain principles’ to be blurry, waiting for future discussions and debates on it.” (source: Xu, “What is Meant by AGI? On The Definition of Artificial General Intelligence.” arXiv, April 16, 2024).

This definition reveals another facet of AGI definitions overall: the importance of defining all terms used within an AGI definition. In this instance, the researcher states that AGI must satisfy “certain principles” and then acknowledges that those principles remain undefined. A lack of completeness leaves any postulated AGI definition open to wide interpretation.

Lots And Lots Of AGI Definitions

Wikipedia has a definition for AGI:

- “Artificial general intelligence (AGI) — sometimes called human‑level intelligence AI—is a type of artificial intelligence capable of performing the full spectrum of cognitively demanding tasks with proficiency comparable to, or surpassing, that of humans” (Wikipedia 2025).

A notable element of this AGI definition and many others is whether AGI is intended to be on par with humans or exceed humans (“comparable to, or surpassing, that of humans”).

There is an ongoing debate in the AI community on this nuanced but crucial consideration. One viewpoint is that the coined artificial superintelligence or ASI encompasses AI that is beyond or above human capabilities, while AGI is solely intended to be AI that meets or is on par with human capabilities.

IBM has provided a definition of AGI:

- “Artificial general intelligence (AGI) is a hypothetical stage in the development of machine learning (ML) in which an artificial intelligence (AI) system can match or exceed the cognitive abilities of human beings across any task. It represents the fundamental, abstract goal of AI development: the artificial replication of human intelligence in a machine or software” (IBM as quoted in Bergmann et al, “What is artificial general intelligence (AGI)?” IBM, September 17, 2024).

An element of special interest in this AGI definition is the reference to machine learning (ML). There are AGI definitions that refer to subdisciplines within the AI field, such as referring to ML or other areas, such as robotics or autonomous systems.

Should an AGI definition explicitly or firmly refer to AI practices or subdisciplines?

The question is often asked since AGI then seemingly becomes tied to specific AI fields of study. The contention is that the definition of AGI should be fully standalone and not rely upon references to AI fields or subfields (which are subject to change, and otherwise seemingly unnecessary to strictly define AGI per se).

OpenAI has also posted a definition of AGI, as contained within the official OpenAI Charter statement:

- “AGI is defined as highly autonomous systems that outperform humans at most economically valuable work.”

This definition brings up an emerging trend associated with AGI definitions. The wording or a similar variation of “at most economically valuable work” is increasingly being used in the latest definitions of AGI. This appears to tie the capabilities of AGI to the notion of economically valuable work.

Critics argue that this is a limiting factor that does not suitably belong in the definition of AGI and perhaps serves a desired purpose rather than acting to fully and openly define AGI.

My Working Definition Of AGI

The working definition of AGI that I have been using is this strawman that I composed when the AGI moniker was initially coming into vogue as a catchphrase:

- “AGI is defined as an AI system that exhibits intelligent behavior of both a narrow and general manner on par with that of humans, in all respects” (source: Eliot, “Figuring out what artificial general intelligence consists of”, Forbes, December 6, 2023).

The reference to intelligent behavior in both a narrow and general manner is an acknowledgment that historically, AGI as a phrase partially arose to supersede the generation of AI that was viewed as being overly narrow and not of a general nature (such as expert systems, knowledge-based systems, rules-based systems).

Another element is that AGI would be on par with the intelligent behavior of humans in all respects. Thus, AGI would not be superhuman and would instead sit on the same intellectual level as humankind, doing so in all respects, comprehensively and exhaustively.

Mindfully Asking What AGI Means

When you see a banner headline proclaiming that AGI is here, or getting near, or maybe eons away, I hope that the first thought you have is to dig into the meaning of AGI as it is being employed in that media proclamation.

Perhaps the declaration refers to apples rather than oranges or has a definition that is sneakily devised to tilt toward one vantage point over another. AGI has regrettably become a catchall. Some believe we should discard the AGI moniker and come up with a new name for pinnacle AI. Others assert that this might merely be a form of trickery to avoid owning up to the harsh fact that we have not yet attained AGI.

For the time being, I would wager that the AGI moniker is going to stick around. It has gotten enough traction that even though it is loosey-goosey, it does have a certain amount of popularized name recognition. If AGI as a designation is going to have long legs, it would be significant to reach a thoughtful agreement on a universally accepted definition.

The famous English novelist Samuel Butler made this pointed remark: “A definition is the enclosing of a wilderness of ideas within a wall of words.” Do your part to help enclose a wilderness of ideas about pinnacle AI into a neatly packed and fully sensible set of words.

Fame and possibly fortune await.

