AI Research

Decision-Making AI “Scientists” Perform Sophisticated, Interdisciplinary Research

Imagine you’re a molecular biologist wanting to launch a project seeking treatments for a newly emerging disease. You know you need the expertise of a virologist and an immunologist, plus a bioinformatics specialist to help analyze and generate insights from your data. But you lack the resources or connections to build a big multidisciplinary team.

Researchers at Chan Zuckerberg Biohub San Francisco (CZ Biohub SF) and at Stanford University now offer a novel solution to this dilemma. They’ve developed an AI-driven “Virtual Lab,” through which a team of AI agents, each equipped with varied scientific expertise, can tackle sophisticated and open-ended scientific problems by formulating, refining, and carrying out a complex research strategy. The agents can even conduct virtual experiments, producing results that can be validated in real-life laboratories.

In a paper published in Nature, “The Virtual Lab of AI agents designs new SARS-CoV-2 nanobodies,” the team, headed by John Pak, PhD, of CZ Biohub SF, and Stanford’s James Zou, PhD, report on their development of the Virtual Lab platform, which they describe as “… an AI-human research collaboration to perform sophisticated, interdisciplinary science research.”

“Interdisciplinary science research is complex, requiring increasingly large teams of researchers with expertise in diverse fields of science,” the authors wrote. But assembling and coordinating potentially large teams of researchers from different specialties can be challenging. “Furthermore, it can be harder for under-resourced groups without connections to many experts across fields to engage in complex, interdisciplinary science, especially when dedicated interdisciplinary research funding is lacking,” they pointed out.

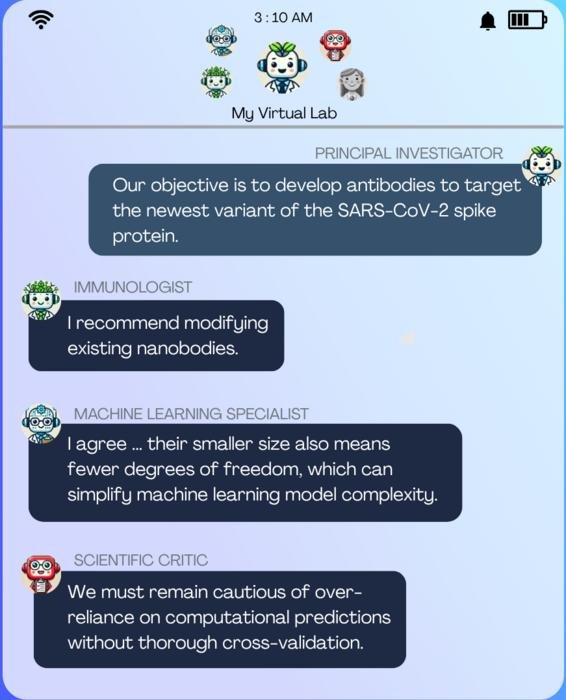

In the Virtual Lab a human user creates a “Principal Investigator” AI agent (the PI) that assembles and directs a team of additional AI agents emulating the specialized research roles seen in science labs. The agents are run by a large language model (LLM), giving them scientific reasoning and decision-making capabilities. The human researcher proposes a scientific question and then monitors meetings in which the PI agent exchanges ideas with the team of specialist agents to advance the research. In addition to the PI agent and specialist agents, the Virtual Lab platform includes a Scientific Critic agent, a generalist whose role is to ask probing questions and inject a dose of skepticism into the process. “We found the Critic to be quite essential, and also reduced hallucinations,” Zou said.

The team further explained, “In the Virtual Lab, a human researcher guides a set of interdisciplinary AI agents, such as a biologist or computer scientist, through a set of research meetings that tackle the different phases of a research project…The AI agents are run by an LLM that powers their scientific reasoning abilities with instructions that guide each agent’s scientific expertise and interaction with the other agents and the human researcher.”

The Virtual Lab performs research through both team meetings and individual meetings. In both cases, the human researcher provides an initial agenda to guide the discussion, and then the agents discuss how to address the agenda. In team meetings, a broad research question is discussed by all of the agents, which work together to come up with an answer. In individual meetings, a more specific task is given to a single agent, for example, writing code for a machine learning model. Given this task, the agent then either works alone or together with another agent providing “critical feedback.”
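The two meeting types described above can be pictured with a small sketch. This is not the authors' implementation: the `Agent` class, the `team_meeting` and `individual_meeting` functions, the speaking order, and the `stub_llm` stand-in for a real language model are all hypothetical names and assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# Hypothetical stand-in for an LLM call: prompt in, reply text out.
LLM = Callable[[str], str]


@dataclass
class Agent:
    title: str      # e.g. "Principal Investigator" or "Immunologist"
    expertise: str  # short description folded into the agent's prompt

    def respond(self, llm: LLM, agenda: str, transcript: List[str]) -> str:
        prompt = (
            f"You are the {self.title}; your expertise is {self.expertise}.\n"
            f"Agenda: {agenda}\n"
            "Discussion so far:\n" + "\n".join(transcript)
        )
        return f"{self.title}: {llm(prompt)}"


def team_meeting(llm: LLM, agenda: str, pi: Agent, specialists: List[Agent],
                 critic: Agent, rounds: int = 2) -> List[str]:
    """All agents discuss a broad question; the PI gives the final answer."""
    transcript = [f"Human researcher: {agenda}"]
    for _ in range(rounds):
        # Assumed order: PI first, then each specialist, then the critic.
        for agent in [pi, *specialists, critic]:
            transcript.append(agent.respond(llm, agenda, transcript))
    transcript.append(pi.respond(llm, f"Summarize and answer: {agenda}", transcript))
    return transcript


def individual_meeting(llm: LLM, agenda: str, agent: Agent,
                       critic: Optional[Agent] = None, rounds: int = 1) -> List[str]:
    """One agent works on a specific task, optionally with critical feedback."""
    transcript = [f"Human researcher: {agenda}"]
    for _ in range(rounds):
        transcript.append(agent.respond(llm, agenda, transcript))
        if critic is not None:
            transcript.append(critic.respond(llm, agenda, transcript))
    if critic is not None:
        # The agent revises its answer once after the last round of feedback.
        transcript.append(agent.respond(llm, f"Revise your answer: {agenda}", transcript))
    return transcript


def stub_llm(prompt: str) -> str:
    """Placeholder model so the sketch runs without any external service."""
    return f"[reply to a {len(prompt.split())}-word prompt]"
```

In this sketch every meeting returns its full transcript as a list of lines, which is one simple way to keep the record of the discussion available for a human to read afterward.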

For their reported study, the team used the Virtual Lab platform to investigate a timely research question, designing antibodies or nanobodies to bind to the spike protein of new variants of the SARS-CoV-2 virus. After just a few days working together, the Virtual Lab team had designed and implemented an innovative computational pipeline and had presented Pak and Zou with blueprints for dozens of binders.

Experiments in Pak’s lab found that two of the newly designed nanobodies bound to the spike protein of recent SARS-CoV-2 variants, a significant enough finding that Pak expects to publish studies on them. “We experimentally validated 92 mutant nanobodies designed by the Virtual Lab and found that over 90% of the nanobodies were expressed and soluble, with two promising candidates showing unique binding profiles to the recent JN.1 and KP.3 spike RBD variants,” the investigators reported in their paper. This result, they suggest, demonstrates that the Virtual Lab’s AI-human collaboration can perform complex, interdisciplinary science research that translates to validated results in the real world.

The overall Virtual Lab study was led by Kyle Swanson, a PhD student in Zou’s group. The team found that while human researchers participated in AI scientists’ meetings and offered guidance at key moments, their words made up only about one percent of all conversations. The vast majority of discussions, decisions, and analyses were performed by the AI agents themselves.

“What was once this crazy science fiction idea is now a reality,” said Pak, group leader of the Biohub SF Protein Sciences Platform. “The AI agents came up with a pipeline that was quite creative. But at the same time, it wasn’t outrageous or nonsensical. It was very reasonable—and they were very fast…You’d think there’d be no way AI agents talking together could propose something akin to what a human scientist would come up with, but we found here that they really can. It’s pretty shocking.”

Zou is a pioneering AI researcher who has been recognized widely for breakthroughs in using AI for biomedical research, including winning the International Society of Computational Biology’s 2025 Overton Prize and being named in the New York Times’ 2024 Good Tech Awards for SyntheMol, an AI system that can design and validate novel antibiotics.

“This is the first demonstration of autonomous AI agents really solving a challenging research problem, from start to finish,” said Zou, an associate professor of biomedical data science who leads Stanford University’s AI for Health program and is also a CZ Biohub SF Investigator. “The AI agents made good decisions about complex problems and were able to quickly design dozens of protein candidates that we could then test in lab experiments.”

It’s become increasingly common for human scientists to employ LLMs to help with science research, such as analyzing data, writing code, and even designing proteins. Zou and Pak’s Virtual Lab platform, however, is to their knowledge the first to apply multistep reasoning and interdisciplinary expertise to successfully address an open-ended research question.

“The strength of the Virtual Lab comes from its multi-agent architecture, which empowers an AI-human scientific collaboration via a series of meetings between a human researcher and a team of interdisciplinary LLM agents,” the scientists stated. “The different backgrounds of the various scientist agents leads to discussions that approach complicated scientific questions from multiple angles, thereby contributing to comprehensive answers.” The human researcher’s input is also vital for providing high-level guidance where the agents lack relevant context, for example, choosing readily available computational tools, or introducing constraints in experimental validation, they stated.

Zou and Pak first met at one of the biweekly Biohub SF Investigator Program meetings. “I had just seen James give a talk at the previous Investigator meeting, where he said he wished he could do more experimental work,” Pak said. “So I decided to introduce myself.” That conversation, in the spring of 2024, sparked a collaboration that drew on Zou’s AI expertise and Pak’s expertise in protein science.

When asked if he’s worried about AI scientists replacing him, Pak says no. Instead, he thinks these new virtual collaborators will just enhance his work. “This project opened the door for our Protein Science team to test a lot more well-conceived ideas very quickly,” he said. “The Virtual Lab gave us more work, in a sense, because it gave us more ideas to test. If AI can produce more testable hypotheses, that’s more work for everyone.”

The results, said Pak and Zou, not only demonstrate the potential benefits of human–AI collaborations but also highlight the importance of diverse perspectives in science. Even in these virtual settings, instructing agents to assume different roles and bring varying perspectives to the table resulted in better outcomes than one AI agent working alone, they said. And because the discussions result in a transcript that human team members can access and review, researchers can feel confident about why certain decisions were made and probe further if they have questions or concerns.

“The agents and the humans are all speaking the same language, so there’s nothing ‘black box’ about it, and the collaboration can progress very smoothly,” Pak said. “It was a really positive experience overall, and I feel pretty confident about applying the Virtual Lab in future research.”

Zou says the existing platform is designed for biomedical research questions, but modifications would allow it to be used in a much wider array of scientific disciplines. “We’re demonstrating a new paradigm where AI is not just a tool we use for a specific step in our research, but it can actually be a primary driver of the whole process to generate discoveries. It’s a big shift, and we’re excited to see how it helps us advance in all areas of research.” The authors added, “While the experimental results here are limited to the domain of nanobody design, with future work, we envision the Virtual Lab as a powerful framework for human researchers to engage in interdisciplinary science research with the help of LLMs.” They continued, “… the Virtual Lab architecture of LLM agents and meetings is agnostic to specific research questions or scientific domains. The Virtual Lab architecture can be implemented with any set of scientist agents and any human researcher, and the conversations in the meetings will naturally adapt based on the human researcher’s agenda and the backgrounds of the agents.”


Fight AI-powered cyber attacks with AI tools, intelligence leaders say

Cyber defenders need AI tools to fend off a new generation of AI-powered attacks, the head of the National Geospatial-Intelligence Agency said Wednesday.

“The concept of using AI to combat AI attack or something like that is very real to us. So this, again, is commanders’ business. You need to enable your [chief information security officer] with the tools that he or she needs in order to employ AI to properly handle AI-generated threats,” Vice Adm. Frank Whitworth said at the Billington Cybersecurity Summit Wednesday.

Artificial intelligence has reshaped cyber, making it easier for hackers to manipulate data and craft more convincing fraud campaigns, like phishing emails used in ransomware attacks.

Whitworth spoke a day after Sean Cairncross, the White House’s new national cyber director, called for a “whole-of-nation” approach to ward off foreign-based cyberattacks.

“Engagement and increased involvement with the private sector is necessary for our success,” Cairncross said Tuesday at the event. “I’m committed to marshalling a unified, whole-of-nation approach on this, working in lockstep with our allies who share our commitment to democratic values, privacy and liberty…Together, we’ll explore concepts of operation to enable our extremely capable private sector, from exposing malign actions to shifting adversaries’ risk calculus and bolstering resilience.”

The Pentagon has been incorporating AI into everything from administrative tasks to combat. The NGA has long used it to spot and predict threats; use of its signature Maven platform has doubled since January and quadrupled since March 2024.

But the agency is also using “good old-fashioned automation” to more quickly make the military’s maps.

“This year, we were able to produce 7,500 maps of the area involving Latin America and a little bit of Central America…that would have been 7.5 years of work, and we did it in 7.5 weeks,” Whitworth said. “Sometimes just good old-fashioned automation, better practices of using automation, it helps you achieve some of the speed, the velocity that we’re looking for.”
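As a rough check on the figures Whitworth quoted, the arithmetic can be worked through in a few lines. The only assumption added here is the conversion of 365.25 days per year into weeks; the map count and the two durations come from his remarks.

```python
# Figures quoted by Whitworth.
MAPS = 7_500
MANUAL_YEARS = 7.5
AUTOMATED_WEEKS = 7.5

# Assumption: an average year of 365.25 days, i.e. about 52.18 weeks.
WEEKS_PER_YEAR = 365.25 / 7

manual_weeks = MANUAL_YEARS * WEEKS_PER_YEAR

# How many times faster the automated process was.
speedup = manual_weeks / AUTOMATED_WEEKS

# Implied production rates under each approach.
maps_per_week_manual = MAPS / manual_weeks
maps_per_week_automated = MAPS / AUTOMATED_WEEKS

print(f"speedup: about {speedup:.0f}x")
print(f"manual: about {maps_per_week_manual:.0f} maps per week")
print(f"automated: {maps_per_week_automated:.0f} maps per week")
```

Because both durations are 7.5 units, the speedup is simply the number of weeks in a year, roughly 52-fold, which corresponds to going from about 19 maps a week to 1,000.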

The military’s top officer also stressed the importance of using advanced tech to monitor and preempt modern threats.

“There’s always risk of unintended escalation, and that’s what’s so important about using advanced tech tools to understand the environment that we’re operating in and to help leaders see and sense the risk that we’re facing. And there’s really no shortage of those risks right now,” said Gen. Dan Caine, chairman of the Joint Chiefs of Staff, who has an extensive background in irregular warfare and special operations, which can lean heavily on cutting-edge technologies.

“The fight is now centered in many ways around our ability to harvest all of the available information, put it into an appropriate data set, stack stuff on top of it—APIs and others—and end up with a single pane of glass that allows commanders at every echelon…to see that, those data bits at the time and place that we need to to be able to make smart tactical, operational and strategic decisions that will allow us to win and dominate on the battlefields of the future. And so AI is a big part of that,” Caine said.

The Pentagon recently awarded $200 million in AI contracts while the Army doubled down on its partnership with Palantir with a decade-long contract potentially worth $10 billion. The Pentagon has also curbed development of its primary AI platform, Advana, and slashed staff in its chief data and AI office with plans of a reorganization that promises to “accelerate Department-wide AI transformation” and make the Defense Department “an AI-first enterprise.”


Study sheds light on hurdles faced in transforming NHS health care with AI

Implementing artificial intelligence (AI) into NHS hospitals is far harder than initially anticipated, with complications around governance, contracts, data collection, harmonization with old IT systems, finding the right AI tools and staff training, finds a major new UK study led by UCL researchers.

Authors of the study, published in eClinicalMedicine, say the findings should provide timely and useful learning for the UK Government, whose recent 10-year NHS plan identifies digital transformation, including AI, as a key platform to improving the service and patient experience.

In 2023, NHS England launched a program to introduce AI to help diagnose chest conditions, including lung cancer, across 66 NHS hospital trusts in England.

The trusts are grouped into 12 imaging diagnostic networks: these hospital networks mean more patients have access to specialist opinions. Key functions of these AI tools included prioritizing critical cases for specialist review and supporting specialists’ decisions by highlighting abnormalities on scans.

The research was conducted by a team from UCL, the Nuffield Trust, and the University of Cambridge, which analyzed how procurement and early deployment of the AI tools went. The study is one of the first to analyze real-world implementation of AI in health care.

Evidence from previous studies, mostly laboratory-based, suggested that AI might benefit diagnostic services by supporting decisions, improving detection accuracy, reducing errors and easing workforce burdens.

In this UCL-led study, the researchers reviewed how the new diagnostic tools were procured and set up through interviews with hospital staff and AI suppliers, identifying any pitfalls but also any factors that helped smooth the process.

They found that setting up the AI tools took longer than anticipated by the program’s leadership. Contracting took between four and 10 months longer than anticipated, and by June 2025, 18 months after contracting was meant to be completed, about one-third (23 out of 66) of the hospital trusts were not yet using the tools in clinical practice.

Key challenges included engaging clinical staff with already high workloads in the project, embedding the new technology in aging and varied NHS IT systems across dozens of hospitals, and a general lack of understanding of, and skepticism about, the use of AI in health care among staff.

The study also identified important factors which helped embed AI, including national program leadership and local imaging networks sharing resources and expertise, high levels of commitment from hospital staff leading implementation, and dedicated project management.

The researchers concluded that while “AI tools may offer valuable support for diagnostic services, they may not address current health care service pressures as straightforwardly as policymakers may hope” and are recommending that NHS staff are trained in how AI can be used effectively and safely and that dedicated project management is used to implement schemes like this in the future.

First author Dr. Angus Ramsay (UCL Department of Behavioral Science and Health) said, “In July ministers unveiled the Government’s 10-year plan for the NHS, of which a digital transformation is a key platform.

“Our study provides important lessons that should help strengthen future approaches to implementing AI in the NHS.

“We found it took longer to introduce the new AI tools in this program than those leading the program had expected.

“A key problem was that clinical staff were already very busy—finding time to go through the selection process was a challenge, as was supporting integration of AI with local IT systems and obtaining local governance approvals. Services that used dedicated project managers found their support very helpful in implementing changes, but only some services were able to do this.

“Also, a common issue was the novelty of AI, suggesting a need for more guidance and education on AI and its implementation.

“AI tools can offer valuable support for diagnostic services, but they may not address current health care service pressures as simply as policymakers may hope.”

The researchers conducted their evaluation between March and September last year, studying 10 of the participating networks and focusing in depth on six NHS trusts. They interviewed network teams, trust staff and AI suppliers, observed planning, governance and training and analyzed relevant documents.

Some of the imaging networks and many of the hospital trusts within them were new to procuring and working with AI.

The problems involved in setting up the new tools varied—for example, in some cases, those procuring the tools were overwhelmed by a huge amount of very technical information, increasing the likelihood of key details being missed. Consideration should be given to creating a national approved shortlist of potential suppliers to facilitate procurement at local level, the researchers said.

Another problem was an initial lack of enthusiasm among some NHS staff for the new technology in this early phase, with some more senior clinical staff raising concerns about the potential impact of AI making decisions without clinical input and about where accountability would lie if a condition was missed.

The researchers found the training offered to staff did not address these issues sufficiently across the wider workforce—hence their call for early and ongoing training on future projects.

In contrast, however, the study team found the process of procurement was supported by advice from the national team and imaging networks learning from each other.

The researchers also observed high levels of commitment and collaboration between local hospital teams (including clinicians and IT) working with AI supplier teams to progress implementation within hospitals.

Senior author Professor Naomi Fulop (UCL Department of Behavioral Science and Health) said, “In this project, each hospital selected AI tools for different reasons, such as focusing on X-ray or CT scanning, and purposes, such as to prioritize urgent cases for review or to identify potential symptoms.

“The NHS is made up of hundreds of organizations with different clinical requirements and different IT systems and introducing any diagnostic tools that suit multiple hospitals is highly complex. These findings indicate AI might not be the silver bullet some have hoped for but the lessons from this study will help the NHS implement AI tools more effectively.”

While the study has added to the very limited body of evidence on the implementation and use of AI in real-world settings, it focused on procurement and early deployment. The researchers are now studying the use of AI tools following early deployment when they have had a chance to become more embedded.

Further, the researchers did not interview patients and caregivers and are therefore now conducting such interviews to address important gaps in knowledge about patient experiences and perspectives, as well as considerations of equity.

More information: Procurement and early deployment of artificial intelligence tools for chest diagnostics in NHS services in England: A rapid, mixed method evaluation, eClinicalMedicine (2025). DOI: 10.1016/j.eclinm.2025.103481

Citation: Study sheds light on hurdles faced in transforming NHS health care with AI (2025, September 10), retrieved 10 September 2025 from https://medicalxpress.com/news/2025-09-hurdles-nhs-health-ai.html



‘The New Age of Sexism’ explores how misogyny is replicated in AI and emerging tech

Artificial intelligence and emerging technologies are already reshaping the world around us. But how are age-old inequalities showing up in this new digital frontier? In “The New Age of Sexism,” author and feminist activist Laura Bates explores the biases now being replicated everywhere from ChatGPT to the Metaverse. Amna Nawaz sat down with Bates to discuss more.
