A technology called Dynamic Targeting could enable spacecraft to decide, autonomously and within seconds, where to best make science observations from orbit.

In a recent test, NASA showed how artificial intelligence-based technology could help orbiting spacecraft provide more targeted and valuable science data. The technology enabled an Earth-observing satellite for the first time to look ahead along its orbital path, rapidly process and analyze imagery with onboard AI, and determine where to point an instrument. The whole process took less than 90 seconds, without any human involvement.

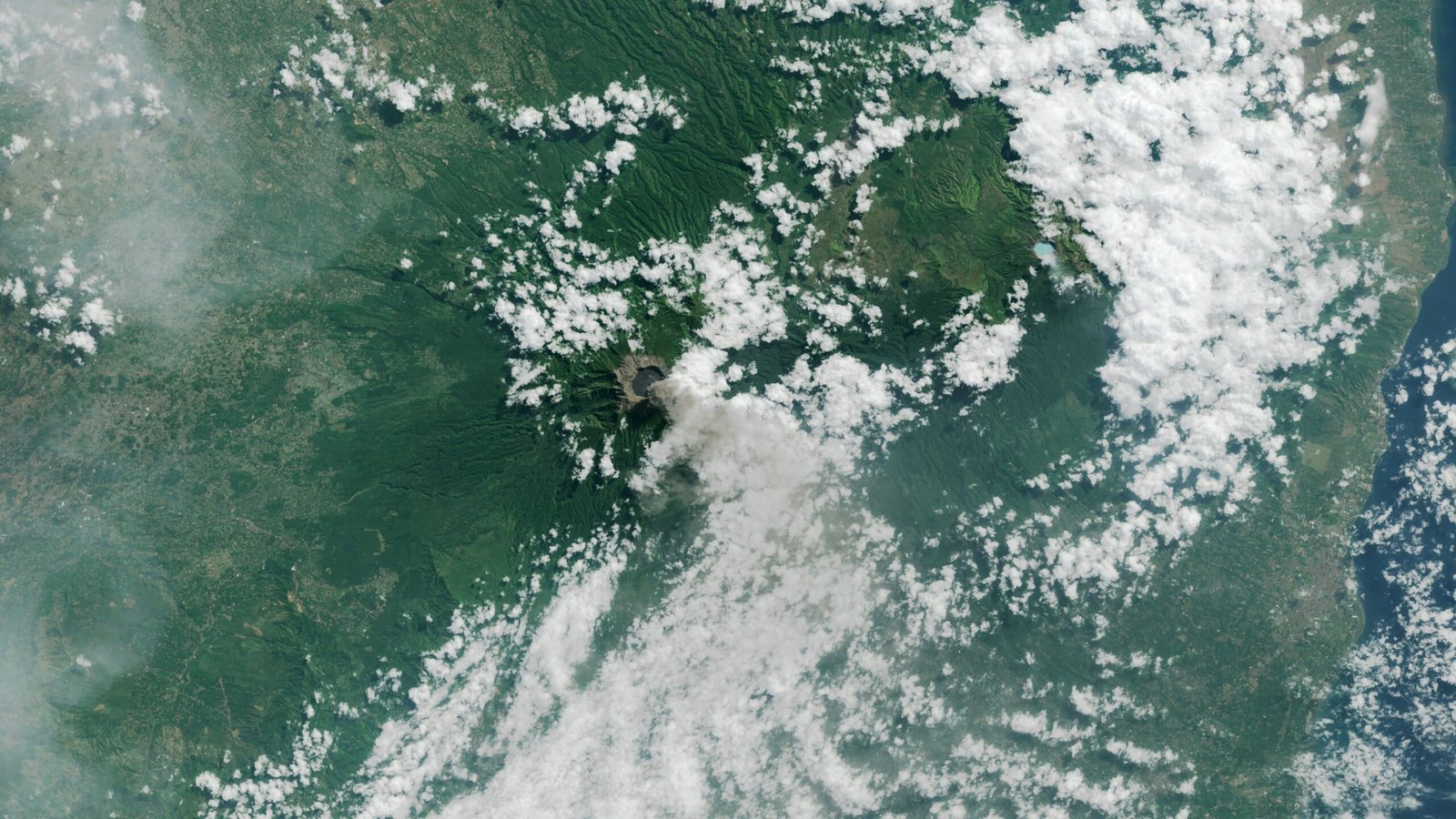

Called Dynamic Targeting, the concept has been in development for more than a decade at NASA’s Jet Propulsion Laboratory in Southern California. The first of a series of flight tests occurred aboard a commercial satellite in mid-July. The goal: to show the potential of Dynamic Targeting to enable orbiters to improve ground imaging by avoiding clouds and also to autonomously hunt for specific, short-lived phenomena like wildfires, volcanic eruptions, and rare storms.

“The idea is to make the spacecraft act more like a human: Instead of just seeing data, it’s thinking about what the data shows and how to respond,” said Steve Chien, a technical fellow in AI at JPL and principal investigator for the Dynamic Targeting project. “When a human sees a picture of trees burning, they understand it may indicate a forest fire, not just a collection of red and orange pixels. We’re trying to make the spacecraft have the ability to say, ‘That’s a fire,’ and then focus its sensors on the fire.”

This first flight test for Dynamic Targeting wasn’t hunting specific phenomena like fires — that will come later. Instead, the point was avoiding an omnipresent phenomenon: clouds.

Most science instruments on orbiting spacecraft look down at whatever is beneath them. However, for Earth-observing satellites with optical sensors, clouds can get in the way as much as two-thirds of the time, blocking views of the surface. To overcome this, Dynamic Targeting looks 300 miles (500 kilometers) ahead and has the ability to distinguish between clouds and clear sky. If the scene is clear, the spacecraft images the surface when passing overhead. If it’s cloudy, the spacecraft cancels the imaging activity to save data storage for another target.
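The image-or-skip decision described above can be sketched in a few lines of Python. This is only an illustration, not the flight software: the function names, the 0/1 cloud-mask representation, and the 50% threshold are all assumptions made for the example, since the article does not say how the onboard system scores a scene.

```python
from typing import Sequence


def cloud_fraction(mask: Sequence[Sequence[int]]) -> float:
    """Fraction of look-ahead pixels flagged as cloud (1 = cloud, 0 = clear)."""
    cells = [cell for row in mask for cell in row]
    if not cells:
        raise ValueError("cloud mask is empty")
    return sum(cells) / len(cells)


def decide(mask: Sequence[Sequence[int]], max_cloud_fraction: float = 0.5) -> str:
    """Return "image" for a mostly clear scene, "skip" otherwise.

    max_cloud_fraction is a hypothetical threshold chosen for illustration.
    """
    if cloud_fraction(mask) <= max_cloud_fraction:
        return "image"
    return "skip"  # cancel the activity and save storage for another target


# Toy 2x2 look-ahead masks (assumed data, not real imagery).
mostly_clear = [[0, 0], [0, 1]]
mostly_cloudy = [[1, 1], [1, 0]]
print(decide(mostly_clear), decide(mostly_cloudy))
```

In practice the mask would come from the onboard cloud-detection model, and the threshold would depend on what the science team considers usable.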

“If you can be smart about what you’re taking pictures of, then you only image the ground and skip the clouds. That way, you’re not storing, processing, and downloading all this imagery researchers really can’t use,” said Ben Smith of JPL, an associate with NASA’s Earth Science Technology Office, which funds the Dynamic Targeting work. “This technology will help scientists get a much higher proportion of usable data.”

The testing is taking place on CogniSAT-6, a briefcase-size CubeSat that launched in March 2024. The satellite — designed, built, and operated by Open Cosmos — hosts a payload designed and developed by Ubotica featuring a commercially available AI processor. While working with Ubotica in 2022, Chien’s team conducted tests aboard the International Space Station running algorithms similar to those in Dynamic Targeting on the same type of processor. The results showed the combination could work for space-based remote sensing.

Since CogniSAT-6 lacks an imager dedicated to looking ahead, the spacecraft tilts forward 40 to 50 degrees to point its optical sensor, a camera that sees both visible and near-infrared light. Once look-ahead imagery has been acquired, Dynamic Targeting’s advanced algorithm, trained to identify clouds, analyzes it. Based on that analysis, the Dynamic Targeting planning software determines where to point the sensor for cloud-free views. Meanwhile, the satellite tilts back toward nadir (looking directly below the spacecraft) and snaps the planned imagery, capturing only the ground.
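As a rough sketch of the planning step, suppose the cloud analysis produces a cloud fraction for each of several candidate pointing offsets. The planner could then pick the clearest one. The candidate list, the 20% threshold, and the tie-break toward nadir are assumptions for illustration; the article does not describe how the actual planning software chooses.

```python
from typing import Optional, Sequence, Tuple


def pick_pointing_offset(
    candidates: Sequence[Tuple[float, float]],
    max_cloud_fraction: float = 0.2,
) -> Optional[float]:
    """Choose a pointing offset (degrees from nadir) with the least cloud.

    candidates: (offset_deg, cloud_fraction) pairs from the look-ahead analysis.
    Returns None when no candidate is clear enough, meaning "skip this target".
    The threshold is a hypothetical value, not a mission parameter.
    """
    clear = [
        (fraction, abs(offset), offset)
        for offset, fraction in candidates
        if fraction <= max_cloud_fraction
    ]
    if not clear:
        return None
    # Lowest cloud fraction wins; ties go to the offset closest to nadir.
    return min(clear)[2]


# Assumed analysis results for three candidate offsets.
print(pick_pointing_offset([(-10.0, 0.8), (0.0, 0.3), (10.0, 0.05)]))
```

Returning `None` keeps the "cancel and save storage" case explicit rather than forcing the sensor toward a cloudy scene.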

This all takes place in 60 to 90 seconds, depending on the original look-ahead angle, as the spacecraft speeds in low Earth orbit at nearly 17,000 mph (7.5 kilometers per second).
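A quick back-of-the-envelope check shows how these figures fit together. The sketch below uses the article's numbers (500 kilometers of look-ahead, 7.5 kilometers per second) and makes one simplifying assumption: that the point beneath the spacecraft moves along the ground at the quoted orbital speed. The true ground-track speed is somewhat lower, so the real lead time would be a little longer.

```python
KM_PER_MILE = 1.609344  # exact definition of the international mile


def lead_time_s(look_ahead_km: float, ground_speed_km_s: float) -> float:
    """Seconds until the spacecraft passes over a point seen look_ahead_km ahead."""
    return look_ahead_km / ground_speed_km_s


def km_s_to_mph(speed_km_s: float) -> float:
    """Convert kilometers per second to miles per hour."""
    return speed_km_s * 3600.0 / KM_PER_MILE


t = lead_time_s(500.0, 7.5)
print(f"lead time: {t:.1f} s")
print(f"7.5 km/s is about {km_s_to_mph(7.5):,.0f} mph")
```

Under that assumption the spacecraft has roughly 67 seconds to analyze the imagery and re-point, which falls inside the 60-to-90-second window quoted above. The conversion also shows 7.5 kilometers per second is a little under 17,000 mph, matching the article's "nearly."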

With the cloud-avoidance capability now proven, the next test will be hunting for storms and severe weather — essentially targeting clouds instead of avoiding them. Another test will be to search for thermal anomalies like wildfires and volcanic eruptions. The JPL team developed unique algorithms for each application.

“This initial deployment of Dynamic Targeting is a hugely important step,” Chien said. “The end goal is operational use on a science mission, making for a very agile instrument taking novel measurements.”

There are multiple visions for how that could happen, possibly even on spacecraft exploring the solar system. In fact, Chien and his JPL colleagues drew some inspiration for their Dynamic Targeting work from another project of theirs: using data from ESA’s (the European Space Agency’s) Rosetta orbiter to demonstrate the feasibility of autonomously detecting and imaging plumes emitted by comet 67P/Churyumov-Gerasimenko.

On Earth, adapting Dynamic Targeting for use with radar could allow scientists to study dangerous extreme winter weather events called deep convective ice storms, which are too rare and short-lived to closely observe with existing technologies. Specialized algorithms would identify these dense storm formations with a satellite’s look-ahead instrument. Then a powerful, focused radar would pivot to keep the ice clouds in view, “staring” at them as the spacecraft speeds by overhead and gathers a bounty of data over six to eight minutes.

Some ideas involve using Dynamic Targeting on multiple spacecraft: The results of onboard image analysis from a leading satellite could be rapidly communicated to a trailing satellite, which could be tasked with targeting specific phenomena. The data could even be fed to a constellation of dozens of orbiting spacecraft. Chien is leading a test of that concept, called Federated Autonomous MEasurement, beginning later this year.

Melissa Pamer

Jet Propulsion Laboratory, Pasadena, Calif.

626-314-4928

melissa.pamer@jpl.nasa.gov

2025-094