AI Research

Rutgers University’s Miller Center to Help Lead International Summit on Artificial Intelligence

Newswise — Rutgers University’s Miller Center on Policing and Community Resilience is proud to announce its partnership in the upcoming Justice, Public Safety, and Security Artificial Intelligence Summit on Sept. 9 and 10 at the Hyatt Regency in Tysons Corner, Va.

The summit brings together national and international leaders in government, academia, standards organizations and industry to explore the transformative role of artificial intelligence (AI) in the justice and public safety sectors. This two-day event will address the operational, policy and technological dimensions of AI deployment, offering a platform for collaboration, innovation, and responsible integration of emerging tools in the service of community safety.

The Miller Center joins other esteemed partners, including the Integrated Justice Information Systems (IJIS) Institute, Global Consortium of Law Enforcement Training Executives, the University of Ottawa Professional Development Institute and leading international associations in advancing this critical dialogue.

“By helping host this summit, Rutgers University is reaffirming its commitment to ethical innovation, informed policy and evidence-based practices that support both resilience and reform in public institutions,” said Paul Goldenberg, chief advisor of policy and international policing at the Rutgers Miller Center.

The summit agenda includes international perspectives, keynote speakers and expert-led panels on the role of AI in policing, corrections, courts, prosecution, emergency communications and cybersecurity.

Sessions will address legislative frameworks, ethical considerations and the development of AI standards. A technology showcase will highlight real-world applications, while a concluding roundtable will bring together insights and next steps from throughout the public and private sectors.

Visit the event webpage for more information and to register for the summit.

###

About Rutgers University’s Miller Center on Policing and Community Resilience

The Miller Center on Policing and Community Resilience advances research, education and outreach focused on building stronger relationships between communities and public safety institutions. As part of Rutgers University’s dedication to service, innovation and justice, the Center leads initiatives that promote accountability, trust and strategic resilience in policing and community safety.

About the Global Consortium of Law Enforcement Training Executives

The Global Consortium of Law Enforcement Training Executives (GCLETE) brings together global police leaders to advance training and professional development in law enforcement.

About the Integrated Justice Information Systems (IJIS) Institute

The IJIS Institute is a nonprofit collaboration network that unites innovative thinkers from the public and private sectors, national practice associations, and academic/research organizations to address public sector mission information sharing, policy, and technology challenges.

About the Eagleton Institute of Politics

The Rutgers Miller Center on Policing and Community Resilience is a unit of the Eagleton Institute of Politics at Rutgers University-New Brunswick. The Eagleton Institute studies how American politics and government work and change, analyzes how the democracy might improve and promotes political participation and civic engagement. The Institute explores state and national politics through research, education and public service, linking the study of politics with its day-to-day practice.

As they face conflicting messages about AI, some advice for educators on how to use it responsibly

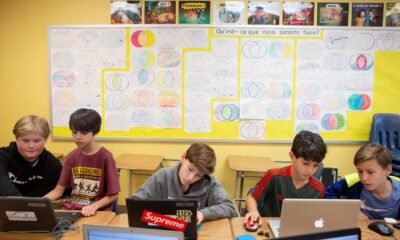

When it comes to the rapid integration of artificial intelligence into K-12 classrooms, educators are being pulled in two very different directions.

One prevailing media narrative stokes such profound fears about the emerging strengths of artificial intelligence that it could lead one to believe it will soon be “game over” for everything we know about good teaching. At the same time, a sweeping executive order from the White House and tech-forward education policymakers paint AI as “game on” for designing the educational system of the future.

I work closely with educators across the country, and as I’ve discussed AI with many of them this spring and summer, I’ve sensed a classic “approach-avoidance” dilemma — an emotional stalemate in which they’re encouraged to run toward AI’s exciting new capabilities while also made very aware of its risks.

Even as educators are optimistic about AI’s potential, they are cautious and sometimes resistant to it. These conflicting urges to approach and avoid can be paralyzing.

What should responsible educators do? As a learning scientist who has been involved in AI since the 1980s and who conducts nationally funded research on issues related to reading, math and science, I have some ideas.

First, it is essential to keep teaching students core subject matter — and to do that well. Research tells us that students cannot learn critical thinking or deep reasoning in the abstract. They have to reason and critique on the basis of deep understanding of meaningful, important content. Don’t be fooled, for example, by the notion that because AI can do math, we shouldn’t teach math anymore.

We teach students mathematics, reading, science, literature and all the core subjects not only so that they will be well equipped to get a job, but because these are among the greatest, most general and most enduring human accomplishments.

You should use AI when it deepens learning of the instructional core, but you should also ignore AI when it’s a distraction from that core.

Second, don’t limit your view of AI to a focus on either teacher productivity or student answer-getting.

Instead, focus on your school’s “portrait of a graduate” — highlighting skills like collaboration, communication and self-awareness as key attributes that we want to cultivate in students.

Much of what we know in the learning sciences can be brought to life when educators focus on those attributes, and AI holds tremendous potential to enrich those essential skills. Imagine using AI not to deliver ready-made answers, but to help students ask better, more meaningful questions — ones that are both intellectually rigorous and personally relevant.

AI can also support student teams by deepening their collaborative efforts — encouraging the active, social dimensions of learning. And rather than replacing human insight, AI can offer targeted feedback that fuels deeper problem-solving and reflection.

When used thoughtfully, AI becomes a catalyst — not a crutch — for developing the kinds of skills that matter most in today’s world.

In short, keep your focus on great teaching and learning. Ask yourself: How can AI help my students think more deeply, work together more effectively and stay more engaged in their learning?

Third, seek out AI tools and applications that are not just incremental improvements, but let you create teaching and learning opportunities that were impossible to deliver before. And at the same time, look for education technologies that are committed to managing risks around student privacy, inappropriate or wrong content and data security.

Such opportunities for a “responsible breakthrough” will be a bit harder to find in the chaotic marketplace of AI in education, but they are there and worth pursuing. Here’s a hint: They don’t look like popular chatbots, and they may arise not from the largest commercial vendors but from research projects and small startups.

For instance, some educators are exploring screen-free AI tools designed to support early readers in real time as they work through physical books of their choice. One such tool uses a hand-held pointer with a camera, a tiny computer and an audio speaker, not to provide answers but to guide students as they sound out words, build comprehension and engage more deeply with the text.

I am reminded: Strong content remains central to learning, and AI, when thoughtfully applied, can enhance — not replace — the interactions between young readers and meaningful texts without introducing new safety concerns.

Thus, thoughtful educators should continue to prioritize core proficiencies like reading, math, science and writing, and use AI only when it helps to develop the skills and abilities prioritized in their desired portrait of a graduate. By adopting ed-tech tools that are focused on novel learning experiences and committed to student safety, educators will lead us to a responsible future for AI in education.

Jeremy Roschelle is the executive director of Digital Promise, a global nonprofit working to expand opportunity for every learner.

Contact the opinion editor at opinion@hechingerreport.org.

This story about AI in the classroom was produced by The Hechinger Report, a nonprofit, independent news organization focused on inequality and innovation in education.

Now Artificial Intelligence (AI) for smarter prison surveillance in West Bengal – The CSR Journal

OpenAI to burn $115 billion through 2029, The Information reports

OpenAI CEO Sam Altman walks on the day of a meeting of the White House Task Force on Artificial Intelligence (AI) Education in the East Room at the White House in Washington, D.C., U.S., September 4, 2025.

Brian Snyder | Reuters

OpenAI has sharply raised its projected cash burn through 2029 to $115 billion as it ramps up spending to power the artificial intelligence behind its popular ChatGPT chatbot, The Information reported on Friday.

The new forecast is $80 billion higher than the company previously expected, the news outlet said, without citing a source for the report.

OpenAI, which has become one of the world’s biggest renters of cloud servers, projects it will burn more than $8 billion this year, some $1.5 billion higher than its projection from earlier this year, the report said.

The company did not immediately respond to a Reuters request for comment.

To control its soaring costs, OpenAI will seek to develop its own data center server chips and facilities to power its technology, The Information said.

OpenAI is set to produce its first artificial intelligence chip next year in partnership with U.S. semiconductor giant Broadcom, the Financial Times reported on Thursday, saying OpenAI plans to use the chip internally rather than make it available to customers.

The company deepened its tie-up with Oracle in July with a planned 4.5 gigawatts of data center capacity, building on its Stargate initiative, a project of up to $500 billion and 10 gigawatts that includes Japanese technology investor SoftBank. OpenAI has also added Alphabet’s Google Cloud among its suppliers for computing capacity.

The company’s cash burn will more than double to over $17 billion next year, $10 billion higher than OpenAI’s earlier projection, with a burn of $35 billion in 2027 and $45 billion in 2028, The Information said.
