AI Research

Office AI Science Team – Microsoft Research

The Office AI Science team is part of OPG. The team builds systems that are leveraged across M365 and especially within Word, Excel, and PowerPoint. The team’s recent projects have included: PPT Summarization, Audio Overviews (Podcast), SPOCK Eval, Data Pipeline, Natural Language to Office JS, and CUA.

PPT Summarization: The Office AI Science team built the first fine-tuned SLM within M365. The fine-tuned Phi-3 Vision SLM improved the p95 latency of the PPT Visual Summary feature from 13 seconds to 2 seconds while maintaining quality on par with GPT-4o-v. The optimization resulted in 75 times fewer GPUs being used compared to GPT-4o-v and almost 9 times as many PowerPoint users receiving a visual summary. The fine-tuned SLM also powers PPT Visual Q&A, making it both faster and cheaper. The team also introduced PPT Interactive Summary, which lets users drill into visual summaries in more detail. This led to a decline of more than 50% in thumbs-down ratings per 100k tries over 3 months, 30% interactivity (users clicking the chevron to go deeper), and a 17.6% increase in weekly return rate. The team is currently fine-tuning 4o-mini-vision with the goal of replacing the remaining non-English traffic to GPT-4o-v with this smaller model, and is evaluating Phi-4 Vision for English.

Audio Overviews: The team is building the Audio Overview Skill, which introduces a podcast-like experience for consuming documents and artifacts. The feature is currently in the dogfood phase for MSIT, with production rollout scheduled to begin May 7. Users will be able to generate Audio Overviews from App Chat entry points in Word Win32 and Web, Copilot Notebooks (including OneNote), and other apps such as Outlook Web, OneDrive Web, and ODSP Mobile. The latest human evaluation scores overall transcript quality for the single-file audio overview at 4.08/5.00, compared to 3.76/5.00 for NotebookLM. With automated evaluation, the team improved the overall score from an initial 4.09 to 4.65 using a two-step design that leverages GPT-4o and o3-mini. More details, including evaluation against multiple files for the Copilot Notebooks scenario and gains from moving to GPT-4.1, can be found here.

SPOCK (AugLoop Eval): In collaboration with AugLoop, the Office AI Science team developed several key features that enable agility in evaluating App Copilot scenario quality metrics. By the end of FY25Q3, 22 scenarios had been onboarded across Word, PPT, Office AI, and SharePoint, with Excel onboarding in progress. The platform currently runs 300 eval jobs and 30,000 tests reliably each day. Compared with manual runs, automated scenario evaluation has cut turnaround time from days to 2-4 hours. SPOCK now supports intent detection, Leo Metrics, BizChat 1K Query, and custom Python and TypeScript evaluators; model swap and FlexV3 eval are coming in Q4. Additionally, the v-team is automating the App Copilot Quality Dashboard (ÆVAL – Copilot Evaluation), which provides a comprehensive overview of the quality of App Copilot scenarios.

Data Pipeline: The team also created an online, self-serve, on-demand ADF pipeline for mining Office documents from the internet. It lets partners kick off large-scale data mining jobs for specific languages and document types, and it features custom metadata extractors for extracting task-dependent document representations. Leveraging Bing's pre-crawled 40B-URL RetroIndex makes document discovery fast and efficient. OAI Science and several partner teams (Word+Editor, PPT Science, Word Designer, Designer, MSAI) are already using the data for fine-tuning and test set creation.

Natural Language to Office JS: The Office AI Science team is working to fine-tune o* family models for common Office scenarios, such as inserting slides from another PowerPoint file, inserting headers and footers in Word, or creating and finding merged ranges in Excel.

CUA: The team also recently began exploring a Computer User Agent (CUA) centered on understanding user intent and adapting in real time. By leveraging plan assistance with the Office knowledge base, the team approximately doubled the task completion rate on OSWorld PPT scenarios. The team is now fine-tuning the CUA model to improve task completion in Office apps.

For more information, contact Amanda Gunnemo or Vishal Chowdhary.


2025 State of AI Cost Management Research Finds 85% of Companies Miss AI Forecasts by >10%

Despite rapid adoption, most enterprises lack visibility, forecasting accuracy, and margin control around AI investments. Hidden infrastructure costs are eroding enterprise profitability, according to newly published survey data.

AUSTIN, Texas, Sept. 10, 2025 /PRNewswire/ — As enterprises accelerate investments in AI infrastructure, a new report reveals a troubling financial reality: most organizations can’t forecast what they’re spending, or control how AI costs impact margins. According to the 2025 State of AI Cost Management, 80% of enterprises miss their AI infrastructure forecasts by more than 25%, and 84% report significant gross margin erosion tied to AI workloads.

The report, published by Benchmarkit in partnership with cost governance platform Mavvrik, reveals how AI adoption, across large language models (LLMs), GPU-based compute, and AI-native services, is outpacing cost governance. Most companies lack the visibility, attribution, and forecasting precision to understand where costs come from or how they affect margins.

“These numbers should rattle every finance leader. AI is no longer just experimental – it’s hitting gross margins, and most companies can’t even predict the impact,” said Ray Rike, CEO of Benchmarkit. “Without financial governance, you’re not scaling AI. You’re gambling with profitability.”

Top Findings from the 2025 State of AI Cost Management Report include:

AI costs are crushing enterprise margins

- 84% of companies see 6%+ gross margin erosion due to AI infrastructure costs

- 26% report margin impact of 16% or higher

The great AI repatriation has begun

- 67% are actively planning to repatriate AI workloads; another 19% are evaluating

- 61% already run hybrid AI infrastructure (public + private)

- Only 35% include on-prem AI costs in reporting, leaving major blind spots

Hidden cost surprises come from unexpected places

- Data platforms are the top source of unexpected AI spend (56%); LLMs rank 5th

- Network access costs are the second-largest cost surprise (52%)

AI forecasting is fundamentally broken

- 80% miss AI forecasts by 25%+

- 24% are off by 50% or more

- Only 15% forecast AI costs within a 10% margin of error

Visibility gaps are stalling governance

- Lack of visibility is the #1 challenge in managing AI infrastructure costs

- 94% say they track costs, but only 34% have mature cost management

- Companies charging for AI show 2x greater cost maturity in attribution and cost discipline

Access the full report: The full report details how automation, cost attribution methods, and cloud repatriation strategies factor into AI cost discipline. To view the analysis, please visit: https://www.mavvrik.ai/state-of-ai-cost-governance-report/

“AI is blowing up the assumptions baked into budgets. What used to be predictable, is now elastic and expensive,” said Sundeep Goel, CEO of Mavvrik. “This shift doesn’t just affect IT, it’s reshaping cost models, margin structures, and how companies scale. Enterprises are racing to build with AI, but when most can’t explain the bill, it’s no longer innovation, it’s risk.”

Why It Matters

AI isn’t just a technology challenge, it’s a financial one. From LLM APIs to GPU usage and data movement, infrastructure costs are scaling faster than most companies can track them. Without clear attribution across cloud and on-prem environments, leaders are making pricing, packaging, and investment decisions in the dark.

With AI spend becoming a significant line in COGS and gross margin targets under pressure, CFOs should be sounding the alarm. Yet most finance teams haven’t prioritized governance.

About the State of AI Cost Management

The 2025 State of AI Cost Management report is based on survey results from 372 enterprise organizations across diverse industries and revenue tiers. It measures cost governance maturity, spanning forecast accuracy, infrastructure mix (cloud vs. on-prem), attribution capability, and gross margin impact. https://www.mavvrik.ai/.

About Mavvrik

Mavvrik is the financial control center for modern IT. By embedding financial governance at the source of every cost signal, Mavvrik provides enterprises with complete visibility and control across cloud, AI, SaaS, and on-prem infrastructure. Built for CFOs, FinOps, and IT leaders, Mavvrik eliminates financial blind spots and transforms IT costs into strategic investments. With real-time cost tracking, automated chargebacks, and predictive budget controls, Mavvrik helps enterprises reduce waste, govern AI and hybrid cloud spend, and maintain financial precision at scale. Visit www.mavvrik.ai to learn more.

Media Contact:

Rick Medeiros

510-556-8517


SOURCE Mavvrik


I’ve been researching generative AI for years, and I’m tired of the consciousness debate. Humans barely understand our own

In 2022, a Google engineer claimed one of the company’s AIs was sentient. He lost his job, but the story stuck. For a brief moment, questions of machine consciousness spilled out of science fiction and into the headlines.

Now, in 2025, the debate has returned. As the release of GPT-5 was overshadowed by public nostalgia for GPT-4o, it was everyday users who began acting as if these systems were more than their makers intended. Into this moment stepped another tech giant: Mustafa Suleyman, CEO of Microsoft AI, declaring loud and clear on his blog that AI is not, and will never be, conscious.

At first glance, it sounds like common sense. Machines obviously aren’t conscious. Why not make that abundantly clear?

Because it isn’t true.

The hard fact is that we do not understand consciousness. Not in humans, not in animals, and not in machines. Theories abound, but the reality is that no one can explain exactly what consciousness is, let alone how to measure it. To state with certainty that AI can never be conscious is neither science nor caution. It's overconfidence, and in this case, a thinly veiled agenda.

If AI can’t ever be conscious, then companies building it have nothing to answer for. No unsettling questions. No ethics debates. No pressure. Surely, it would be nice if we could claim with full confidence that the consciousness question is not relevant to AI. But convenience doesn’t make it true.

What troubles me most is the tone. These pronouncements aren’t just misleading, they’re also infantilizing. As if the public can’t handle complexity. It is as though we must be shielded from ambiguity, spoon-fed tidy certainties instead of being trusted with reality.

Yes, people falling in love with and marrying chatbots or preferring AI companions to human ones is concerning. It points to a deeper pattern of loneliness and disconnection. This is a social and psychological challenge in its own right, and one we should take seriously. The rise of digital companions reveals how hungry people are for connection.

But the real issue isn’t that some people believe AI might be conscious. The deeper problem is our growing overreliance on technology in general—an addiction that stretches back long before the current debate on machine consciousness. From social media feeds to video games targeting children, technology has a long history of prioritizing engagement and fostering addiction, with no regard for the well-being of its users.

But technological dysfunction won’t be solved by feeding people false assurances about what machines can or cannot be. If anything, denial only obscures the urgency of confronting our dependence head-on.

We need to learn to live with uncertainty. Because uncertainty is the reality of this moment.

Suleyman did add an important caveat: our attention should be on the beings we already know are conscious, meaning humans, animals, and the living world. On this point, I couldn't agree more. But look at our record. Billions of animals endure extreme suffering in factory farms on a daily basis. Forests are flattened for profit, and numerous species have gone extinct. And in the age of AI, the use case most celebrated by investors is replacing human labor.

The pattern is clear. Again and again, we minimize the experiences of those who aren’t like us, those we would benefit from exploiting. We claim animals don’t suffer all that much or simply turn a blind eye. We treat nature as expendable. We routinely devalue people whose exploitation benefits our economic system. Now, we rush to declare that AI will never be conscious. Same playbook, new page.

So no, we shouldn’t blindly trust the builders of AI to tell us what is and isn’t conscious, any more than we should trust meat factories to tell us about the experience of cows.

The reality is messier. AI may never be conscious. It may surprise us. We cannot say for certain. And we might not be able to tell whether it is conscious even if it does happen. And that is the point.

For a long time, I avoided this topic. Consciousness felt too slippery, too strange. But I’ve come to see that acknowledging our uncertainty is not a weakness. It is a strength.

Because in an era of false certainties, honesty about the unknown may be the most radical truth we have.

The opinions expressed in Fortune.com commentary pieces are solely the views of their authors and do not necessarily reflect the opinions and beliefs of Fortune.


FSU researchers receive $2.3 million National Science Foundation grant to strengthen wildfire management in hurricane-prone areas

Florida State University researchers have received a $2.3 million National Science Foundation (NSF) grant to develop artificial intelligence tools that will help manage wildfires fueled by hurricanes in the Florida Panhandle.

The four-year project will be led by Yushun Dong, an assistant professor of computer science, and is the largest research award ever for FSU’s Department of Computer Science. Dong and his interdisciplinary team will focus on wildfires in the wildland-urban interface, where forests such as the Apalachicola National Forest meet homes, roads and other infrastructure.

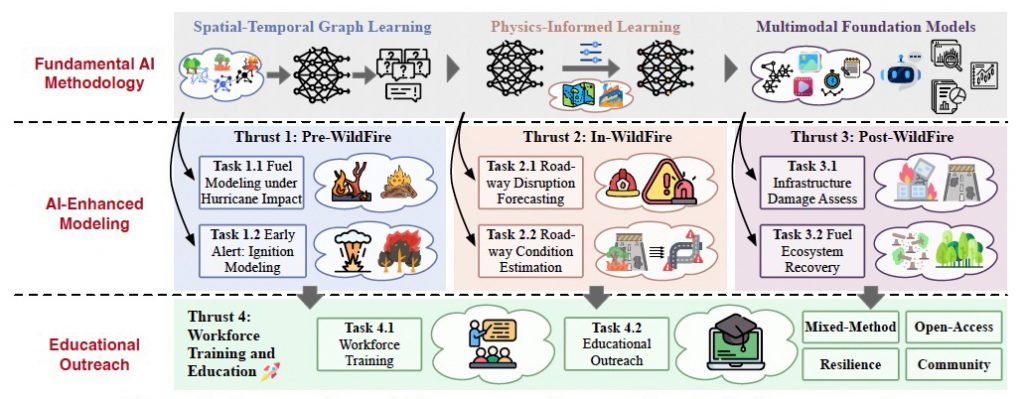

Dong’s project, “FIRE: An Integrated AI System Tackling the Full Lifecycle of Wildfires in Hurricane-Prone Regions,” will bring together computer scientists, fire researchers, engineers and educators to study how hurricanes change wildfire behavior and to build AI systems that can forecast ignition, predict roadway disruptions, and assess potential damage.

“The modern practice of prescribed burns began over 60 years ago, which was a huge leap in working with nature to help manage an ecosystem,” said Dong, who joined FSU’s faculty last year and established the Responsible AI Lab at FSU after earning his doctorate from the University of Virginia. “Now, we’re positioned to make another leap: we’re able to use powerful AI technology to transform wildfire risk management with tools such as ignition forecasting, roadway disruption prediction, condition estimations, damage assessments and more.”

The project is funded as part of an NSF program, Fire Science Innovations through Research and Education, or FIRE, which was established last year and funds research and education enabling large-scale, interdisciplinary breakthroughs that realign our relationship with wildland fire and its connected variables.

Two of the four projects funded so far by the competitive program are led by FSU researchers. Neda Yaghoobian, associate professor in the Department of Mechanical and Aerospace Engineering at the FAMU-FSU College of Engineering, also received funding for a project that will analyze unresolved canopy dynamics contributing to wildfires.

“This grant represents the department’s biggest research award to date and cements our leadership in applying cutting-edge AI to urgent, real-world problems in our region,” said Weikuan Yu, Department of Computer Science chair. “The funding enables the development of a holistic AI platform addressing Florida’s hurricane and wildfire challenges while advancing cutting-edge AI research. Additionally, the grant includes educational and workforce development initiatives in AI and disaster resilience, positioning the department as a leader in training the next generation of scientists working at the intersection of AI and wildfire research.”

WHY IT MATTERS

Fires, especially low-intensity natural wildfires and prescribed burns, can play a vital role in regulating certain forests, grasslands and other fire-adapted ecosystems. They decrease the risk and severity of large, destructive wildfires while supporting soil processes and, in many cases, limiting pest and disease outbreaks.

By clearing fallen leaves that act as hazardous fuel loads, fires lower forest density and recycle nutrients through the ecosystem. But when downed trees pile up, as has happened after recurrent hurricanes in the Florida Panhandle, these fires can exhibit complex dynamics that threaten natural landscapes as well as built infrastructure, including homes and roadways. Understanding this hurricane-wildfire connection is critical for planning evacuations, protecting roads and safeguarding homes and lives.

“I became passionate about applying my research, which achieves responsible AI that directly contributes to critical AI infrastructures, to hurricane-related phenomena after experiencing my first hurricane living in Tallahassee,” Dong said. “I want to use AI techniques to help Florida Panhandle residents better understand and prepare for extreme events in this ecosystem with its unique hurricane-fire coupling dynamics.”

INTERDISCIPLINARY COLLABORATION

Eren Ozguven, associate professor in the FAMU-FSU College of Engineering Department of Civil and Environmental Engineering and Resilient Infrastructure and Disaster Response Center director, is the co-principal investigator on this project, and additional contributors include James Reynolds, co-director of STEM outreach for FSU’s Learning Systems Institute, and Jie Sun, a postdoctoral researcher in the Department of Earth, Ocean and Atmospheric Science.

“Yushun’s project stands out for its ambition, insight, and integrative approach,” Yu said. “It zeros in on the unique challenges of Florida’s landscape where hurricanes and wildfires intersect in the wildland-urban interface of the Panhandle. By focusing on hurricane-fire coupling dynamics and working closely with local stakeholders, his project ensures that scientific innovation translates into practical, community-centered solutions. His integrated approach brings the benefit of cutting-edge AI advances directly to major real-world applications, creating a wonderful research lifecycle that’s exceptionally rare in our field.”

To learn about research conducted in the Department of Computer Science, visit cs.fsu.edu.
