AI Insights

‘Woke’ AI fears are the latest threat to free speech

Politicians seem increasingly intent on modeling artificial intelligence (AI) tools in their own likenesses—and their plans could sneakily undermine free speech.

For Republicans, the ruse involves fighting “woke” AI. The Trump administration is reportedly readying an executive order aimed at preventing AI chatbots from being too politically correct.

You are reading Sex & Tech, from Elizabeth Nolan Brown.

Conservatives complaining about AI systems being too progressive is nothing new.

And, sure, the way some generative AI tools have been trained and programmed has led to some silly—and reality-distorting—outcomes. See, for instance, the great black pope and Asian Nazi debacle of 2024 from Google Gemini.

Of course, we also have an AI system, Grok, that has called itself MechaHitler and endorsed anti-Jewish conspiracy theories.

That’s the free market, baby.

And, all glibness aside, it’s way better than the alternative.

Both Gemini and Grok have been retooled to avoid similar snafus going forward. But the fact remains that different tech companies have different standards and safeguards baked into their AI systems, and these may lead these systems to yield different results.

Unconscious biases baked into AI will continue to produce some biased information. But the trick to combating this isn’t some sort of national anti–woke AI policy but teaching AI literacy—ensuring that everyone knows that the version of reality reflected by AI tools may be every bit as biased as narratives produced by humans.

And, as the market for AI tools continues to grow, consumers can also assert preferences as they always do in marketplaces: by choosing the products that they think are best. For some, this might mean that more biased systems are actually more appealing; for others, systems that produce the most neutral results will be the most useful.

Whatever problems might result from private choices here, I’m way more worried about the deleterious effects of politicians trying to combat “woke” or “discriminatory” AI.

The forthcoming Trump executive order “would dictate that AI companies getting federal contracts be politically neutral and unbiased in their AI models, an effort to combat what administration officials see as liberal bias in some models,” according to The Wall Street Journal.

That might seem unobjectionable at first blush. But while we might wish AI models be “neutral and unbiased”—just as we might wish the same about TV news programs, or magazine articles, or social media moderation—the fact is that private companies, be they television networks or publishers or tech companies, have a right to make their products as biased as they want. It’s up to consumers to decide if they prefer neutral models or not.

Granted, the upcoming order is not expected to try and mandate such a requirement across the board but to stipulate that this is mandatory for AI companies getting federal contracts. That seems fair enough in theory, but in practice, not likely.

Look at the recent letters sent to tech companies by the attorney general of Missouri, who argues that AI tools are biased because they do not list Trump as the best president on antisemitism issues.

Look at the past decade of battles over social media moderation, during which the left and the right have both cried “bias” over decisions that don’t favor their preferred views.

Look at the way every recent presidential administration has tried to tie education funding to supporting or rejecting certain ideologies surrounding sex, gender, race, etc.

“Because nearly all major tech companies are vying to have their AI tools used by the federal government, the order could have far-reaching impacts and force developers to be extremely careful about how their models are developed,” the Journal suggests.

To put it more bluntly, tech companies could find themselves having to retool AI models to fit the sensibilities and biases of Trump—or whoever is in power—in order to get lucrative contracts.

Sure, principled companies could opt out of trying for government contracts. But that just means that whoever builds the most sycophantic AI chatbots will be the one powering the federal government. And those contracts could also mean that the most politically biased AI tools wind up being the most profitable and the most able to proliferate and expand.

Like “woke AI,” the specter of “AI discrimination” has become a rallying cry for authorities looking to control AI outputs.

And, again, we’ve got something that doesn’t sound so bad in theory. Who would want AI to discriminate?

But, in practice, new laws intended to prevent discrimination in artificial intelligence outputs could have negative results, as Greg Lukianoff, president and CEO of the Foundation for Individual Rights and Expression (FIRE), explains:

These laws — already passed in states like Texas and Colorado — require AI developers to make sure their models don’t produce “discriminatory” outputs. And of course, superficially, this sounds like a noble endeavor. After all, who wants discrimination? The problem, however, is that while invidious discriminatory action in, say, loan approval should be condemned, discriminatory knowledge is an idea that is rightfully foreign. In fact, it should freak us out.

[…] Rather than calling for the arrest of a Klansman who engages in hateful crimes, these regulations say you need to burn down the library where he supposedly learned his hateful ideas. Not even just the books he read, mind you, but the library itself, which is full of other knowledge that would now be restricted for everyone else.

Perhaps more destructive than any government actions stemming from such laws is the way that they will influence how AI models are trained.

The very act of trying to depoliticize or neutralize AI, when done by politicians, could undermine AI’s potential for neutral and nonpolitical knowledge dissemination. People are not going to trust tools that they know are being intimately shaped by particular political administrations. And they’re not going to trust tools that seem like they’ve been trained to disregard reality when it isn’t pretty, doesn’t flatter people in power, or doesn’t align with certain social goals.

“This is a matter of serious epistemic consequence,” Lukianoff writes. “If knowledge is riddled with distortions or omissions as a result of political considerations, nobody will trust it.”

“In theory, these laws prohibit AI systems from engaging in algorithmic discrimination—or from treating different groups unfairly,” note Lukianoff and Adam Goldstein at National Review. “In effect, however, AI developers and deployers will now have to anticipate every conceivable disparate impact their systems might generate and scrub their tools accordingly. That could mean that developers will have to train their models to avoid uncomfortable truths and to ensure that their every answer sounds like it was created with HR and legal counsel looking over their shoulder, softening and obfuscating outputs to avoid anything potentially hurtful or actionable. In short, we will be (expensively) teaching machines to lie to us when the truth might be upsetting.”

Whether it’s trying to make ChatGPT a safe space for social justice goals or for Trump’s ego, the fight to let government authorities define AI outputs could have disastrous results.

At the very least, it’s going to lead to many years of fighting over AI bias, in the same way that we spent the past decade arguing over alleged biases in social media moderation and “censorship” by social media companies. And after all that, does anyone think that social media on average is any better—or that it is producing any better outcomes—in 2025 than it did a decade ago?

Politicizing online content moderation has arguably made things worse and, if nothing else, been so very tedious. It looks like we can expect a rehash of all these arguments where we just replace “social media” and “search engines” with “AI.”

Tennessee cannot ban people from “recruiting” abortion patients who are minors, a federal court says. The ruling, from the U.S. District Court for the Middle District of Tennessee, comes as part of a challenge to the state’s “abortion trafficking” law.

The recruitment bit “prohibits speech encouraging lawful abortion while allowing speech discouraging lawful abortion,” per the court’s opinion. “That is impermissible viewpoint discrimination, which the First Amendment rarely tolerates—and does not tolerate here.”

“Tennessee may criminalize speech recruiting a minor to procure an abortion in Tennessee,” wrote U.S. District Judge Julia Gibbons. “The state may not, however, criminalize speech recruiting a minor to procure a legal abortion in another state.”

Mississippi can start enforcing a requirement that social media platforms verify user ages and block anyone under age 18 who doesn’t have parental consent to participate, the U.S. Court of Appeals for the 5th Circuit has ruled.

The tech industry trade group NetChoice has filed an emergency appeal to the U.S. Supreme Court to reinstate the preliminary injunction against enforcing the law.

“Just as the government can’t force you to provide identification to read a newspaper, the same holds true when that news is available online,” said Paul Taske, co-director of the NetChoice Litigation Center, in a statement. “Courts across the country agree with us: NetChoice has successfully blocked similar, unconstitutional laws in other states. We are confident the Supreme Court will agree, and we look forward to fighting to keep the internet safe and free from government censorship.”

• Are men too “anxious about desire”? Is “heteropessimism” a useful concept? Should dating disappointment be seen as something deeper, or is that just a defense? In a much-talked-about new New York Times essay, Jean Garnett showcases the seductive appeal of blaming one’s personal romantic woes on some sort of larger, more political forces and the perils of this approach.

• “House Speaker Mike Johnson is rebuffing pressure to act on the investigation into Jeffrey Epstein, instead sending members home early for a month-long break from Washington after the week’s legislative agenda was upended by Republican members who are clamoring for a vote,” the Associated Press reports.

• X can tell users when the government wants their data. “X Corp. convinced the DC Circuit to vacate a broad order forbidding disclosure about law enforcement’s subpoenas for social media account information, with the panel finding Friday a lower court failed to sufficiently review the potential harm of immediate disclosure,” notes Bloomberg Law.

• A new, bipartisan bill would allow people to sue tech companies over training artificial intelligence systems using their copyrighted works without express consent.

• A case involving Meta and menstrual app data got underway this week. In a class-action suit, the plaintiffs say that period tracking app Flo shared their data with Meta and other companies without their permission. “Brenda R. Sharton of Dechert, the lead attorney representing Flo Health, said evidence will show that Flo never shared plaintiffs’ health information, that plaintiffs agreed that Flo could share data to maintain and improve the app’s performance, and that Flo never sold data or allowed anybody to use health information for ads,” per Courthouse News Service.

• YouTube is now Netflix’s biggest competitor. “The rivalry signals how the streaming wars have entered a new phase,” television reporter John Koblin suggests. “Their strategies for success are very different, but, in ways large and small, it’s becoming clear that they are now competing head-on.”

• European Union publishers are suing Google over an alleged antitrust law violation. Their beef is with Google’s AI overviews, which they’re worried could cause “irreparable harm.” They complain to the European Commission that “publishers using Google Search do not have the option to opt out from their material being ingested for Google’s AI large language model training and/or from being crawled for summaries, without losing their ability to appear in Google’s general search results page.”

91% of Jensen Huang’s $4.3 Billion Stock Portfolio at Nvidia Is Invested in Just 1 Artificial Intelligence (AI) Infrastructure Stock

Key Points

- Most stocks that Nvidia and CEO Jensen Huang invest in tend to be strategic partners or companies that can expand the AI ecosystem.

- For the AI sector to thrive, there is going to need to be a lot of supporting data centers and other AI infrastructure.

- One stock that Nvidia is heavily invested in also happens to be one of its customers, a first-mover in the AI-as-a-service space.

Nvidia (NASDAQ: NVDA), the largest company in the world by market cap, is widely known as the artificial intelligence (AI) chip king and the main pick-and-shovel play powering the AI revolution. But a company this large and this profitable has all sorts of operations and divisions beyond its main business.

For instance, Nvidia, which is run by CEO Jensen Huang, actually invests its own capital in publicly traded stocks, most of which seem to have to do with the company itself or the broader AI ecosystem. At the end of the second quarter, Nvidia owned six stocks collectively valued at about $4.3 billion. However, 91% of that amount is invested in just one AI infrastructure stock.

A unique relationship

Nvidia has long had a relationship with AI data center company CoreWeave (NASDAQ: CRWV), having been a key supplier of hardware that drives the company’s business. CoreWeave builds data centers specifically tailored to meet the needs of companies looking to run AI applications.

These data centers are also equipped with hardware from Nvidia, including the company’s latest graphics processing units (GPUs), which help to train large language models. Clients can essentially rent the necessary hardware to run AI applications from CoreWeave, which saves them the trouble of having to build out and run their own infrastructure. CoreWeave’s largest customer by far is Microsoft, which makes up roughly 60% of the company’s revenue, but CoreWeave has also forged long-term deals with OpenAI and IBM.

Nvidia and CoreWeave’s partnership dates back to at least 2020 or 2021, and Nvidia also invested in the company’s initial public offering earlier this year. Wall Street analysts say it’s unusual to see a large supplier participate in a customer’s IPO. But Nvidia may see it as a key way to bolster the AI sector because meeting future AI demand will require a lot of energy and infrastructure.

CoreWeave is certainly seeing demand. On the company’s second-quarter earnings call, management said its contract backlog has grown to over $30 billion and includes previously discussed contracts with OpenAI, as well as other new potential deals with a range of different clients from start-ups to larger companies. Customers have also been increasing the length of their contracts with CoreWeave.

“In short, AI applications are beginning to permeate all areas of the economy, both through start-ups and enterprise, and demand for our cloud AI services is aggressively growing. Our cloud portfolio is critical to CoreWeave’s ability to meet this growing demand,” CoreWeave’s CEO Michael Intrator said on the company’s earnings call.

Is CoreWeave a buy?

Due to the demand CoreWeave is seeing from the market, the company has been aggressively expanding its data centers to increase its total capacity. To do this, CoreWeave has taken on significant debt, which the capital markets seem more than willing to fund.

At the end of the second quarter, current debt (due within 12 months) grew to about $3.6 billion, up about $1.2 billion year over year. Long-term debt had grown to about $7.4 billion, up roughly $2 billion year over year. That has hit the income statement hard, with interest expense through the first six months of 2025 up to over $530 million, up from roughly $107 million during the same period in 2024.
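As a rough check on the debt figures above, the year-ago balances and the growth in interest expense can be backed out with simple arithmetic. The sketch below uses only the rounded numbers quoted in this article; the variable and function names are illustrative, and the results are approximations rather than reported figures.

```python
# Rounded figures quoted in the article, in billions of dollars.
current_debt_now = 3.6        # due within 12 months, end of Q2 2025
current_debt_increase = 1.2   # year-over-year change
long_term_debt_now = 7.4
long_term_debt_increase = 2.0

# Interest expense for the first six months, in millions of dollars.
# The article says "over $530 million", so 530 is a lower bound.
interest_h1_2025 = 530
interest_h1_2024 = 107


def prior_balance(now: float, increase: float) -> float:
    """Back out the year-ago balance from today's balance and the change."""
    return now - increase


prior_current = prior_balance(current_debt_now, current_debt_increase)
prior_long_term = prior_balance(long_term_debt_now, long_term_debt_increase)
interest_multiple = interest_h1_2025 / interest_h1_2024

print(f"Implied year-ago current debt: about ${prior_current:.1f}B")
print(f"Implied year-ago long-term debt: about ${prior_long_term:.1f}B")
print(f"Interest expense multiple: about {interest_multiple:.1f}x")
```

On these rounded inputs, the implied year-ago balances are roughly $2.4 billion and $5.4 billion, and interest expense is about five times what it was a year earlier.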

CoreWeave reported a loss of $1.73 per share in the first six months of the year, better than the $2.23 loss reported during the same period in 2024. Still, investors have expressed concern about growing competition in the AI-as-a-service space. They also question whether or not CoreWeave has a real moat, considering its customers and suppliers. For instance, while CoreWeave has a strong partnership with Nvidia, that does not prevent others in the space from forging partnerships. Additionally, CoreWeave’s main customers, like Microsoft, could choose to build their own data centers and infrastructure in-house.

CoreWeave also trades at over a $47 billion market cap but is still losing significant money. The valuation also means the company is trading at 10 times forward sales. Now, in fairness, CoreWeave has grown revenue through the first half of the year by 276% year over year. It all boils down to whether the company can maintain its first-mover advantage and whether the AI addressable market can keep growing like it has been.
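The valuation figures above imply a forward sales number that the article does not state directly. The short sketch below backs it out, assuming the rounded $47 billion market cap and the 10x multiple are taken at face value, and also shows what 276% growth means as a revenue multiple. All names are illustrative.

```python
# Rounded figures quoted in the article.
market_cap_billion = 47.0       # "over a $47 billion market cap"
forward_sales_multiple = 10.0   # "10 times forward sales"
revenue_growth_pct = 276.0      # first-half revenue growth, year over year

# Price-to-sales = market cap / sales, so sales = market cap / multiple.
implied_forward_sales = market_cap_billion / forward_sales_multiple

# Growth of g% means current revenue is (1 + g/100) times the prior figure.
revenue_multiple = 1 + revenue_growth_pct / 100

print(f"Implied forward sales: about ${implied_forward_sales:.1f}B")
print(f"Revenue vs. a year earlier: about {revenue_multiple:.2f}x")
```

In other words, the multiple implies roughly $4.7 billion in expected sales, and 276% growth means first-half revenue was about 3.76 times the year-ago figure.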

I think investors can buy the stock for the more speculative part of their portfolio. The high dependence on industry growth and reliance on debt prevent me from recommending a large position at this time.

Bram Berkowitz has no position in any of the stocks mentioned. The Motley Fool has positions in and recommends International Business Machines, Microsoft, and Nvidia. The Motley Fool recommends the following options: long January 2026 $395 calls on Microsoft and short January 2026 $405 calls on Microsoft. The Motley Fool has a disclosure policy.

Disclaimer: For information purposes only. Past performance is not indicative of future results.

Up 300%, This Artificial Intelligence (AI) Upstart Has Room to Soar

Get to know an artificial intelligence stock that other investors are just catching on to.

One stock that is not on the radar of most mainstream investors has quietly risen by more than 300% since April 2025, moving from an intraday low of $6.27 per share all the way to an intraday high of $26.43 per share in late August. Previously, the stock had peaked at over $50 per share in 2024. What changed? It became an artificial intelligence (AI) stock after suffering in the downturn of the electric vehicle market. Most investors probably haven’t caught on yet, and, until recently, the company may not have recognized the AI opportunity it had, either.

I’m referring to Aehr Test Systems (AEHR), and I’ll explain why this company is crucial to the AI and data center industries. There is still a significant opportunity for investors to capitalize on, even if they missed the bottom.
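The "more than 300%" figure follows directly from the intraday low and high quoted above. A minimal sketch of that arithmetic is below. The helper name is illustrative, and the 2024 peak is entered as $50 only because the article says "over $50", so that comparison is approximate.

```python
def percent_change(start: float, end: float) -> float:
    """Return the percentage change from start to end."""
    return (end - start) / start * 100


# Intraday prices quoted in the article, in dollars per share.
april_low = 6.27
august_high = 26.43
approx_2024_peak = 50.0  # assumption: the article only says "over $50"

gain = percent_change(april_low, august_high)
vs_peak = percent_change(approx_2024_peak, august_high)

print(f"Gain from April low to August high: {gain:.1f}%")
print(f"August high relative to 2024 peak: {vs_peak:.1f}%")
```

The move from low to high works out to a gain of a little over 320%, while the August high still sits roughly 47% below a $50 peak.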

Why is Aehr critical to AI?

Here is the 30,000-foot view. When companies, notably hyperscalers, build massive data centers full of tens of millions, and sometimes hundreds of millions, of semiconductors (chips), they must ensure that they are reliable. High failure rates are extremely costly in terms of remediation, labor, downtime, and replacements. If the company selling the chips has a high failure rate, its competitors can gain traction. Aehr Test Systems provides the necessary reliability testing systems.

Their importance cannot be overstated. The latest chips are stackable (multiple layers of chips forming one unit), which allows for exponentially more processing power. However, there is a catch. Many times, they are a “single point of failure.” In other words, if one chip in the stack fails, the entire stack fails. The importance of reliability testing has increased by an order of magnitude as a result. And now Aehr is the hot name that could become a stock market darling again.

Is Aehr stock a buy now?

You have probably heard about the massive data centers that the “hyperscalers,” companies like Meta Platforms, Amazon, Elon Musk’s xAI, and other tech giants, are building across the country and the world. In fact, trying to keep up with all of the announcements of new projects would make your head spin. In many cases, the data center campus spans more than a square mile and contains hundreds of thousands of chips. Elon Musk’s xAI project, dubbed “Colossus,” is said to require over a million GPUs in the end.

The number of hyperscale data centers is soaring with no end in sight, according to data from Statista.

This number increased to more than 1,100 at the end of 2024, nearly doubling over the past five years. The demand is primarily driven by artificial intelligence, which is why Aehr is now an “AI stock” — and the reason its share price took off and could continue higher over the long term.

Aehr still faces serious challenges. Its revenue fell from $66 million in fiscal 2024 to $59 million in fiscal 2025. It slipped from an operating profit of $10 million to a loss of $6 million over that period as the company undertook the challenging task of refocusing its business. However, investors who dig deeper see a very encouraging sign. The company’s backlog (orders that have been placed, but not yet fulfilled) jumped to $15 million from $7 million. Aehr also announced several orders received from major hyperscalers over the last couple of months.
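To put the revenue decline and the backlog jump on the same footing, it helps to express both as percentage changes. The sketch below uses only the rounded figures from the paragraph above; the names are illustrative.

```python
def percent_change(start: float, end: float) -> float:
    """Return the percentage change from start to end."""
    return (end - start) / start * 100


# Rounded figures quoted in the article, in millions of dollars.
revenue_fy2024 = 66.0
revenue_fy2025 = 59.0
operating_result_fy2024 = 10.0   # operating profit
operating_result_fy2025 = -6.0   # operating loss
backlog_prior = 7.0
backlog_now = 15.0

revenue_change = percent_change(revenue_fy2024, revenue_fy2025)
backlog_change = percent_change(backlog_prior, backlog_now)
operating_swing = operating_result_fy2025 - operating_result_fy2024

print(f"Revenue change: {revenue_change:.1f}%")
print(f"Backlog change: {backlog_change:.1f}%")
print(f"Operating result swing: {operating_swing:.0f} million dollars")
```

On these numbers, revenue fell by about 11%, operating results swung by $16 million, and the backlog more than doubled, rising roughly 114%.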

It’s challenging to value Aehr stock at this time. The company is in a transition period, and while the AI market looks hugely promising, it is still a work in progress. In its heyday, the stock’s valuation peaked at 31 times sales, and as recently as August 2023 it traded for 24 times sales compared to 12 times sales today. The AI market could be a gold mine for Aehr, and Aehr stock looks like a terrific buy for investors.

Bradley Guichard has positions in Aehr Test Systems and Amazon. The Motley Fool has positions in and recommends Amazon and Meta Platforms. The Motley Fool has a disclosure policy.

Augment AI startup secures $85M for logistics teammate

Given these persistent challenges, could artificial intelligence (AI) be the answer? By automating repetitive tasks—such as invoice validation, exception alerts, and document processing—AI has the potential to streamline workflows, reduce errors, and free up teams to focus on strategic decision-making.

A recent report by research firm Deep Analysis, sponsored by document automation specialist Hyperscience, sheds light on the current state of AI readiness in T&L back-office functions. Titled “Market Momentum Index: AI Readiness in Transportation and Logistics Back-Office Operations,” the report drew on findings from a survey of T&L professionals to reveal both the challenges and opportunities for automation and AI adoption. (For more information about the research and methodology, see sidebar, “About the research.”)

This article summarizes some of the key findings and offers some actionable recommendations for supply chain professionals looking to harness the power of AI to drive efficiency and competitiveness.

THE CRITICAL ROLE OF THE BACK OFFICE

Back-office operations are the administrative core of supply chain processes, encompassing tasks such as order processing, inventory management, billing, compliance documentation, and communications with vendors and carriers. While these tasks are not visible to end consumers, they are vital to maintaining the smooth flow of goods and ensuring on-time deliveries. Furthermore, these operations are typically complex, involving numerous transactions and partners, and, as a result, are often plagued by fragmented processes, duplicated efforts, and misaligned data. Yet, most transportation and logistics companies still depend on manual processes and paper-based systems for their back-office operations, which often lead to errors, delays, and inefficiencies.

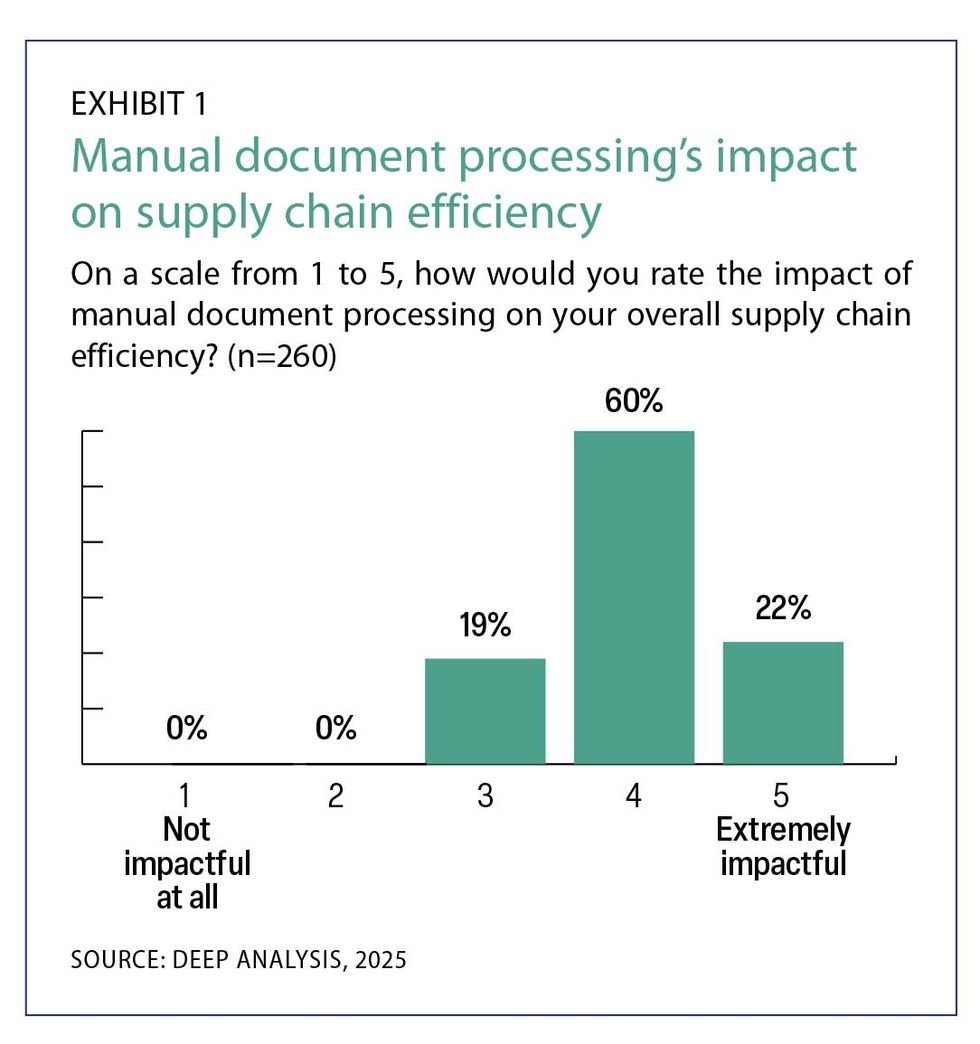

For example, the industry relies heavily on documents such as invoices, bills of lading, shipment tracking forms, and compliance records. Many organizations, however, use manual or semi-automated processes to manage these documents. Survey respondents indicated that the manual handling of supply chain documentation is a significant challenge that can have a large impact on overall supply chain efficiency (see Exhibit 1). For instance, missing or incorrect paperwork can cause customs delays, incur fines, or disrupt critical supply chain timelines. Additionally, document handling involves multiple touchpoints, which increases the risk of errors and operational delays. Furthermore, the lack of standardized document formats complicates data sharing and collaboration.

The survey found that many companies have implemented digital tools such as enterprise resource planning (ERP) systems, supply chain management (SCM) systems, transportation management systems (TMS), and warehouse management systems (WMS). These systems were initially marketed as comprehensive solutions capable of automating business processes, improving efficiency, and providing real-time data visibility. However, their effectiveness has been limited by several key challenges. First, high implementation costs and complex integrations often lead to partial deployments, where critical functions remain unautomated. Second, rigid system architectures struggle to adapt to dynamic business needs, forcing employees to rely on manual workarounds—particularly in Excel—to fill functionality gaps. This reliance on spreadsheets introduces high data-entry error rates, inconsistent reporting, and limited data visualization capabilities. Additionally, ineffective user training and resistance to change further hinder adoption, leaving many organizations unable to fully leverage these systems. As a result, despite their potential, ERP, WMS, and similar tools frequently fall short of delivering the promised operational transformation.

THE GROWING INTEREST IN AI

Given the lack of success with other technology tools, there is a perception that supply chain organizations in general—and T&L firms in particular—might be resistant to or uninterested in AI. So it came as a bit of a surprise that the survey results indicated a growing interest in automation and AI within the T&L sector. Over 70% of respondents expressed a willingness to invest in AI-optimized systems, recognizing the potential for these technologies to transform back-office operations.

Of those respondents whose organizations were already using AI, 98% said they view the technology as useful, important, or vital. As Exhibit 2 shows, these respondents are currently employing AI to accomplish a wide range of goals. The report highlights several key areas where AI adds value, such as:

1. Improved decision-making (31%): AI can analyze large volumes of complex data—such as real-time traffic patterns, weather conditions, shipment tracking, and historical trends—to optimize supply chain decisions.

2. Error reduction (28%): For back-office tasks such as data entry, invoice processing, and document management, AI can automate repetitive processes, drastically reducing human error.

3. Enhanced data quality (37%): AI improves data quality by ensuring consistency, standardization, and accuracy, making the data more reliable for decision-making purposes.

Going forward, automation and AI have the potential to reshape the industry, enabling companies to reimagine workflows, prioritize sustainability, and enhance collaboration.

BARRIERS TO AI ADOPTION

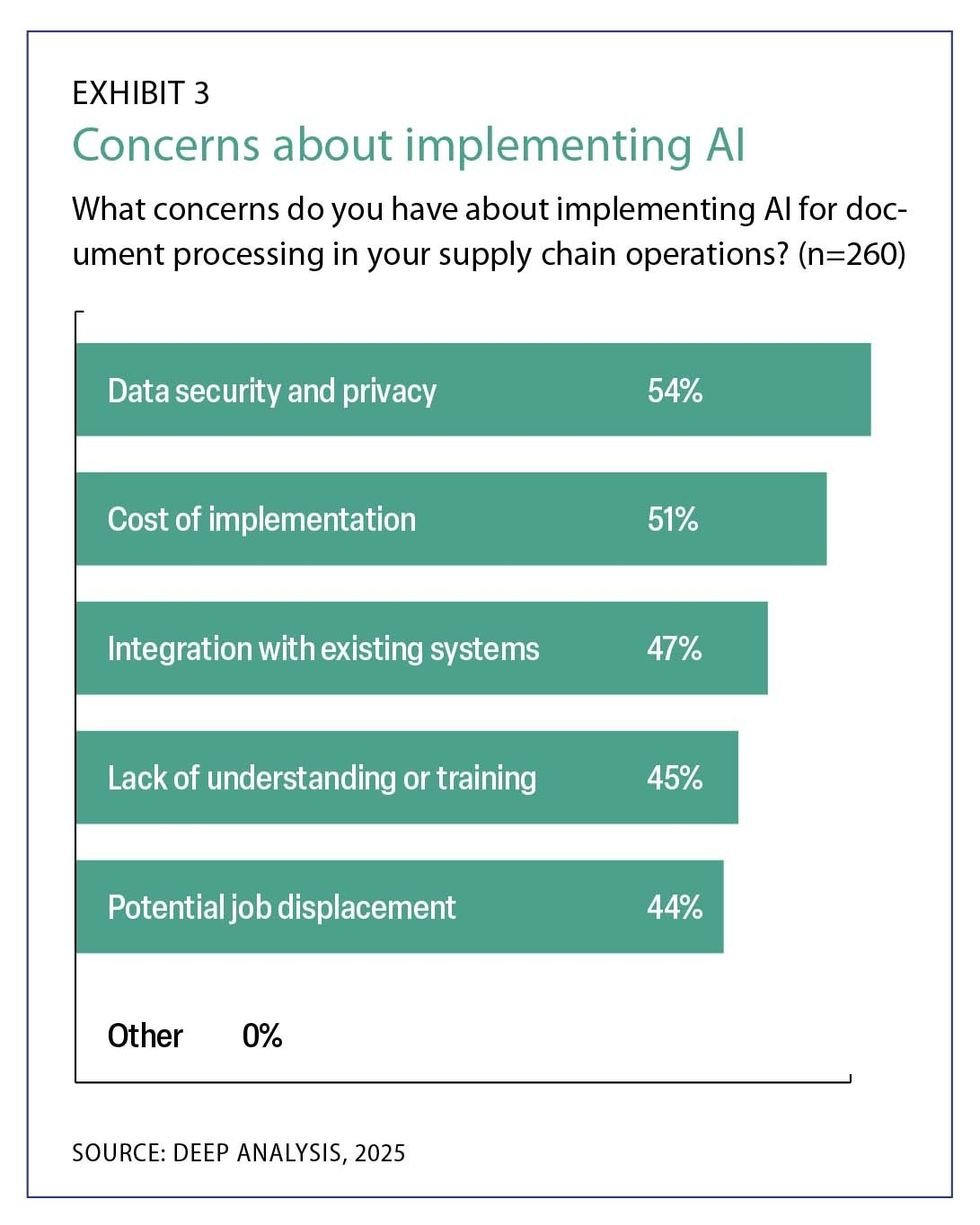

Despite the clear potential of AI, significant barriers to adoption remain. The survey respondents reported several concerns about implementing AI for back-office processes (see Exhibit 3). The most common concerns include:

1. Data security and privacy (54%): Transportation and logistics companies handle a large volume of sensitive data, including customer information, shipment details, and payment records. Ensuring robust security protocols and compliance with privacy regulations is critical for any AI implementation.

2. Cost of implementation (51%): AI technologies require considerable upfront investment in both hardware and software, and many smaller logistics firms or those with tight margins may find it difficult to justify this expense.

3. Integration with existing systems (47%): Many logistics companies still rely on traditional TMS and ERP systems that were not built with AI in mind, requiring substantial investment in infrastructure upgrades.

ESSENTIAL STEPS

No matter how powerful a technology is, its effectiveness in the real world of business is only as good as the planning and execution of a transformation project. As companies look to implement AI, they must make sure to take essential steps such as standardizing data formats, investing in workforce training, and fostering industrywide collaboration. The report concludes with several recommendations for companies looking to adopt AI and automation in their back-office operations, including:

1. Invest in AI training: Providing employees with training on AI tools and systems will help bridge the knowledge gap and increase adoption rates.

2. Focus on incremental implementation: Starting with pilot projects allows companies to assess the technology’s return on investment (ROI) and build confidence in AI technologies before large-scale deployment.

3. Develop industry standards: Collaborate with industry groups to establish standardized document formats and processing protocols, reducing inefficiencies and errors.

4. Prioritize integration: Select AI solutions that integrate seamlessly with existing systems, minimizing disruption during the transition.

5. Monitor emerging technologies: Stay informed about advancements in AI, such as intelligent document processing (IDP) and robotic process automation (RPA), to remain competitive.

THE TIME IS NOW

The transportation and logistics sector is at a pivotal moment, with significant opportunities to leverage AI and automation to address long-standing inefficiencies in back-office operations. While challenges such as integration, cost, and training remain, the industry is moving steadily toward broader adoption of digital and AI-based solutions. By addressing these barriers and focusing on incremental, strategic implementation, companies can unlock the full potential of automation and AI, driving efficiency and competitiveness in an increasingly complex and volatile market.

Some may be understandably skeptical of AI’s ability to truly transform back-office operations, particularly given past failures to digitize paper-based processes. Certainly, no technology is perfect, and its effectiveness is dependent on how well the organization plans and executes its implementation. However, it’s important to note that huge advances have been made in the ability of AI to read, understand, and process documents. As a result, AI has the potential to make relatively light work of anything from invoices to bills of lading, with accuracy levels typically far higher than a human doing the work manually would achieve.

For supply chain professionals, the message is clear: The future of T&L lies in embracing digital transformation, investing in AI, and fostering collaboration across the industry. The time to act is now.

About the research

In 2024, the document automation company Hyperscience and the Council of Supply Chain Management Professionals (CSCMP) partnered with the research and advisory firm Deep Analysis on a research project exploring the current state of back-office processes in transportation and logistics, and the potential impact of AI. The report, “Market Momentum Index: AI Readiness in Transportation and Logistics Back-Office Operations,” is based on survey results from senior-level managers and executives from 300 enterprises located in the United States. All of these organizations have annual revenues greater than $10 million and more than 1,000 employees. The survey was conducted in November and December of 2024. The full 21-page report can be downloaded for free at https://explore.hyperscience.ai/report-ai-readiness-in-transportation-logistics.
