Zhang, C., Zhang, C., Zhang, M. & Kweon, I. S. Text-to-image diffusion model in generative AI: a survey. Preprint at https://doi.org/10.48550/arXiv.2303.07909 (2023).

He, K. et al. A survey of large language models for healthcare: from data, technology, and applications to accountability and ethics. Inf. Fusion 118, 102963 (2025).

Dahl, M., Magesh, V., Suzgun, M. & Ho, D. E. Large legal fictions: profiling legal hallucinations in large language models. J. Legal Anal. 16, 64–93 (2024).

Wu, S. et al. BloombergGPT: a large language model for finance. Preprint at https://doi.org/10.48550/arXiv.2303.17564 (2023).

Fan, A. et al. Large language models for software engineering: survey and open problems. In 2023 IEEE/ACM International Conference on Software Engineering: Future of Software Engineering (ICSE-FoSE) 31–53 (IEEE, 2023).

Jablonka, K. M. et al. 14 examples of how LLMs can transform materials science and chemistry: a reflection on a large language model hackathon. Digit. Discov. 2, 1233–1250 (2023).

Miret, S. et al. Perspective on AI for accelerated materials design at the AI4Mat-2023 workshop at NeurIPS 2023. Digit. Discov. 3, 1081–1085 (2024).

Lin, Z. et al. Evolutionary-scale prediction of atomic-level protein structure with a language model. Science 379, 1123–1130 (2023).

Hsu, C. et al. Learning inverse folding from millions of predicted structures. In Proc. 39th International Conference on Machine Learning Vol. 162 (eds Chaudhuri, K. et al.) 8946–8970 (PMLR, 2022).

Xu, M., Yuan, X., Miret, S. & Tang, J. ProtST: multi-modality learning of protein sequences and biomedical texts. In International Conference on Machine Learning 38749–38767 (PMLR, 2023).

Cui, H. et al. scGPT: toward building a foundation model for single-cell multi-omics using generative AI. Nat. Methods 21, 1470–1480 (2024).

Dalla-Torre, H. et al. Nucleotide Transformer: building and evaluating robust foundation models for human genomics. Nat. Methods 22, 287–297 (2025).

Trewartha, A. et al. Quantifying the advantage of domain-specific pre-training on named entity recognition tasks in materials science. Patterns 3, 100488 (2022).

Gupta, T., Zaki, M., Krishnan, N. A. & Mausam, M. MatSciBERT: a materials domain language model for text mining and information extraction. npj Comput. Mater. 8, 102 (2022).

Huang, S. & Cole, J. M. BatteryBERT: a pretrained language model for battery database enhancement. J. Chem. Inf. Model. 62, 6365–6377 (2022).

Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: pre-training of deep bidirectional transformers for language understanding. In Proc. 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (eds Burstein, J. et al.) Vol. 1, 4171–4186 (ACL, 2019).

Song, Y., Miret, S. & Liu, B. MatSci-NLP: evaluating scientific language models on materials science language tasks using text-to-schema modeling. In Proc. 61st Annual Meeting of the Association for Computational Linguistics (eds Rogers, A. et al.) Vol. 1, 3621–3639 (ACL, 2023).

Song, Y., Miret, S., Zhang, H. & Liu, B. HoneyBee: progressive instruction finetuning of large language models for materials science. In Findings of the Association for Computational Linguistics: EMNLP 2023 (eds Bouamor, H. et al.) 5724–5739 (ACL, 2023).

Xie, T. et al. DARWIN series: domain specific large language models for natural science. Preprint at https://doi.org/10.48550/arXiv.2308.13565 (2023).

Zaki, M., Jayadeva, J., Mausam, M. & Krishnan, N. A. MaScQA: investigating materials science knowledge of large language models. Digit. Discov. 3, 313–327 (2024).

Bran, M. et al. Augmenting large language models with chemistry tools. Nat. Mach. Intell. 6, 525–535 (2024).

Boiko, D. A., MacKnight, R., Kline, B. & Gomes, G. Autonomous chemical research with large language models. Nature 624, 570–578 (2023).

Gupta, T. et al. DiSCoMaT: distantly supervised composition extraction from tables in materials science articles. In Proc. 61st Annual Meeting of the Association for Computational Linguistics (eds Rogers, A. et al.) Vol. 1, 13465–13483 (ACL, 2023).

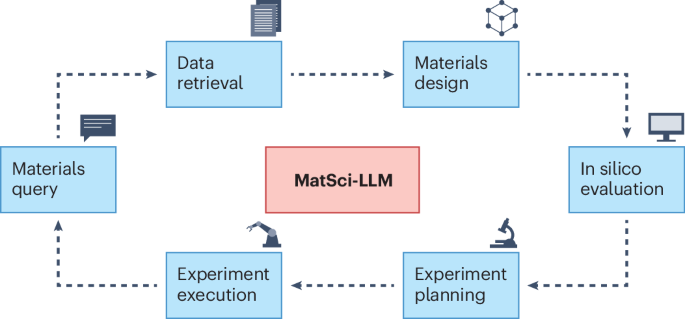

Zhang, H., Song, Y., Hou, Z., Miret, S. & Liu, B. HoneyComb: a flexible LLM-based agent system for materials science. In Findings of the Association for Computational Linguistics: EMNLP 2024 (eds Al-Onaizan, Y. et al.) 3369–3382 (ACL, 2024).

Achiam, J. et al. GPT-4 technical report. Preprint at https://doi.org/10.48550/arXiv.2303.08774 (2023).

Dubey, A. et al. The Llama 3 herd of models. Preprint at https://doi.org/10.48550/arXiv.2407.21783 (2024).

Together Computer. RedPajama: an open dataset for training large language models. GitHub https://github.com/togethercomputer/RedPajama-Data (2023).

Wei, J. et al. Chain-of-thought prompting elicits reasoning in large language models. Adv. Neural Inf. Process. Syst. 35, 24824–24837 (2022).

BehnamGhader, P., Miret, S. & Reddy, S. Can retriever-augmented language models reason? The blame game between the retriever and the language model. In Findings of the Association for Computational Linguistics: EMNLP 2023 (eds Bouamor, H. et al.) 15492–15509 (ACL, 2023).

Buehler, M. J. Generative retrieval-augmented ontologic graph and multiagent strategies for interpretive large language model-based materials design. ACS Eng. Au. 4, 241–277 (2023).

Yoshikawa, N. et al. Large language models for chemistry robotics. Auton. Robots 47, 1057–1086 (2023).

Ghafarollahi, A. & Buehler, M. J. Automating alloy design and discovery with physics-aware multimodal multiagent AI. Proc. Natl Acad. Sci. USA 122, e2414074122 (2025).

Ghafarollahi, A. & Buehler, M. J. SciAgents: automating scientific discovery through bioinspired multi‐agent intelligent graph reasoning. Adv. Mater. 37, 2413523 (2024).

Ghafarollahi, A. & Buehler, M. J. ProtAgents: protein discovery via large language model multi-agent collaborations combining physics and machine learning. Digit. Discov. 3, 1389–1409 (2024).

Mishra, V. et al. Foundational large language models for materials research. Preprint at https://doi.org/10.48550/arXiv.2412.09560 (2024).

White, A. D. et al. Assessment of chemistry knowledge in large language models that generate code. Digit. Discov. 2, 368–376 (2023).

Mirza, A. et al. A framework for evaluating the chemical knowledge and reasoning abilities of large language models against the expertise of chemists. Nat. Chem. https://doi.org/10.1038/s41557-025-01815-x (2025).

Zhang, D. et al. DPA-2: a large atomic model as a multi-task learner. npj Comput. Mater. 10, 293 (2024).

Jain, A. et al. The Materials Project: a materials genome approach to accelerating materials innovation. APL Mater. 1, 011002 (2013).

Lee, K. L. K. et al. MatSciML: a broad, multi-task benchmark for solid-state materials modeling. In AI for Accelerated Materials Design—NeurIPS 2023 Workshop (NeurIPS, 2023).

Hellwich, K.-H., Hartshorn, R. M., Yerin, A., Damhus, T. & Hutton, A. T. Brief guide to the nomenclature of organic chemistry (IUPAC technical report). Pure Appl. Chem. 92, 527–539 (2020).

Kearnes, S. M. et al. The Open Reaction Database. J. Am. Chem. Soc. 143, 18820–18826 (2021).

Mercado, R., Kearnes, S. M. & Coley, C. W. Data sharing in chemistry: lessons learned and a case for mandating structured reaction data. J. Chem. Inf. Model. 63, 4253–4265 (2023).

Zhao, J., Huang, S. & Cole, J. M. OpticalBERT and OpticalTable-SQA: text- and table-based language models for the optical-materials domain. J. Chem. Inf. Model. 63, 1961–1981 (2023).

Zhao, J. & Cole, J. M. A database of refractive indices and dielectric constants auto-generated using ChemDataExtractor. Sci. Data 9, 192 (2022).

Schilling-Wilhelmi, M. et al. From text to insight: large language models for chemical data extraction. Chem. Soc. Rev. 54, 1125–1150 (2025).

Hira, K., Zaki, M., Sheth, D. B., Mausam, M. & Anoop Krishnan, N. M. Reconstructing materials tetrahedron: challenges in materials information extraction. In AI for Accelerated Materials Design—NeurIPS 2023 Workshop (NeurIPS, 2023).

Olivetti, E. A. et al. Data-driven materials research enabled by natural language processing and information extraction. Appl. Phys. Rev. 7, 041317 (2020).

Jensen, Z. et al. Discovering relationships between OSDAs and zeolites through data mining and generative neural networks. ACS Cent. Sci. 7, 858–867 (2021).

Kim, E. et al. Materials synthesis insights from scientific literature via text extraction and machine learning. Chem. Mater. 29, 9436–9444 (2017).

Kim, E. et al. Inorganic materials synthesis planning with literature-trained neural networks. J. Chem. Inf. Model. 60, 1194–1201 (2020).

Wu, Y. et al. An empirical study on challenging math problem solving with GPT-4. Preprint at https://doi.org/10.48550/arXiv.2306.01337 (2023).

Alampara, N., Miret, S. & Jablonka, K. M. MatText: do language models need more than text & scale for materials modeling? In AI for Accelerated Materials Design-Vienna 2024 (NeurIPS, 2024).

Luu, R. K. & Buehler, M. J. BioinspiredLLM: conversational large language model for the mechanics of biological and bio-inspired materials. Adv. Sci. 11, 2306724 (2024).

Lu, W., Luu, R. K. & Buehler, M. J. Fine-tuning large language models for domain adaptation: exploration of training strategies, scaling, model merging and synergistic capabilities. npj Comput. Mater. 11, 84 (2025).

Dagdelen, J. et al. Structured information extraction from scientific text with large language models. Nat. Commun. 15, 1418 (2024).

Ramos, M. C., Collison, C. J. & White, A. D. A review of large language models and autonomous agents in chemistry. Chem. Sci. 16, 2514–2572 (2025).

Lála, J. et al. PaperQA: retrieval-augmented generative agent for scientific research. Preprint at https://doi.org/10.48550/arXiv.2312.07559 (2023).

Skarlinski, M. D. et al. Language agents achieve superhuman synthesis of scientific knowledge. Preprint at https://doi.org/10.48550/arXiv.2409.13740 (2024).

Ramos, M. C., Michtavy, S. S., Porosoff, M. D. & White, A. D. Bayesian optimization of catalysts with in-context learning. Preprint at https://doi.org/10.48550/arXiv.2304.05341 (2023).

Sung, Y.-L. et al. An empirical study of multimodal model merging. In Findings of the Association for Computational Linguistics: EMNLP 2023 (eds Bouamor, H. et al.) 1563–1575 (ACL, 2023).

Buehler, M. J. Cephalo: multi-modal vision-language models for bio-inspired materials analysis and design. Adv. Funct. Mater. 34, 2409531 (2024).

Buehler, M. J. Accelerating scientific discovery with generative knowledge extraction, graph-based representation, and multimodal intelligent graph reasoning. Mach. Learn. Sci. Technol. 5, 035083 (2024).

Buehler, M. J. MeLM, a generative pretrained language modeling framework that solves forward and inverse mechanics problems. J. Mech. Phys. Solids 181, 105454 (2023).

Gruver, N. et al. Fine-tuned language models generate stable inorganic materials as text. In AI for Accelerated Materials Design—NeurIPS 2023 Workshop (NeurIPS, 2023).

Ding, Q., Miret, S. & Liu, B. MatExpert: decomposing materials discovery by mimicking human experts. In 13th International Conference on Learning Representations (ICLR, 2025).

Alampara, N. et al. Probing the limitations of multimodal language models for chemistry and materials research. Preprint at https://doi.org/10.48550/arXiv.2411.16955 (2024).

Guan, X. et al. rStar-Math: small LLMs can master math reasoning with self-evolved deep thinking. Preprint at https://doi.org/10.48550/arXiv.2501.04519 (2025).

Qin, Y. et al. O1 replication journey: a strategic progress report—part 1. Preprint at https://doi.org/10.48550/arXiv.2410.18982 (2024).

Lambert, N. et al. Tulu 3: pushing frontiers in open language model post-training. Preprint at https://doi.org/10.48550/arXiv.2411.15124 (2024).

Brown, B. et al. Large language monkeys: scaling inference compute with repeated sampling. Preprint at https://doi.org/10.48550/arXiv.2407.21787 (2024).

Narayanan, S. et al. Aviary: training language agents on challenging scientific tasks. Preprint at https://doi.org/10.48550/arXiv.2412.21154 (2024).

Mysore, S. et al. The materials science procedural text corpus: annotating materials synthesis procedures with shallow semantic structures. In Proc. 13th Linguistic Annotation Workshop 56–64 (ACL, 2019).

Völker, C., Rug, T., Jablonka, K. M. & Kruschwitz, S. LLMs can design sustainable concrete—a systematic benchmark. Preprint at Res. Sq. https://doi.org/10.21203/rs.3.rs-3913272/v1 (2024).

Brinson, L. C. et al. Polymer nanocomposite data: curation, frameworks, access, and potential for discovery and design. ACS Macro Lett. 9, 1086–1094 (2020).

Circi, D., Khalighinejad, G., Chen, A., Dhingra, B. & Brinson, L. C. How well do large language models understand tables in materials science? Integr. Mater. Manuf. Innov. 13, 669–687 (2024).

Polak, M. P. & Morgan, D. Extracting accurate materials data from research papers with conversational language models and prompt engineering. Nat. Commun. 15, 1569 (2024).

Kristiadi, A. et al. A sober look at LLMs for material discovery: are they actually good for Bayesian optimization over molecules? In 41st International Conference on Machine Learning 25603–25622 (PMLR, 2024).

Vasudevan, R. K., Orozco, E. & Kalinin, S. V. Discovering mechanisms for materials microstructure optimization via reinforcement learning of a generative model. Mach. Learn. Sci. Technol. 3, 04LT03 (2022).

Mandal, I. et al. Autonomous microscopy experiments through large language model agents. Preprint at https://doi.org/10.48550/arXiv.2501.10385 (2025).

Liu, Y., Checa, M. & Vasudevan, R. K. Synergizing human expertise and AI efficiency with language model for microscopy operation and automated experiment design. Mach. Learn. Sci. Technol. 5, 02LT01 (2024).

Buehler, M. J. MechGPT, a language-based strategy for mechanics and materials modeling that connects knowledge across scales, disciplines, and modalities. Appl. Mech. Rev. 76, 021001 (2024).

Venugopal, V. & Olivetti, E. MatKG: an autonomously generated knowledge graph in material science. Sci. Data 11, 217 (2024).

Flam-Shepherd, D. & Aspuru-Guzik, A. Language models can generate molecules, materials, and protein binding sites directly in three dimensions as XYZ, CIF, and PDB files. Preprint at https://doi.org/10.48550/arXiv.2305.05708 (2023).

Zeni, C. et al. A generative model for inorganic materials design. Nature 639, 624–632 (2025).

Govindarajan, P. et al. Learning conditional policies for crystal design using offline reinforcement learning. In AI for Accelerated Materials Design—NeurIPS 2023 Workshop (NeurIPS, 2023).

Levy, D. et al. SymmCD: symmetry-preserving crystal generation with diffusion models. In 13th International Conference on Learning Representations (ICLR, 2025).

Rubungo, A. N., Arnold, C., Rand, B. P. & Dieng, A. B. LLM-Prop: predicting physical and electronic properties of crystalline solids from their text descriptions. Preprint at https://doi.org/10.48550/arXiv.2310.14029 (2023).

Sim, M. et al. ChemOS 2.0: an orchestration architecture for chemical self-driving laboratories. Matter 7, 2959–2977 (2024).

Szymanski, N. J. et al. An autonomous laboratory for the accelerated synthesis of novel materials. Nature 624, 86–91 (2023).
