AI Research

3 Words That Could Be a Big Problem for Artificial Intelligence (AI) Chatbots

Key Points

- Tech company Cloudflare is making it easy for content owners to deploy pay per crawl.

- If chatbots have to pay each time they crawl a website, that can drastically increase their operating costs.

Many tech companies are investing heavily into artificial intelligence (AI) chatbots, which can help address customer queries efficiently and allow businesses to reduce their staffing levels. Grok, Claude, ChatGPT, Gemini, Perplexity, and Copilot are just some of the names you’ve probably encountered by now. And those are just some of the more popular chatbots.

Chatbots’ ability to collect, analyze, and summarize data has many people excited about their potential. But there are three words that could derail that potential and significantly increase the costs for the companies that are betting on chatbots: pay per crawl.

Cloudflare to offer pay per crawl

Cloudflare (NYSE: NET) helps people and companies create websites and make them both faster and more secure. It recently announced a new feature that could stifle chatbots: pay per crawl. This means content owners could ensure that they are compensated when AI chatbots access their sites, collect data, and use it in a response to a user’s query.

This is what I expect to be the norm going forward. That’s because the danger for content owners is that if a chatbot can simply scrape information from a website, without compensating the owner for it, that results in less traffic and fewer ad dollars. Restricting access is one option, but forcing AI chatbots to pay for access is another one. And it’s crucial for chatbots because if they don’t have access to the latest information, their answers can quickly become outdated and less useful to the end user.

Given how common it is these days for a company to have its own chatbot, I believe that in the future chatbots will operate within their own silos and pull only company-specific information. Being able to scour and scrape the internet for all the best content seems improbable, given the costs that could be incurred from doing so, especially if sites deploy pay per crawl.

Earlier this year, OpenAI’s CEO Sam Altman said that even on a $200-per-month pro subscription for ChatGPT, the company was losing money. And that’s without having to worry about the costs if pay per crawl were initiated at a large scale. Under that scenario, it can be much more difficult for a company running an AI chatbot to turn a profit.

Investing in AI chatbots may not be a recipe for success

Many big tech companies can afford to invest heavily into tech, and they have indeed done so. One of the best examples of that is Meta Platforms (NASDAQ: META), which owns popular social media applications such as WhatsApp and Facebook.

It recently announced the launch of a new AI division, as it spends heavily on AI-related growth. Last month, it also announced a $14 billion investment into Scale AI and hired its co-founder, Alex Wang, to help lead Meta’s AI efforts.

The company has its own chatbot, Meta AI, which it is offering as a stand-alone app, as it looks to compete with others, including ChatGPT. Meta, with billions of monthly active users, has a ton of user data it can tap into. But building up strong AI capabilities for its business and chatbot could result in more significant expenditures in the future, making it difficult for this to be a profitable venture down the road.

While Meta has deep pockets and has generated free cash flow of over $52 billion in the trailing 12 months, investors will want to keep a close eye on the company’s AI efforts, to ensure the new division doesn’t just become another money pit like Reality Labs.

Investors should tread carefully with stocks spending big on AI

AI is the new buzz term in tech, and while companies are falling over themselves spending heavily on these next-gen technologies, it’s unclear just how big of a payoff there might be from such efforts — if there will even be one at all. Some companies will undoubtedly become more efficient and profitable by improving their operations. But in other cases, especially when the focus is on chatbots, that may not be the case.

The prudent thing for investors to do when looking at tech stocks is to see what their plans are for AI, and how they believe their investments will lead to improved financials down the road. If there isn’t a clear plan and it’s just about investing heavily into AI and into chatbots, that can be a sign that the company is on a spending spree that could end up doing more harm than good.

There’s a lot of excitement around AI these days, but it’s important to keep it in check. Hype can help a stock rally in the short term, but it’s strong fundamentals that will ensure its value remains high over the long haul.

Randi Zuckerberg, a former director of market development and spokeswoman for Facebook and sister to Meta Platforms CEO Mark Zuckerberg, is a member of The Motley Fool’s board of directors. David Jagielski has no position in any of the stocks mentioned. The Motley Fool has positions in and recommends Cloudflare and Meta Platforms. The Motley Fool has a disclosure policy.

AUI, PMU Sign Agreement to Establish AI Research Chair in Morocco

Rabat — Al Akhawayn University in Ifrane (AUI) and Prince Mohammed Bin Fahd University (PMU) announced an agreement establishing the Prince Mohammed Bin Fahd bin Abdulaziz Chair for Artificial Intelligence Applications.

A statement from AUI said Amine Bensaid, President of AUI, signed the agreement with his PMU counterpart Issa Al Ansari.

The Chair, established within AUI, will conduct applied research in AI to develop solutions that address societal needs and promote innovation to support Moroccan talents in their fields.

The agreement reflects a shared commitment to strengthen cooperation between the two institutions, with a focus on AI to contribute to the socio-economic development of both Morocco and Saudi Arabia, the statement added.

The initiative also seeks to help Morocco and Saudi Arabia boost their national priorities through AI as a key tool in advancing academic excellence.

Bensaid commented on the agreement, saying that the partnership will strengthen Al Akhawayn’s mission to “combine academic excellence with technological innovation.”

It will also help students master AI skills in order to serve humanity and protect citizens from risk.

“By hosting this initiative, we also affirm the role of Al Akhawayn and Morocco as pioneering actors in this field in Africa and in the region.”

For his part, Al Ansari also expressed satisfaction with the new agreement, stating that the pact is in line with PMU’s efforts to serve Saudi Arabia’s Vision 2030.

This vision “places artificial intelligence at the heart of economic and social transformation,” he affirmed.

He also expressed his university’s commitment to working with Al Akhawayn University to help address tomorrow’s challenges and train the new generation of talents that are capable of shaping the future.

Al Akhawayn has been reiterating its commitment to continue to cooperate with other institutions in order to boost research as well as ethical AI use.

In April, AUI signed an agreement with the American University of Sharjah to promote collaboration in research and teaching, as well as to empower Moroccan and Emirati students and citizens to engage with AI tools while staying rooted in their cultural identity.

This is in line with Morocco’s ambition to enhance AI use in its own education sector.

In January, Secretary General of Education Younes Shimi outlined Morocco’s ambition and advocacy for integrating AI into education.

He also called for making this technology effective, adaptable, and accessible for the specific needs of Moroccans and for the rest of the Arab world.

How NAU professors are using AI in their research – The NAU Review

Generative AI is in classrooms already. Can educators use this tool to enhance learning among their students instead of undercutting assignments?

Yes, said Priyanka Parekh, an assistant research professor in the Center for STEM Teaching and Learning at NAU. With a grant from NAU’s Transformation through Artificial Intelligence in Learning (TRAIL) program, Parekh is investigating how undergraduate students use GenAI as learning partners—building on what they learn in the classroom to maximize their understanding of STEM topics. It’s an important question as students make increasing use of these tools with or without their professors’ knowledge.

“As GenAI becomes an integral part of everyday life, this project contributes to building critical AI literacy skills that enable individuals to question, critique and ethically utilize AI tools in and beyond the school setting,” Parekh said.

That is the foundation of the TRAIL program, which offers grants to professors to explore how to use GenAI in their work. Fourteen professors received grants to implement GenAI in their classrooms this year. Now in the program’s second year, the Office of the Provost has partnered with the Office of the Vice President for Research to offer grants to professors in five different colleges to study the use of GenAI tools in research.

The recipients are:

- Chris Johnson, School of Communication, Integrating AI-Enhanced Creative Workflows into Art, Design, Visual Communication, and Animation Education

- Priyanka Parekh, Center for Science Teaching and Learning, Understanding Learner Interactions with Generative AI as Distributed Cognition

- Marco Gerosa, School of Informatics, Computing, and Cyber Systems, To what extent can AI replace human subjects in software engineering research?

- Emily Schneider, Criminology and Criminal Justice, Israeli-Palestinian Peacebuilding through Artificial Intelligence

- Delaney La Rosa, College of Nursing, Enhancing Research Proficiency in Higher Education: Analyzing the Impact of Afforai on Student Literature Review and Information Synthesis

Exploring how GenAI shapes students as learners

Parekh’s goals in her research are to understand how students engage with GenAI in real academic tasks and what this learning process looks like; to advance AI literacy, particularly among first-generation, rural and underrepresented learners; to help faculty become more comfortable with AI; and to provide evidence-based recommendations for integrating GenAI equitably in STEM education.

It’s a big ask, but she’s excited to see how the study shakes out and how students interact with the tools in an educational environment. She anticipates her study will have broader applications as well; employees in industries like healthcare, engineering and finance are using AI, and her work may help implement more equitable GenAI use across a variety of industries.

“Understanding how learners interact with GenAI to solve problems, revise ideas or evaluate information can inform AI-enhanced workplace training, job simulations and continuing education,” she said.

Using AI as a collaborator, not a shortcut

Johnson, a professor of visual communication in the School of Communication, isn’t looking for AI to create art, but he thinks it can be an important tool in the creation process—one that helps human creators create even better art. His project will include:

- Building a set of classroom-ready workflows that combine different industry tools like After Effects, Procreate Dreams and Blender with AI assistants for tasks such as storyboarding, ideation, cleanup and accessibility support

- Running guided studies to compare baseline pipelines to AI-assisted pipelines, looking at time saved and quality

- Creating open teaching modules that other instructors can adopt

In addition to creating usable, adaptable curriculum that teaches students to use AI to enhance their workflow—without replacing their work—and to improve accessibility standards, Johnson said this study will produce clear before and after case studies that show where AI can help and where it can’t.

“AI is changing creative industries, but the real skill isn’t pressing a button—it’s knowing how to direct, critique and refine AI as a collaborator,” Johnson said. “That’s what we’re teaching our students: how to keep authorship, ethics and creativity at the center.”

Johnson’s work also will take on the ethics of training and provenance that are a constant part of the conversation around using AI in art creation. His study will emphasize tools that respect artists’ rights and steer clear of imitating the styles of living artists without consent. He also will emphasize to students where AI fits into the work; it’s second in the process after they’ve initially created their work. It offers feedback; it doesn’t create the work.

Top photo: This is an image produced by ChatGPT illustrating Parekh’s research. I started with the prompt: “Can you make an image that has picture quality that shows a student with a reflection journal or interface showing their GenAI interaction and metacognitive responses (e.g., ‘Did this response help me?’)?” It took a few rounds of changing the prompt, including telling AI twice to not put three hands into the image, to get to an image that reflects Parekh’s research and adheres to The NAU Review’s standards.

Heidi Toth | NAU Communications

(928) 523-8737 | heidi.toth@nau.edu

How London Stock Exchange Group is detecting market abuse with their AI-powered Surveillance Guide on Amazon Bedrock

London Stock Exchange Group (LSEG) is a global provider of financial markets data and infrastructure. It operates the London Stock Exchange and manages international equity, fixed income, and derivative markets. The group also develops capital markets software, offers real-time and reference data products, and provides extensive post-trade services. This post was co-authored with Charles Kellaway and Rasika Withanawasam of LSEG.

Financial markets are remarkably complex, hosting increasingly dynamic investment strategies across new asset classes and interconnected venues. Accordingly, regulators place great emphasis on the ability of market surveillance teams to keep pace with evolving risk profiles. However, the landscape is vast; London Stock Exchange alone facilitates the trading and reporting of over £1 trillion of securities by 400 members annually. Effective monitoring must cover all MiFID asset classes, markets and jurisdictions to detect market abuse, while also giving weight to participant relationships, and market surveillance systems must scale with volumes and volatility. As a result, many systems are outdated and unsatisfactory for regulatory expectations, requiring manual and time-consuming work.

To address these challenges, London Stock Exchange Group (LSEG) has developed an innovative solution using Amazon Bedrock, a fully managed service that offers a choice of high-performing foundation models from leading AI companies, to automate and enhance their market surveillance capabilities. LSEG’s AI-powered Surveillance Guide helps analysts efficiently review trades flagged for potential market abuse by automatically analyzing news sensitivity and its impact on market behavior.

In this post, we explore how LSEG used Amazon Bedrock and Anthropic’s Claude foundation models to build an automated system that significantly improves the efficiency and accuracy of market surveillance operations.

The challenge

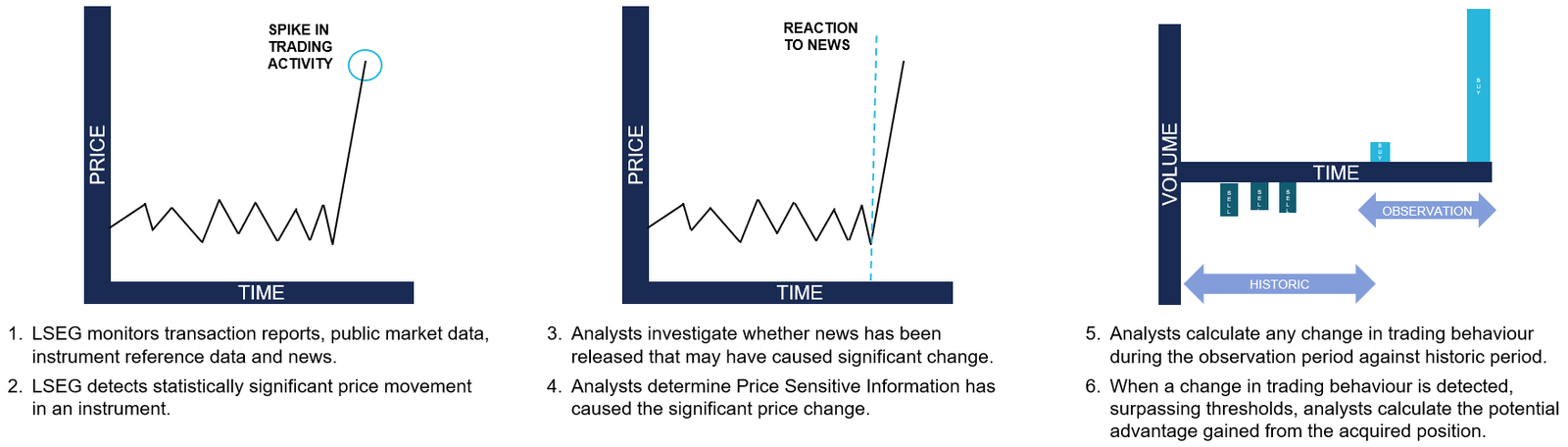

Currently, LSEG’s surveillance monitoring systems generate automated, customized alerts to flag suspicious trading activity to the Market Supervision team. Analysts then conduct initial triage assessments to determine whether the activity warrants further investigation, which might require differing levels of qualitative analysis. This could involve manually collating any and all evidence that might be applicable when methodically corroborating regulation, news, sentiment and trading activity. For example, during an insider dealing investigation, analysts are alerted to statistically significant price movements. The analyst must then conduct an initial assessment of related news during the observation period to determine whether the highlighted price move was caused by specific news and how price sensitive that news is likely to be, as shown in the following figure. This initial step in assessing the presence, or absence, of price sensitive news guides the subsequent actions an analyst will take with a possible case of market abuse.

Initial triaging can be a time-consuming and resource-intensive process, and it can still necessitate a full investigation if the identified behavior remains potentially suspicious or abusive.

Moreover, the dynamic nature of financial markets and evolving tactics and sophistication of bad actors demand that market facilitators revisit automated rules-based surveillance systems. The increasing frequency of alerts and high number of false positives adversely impact an analyst’s ability to devote quality time to the most meaningful cases, and such heightened emphasis on resources could result in operational delays.

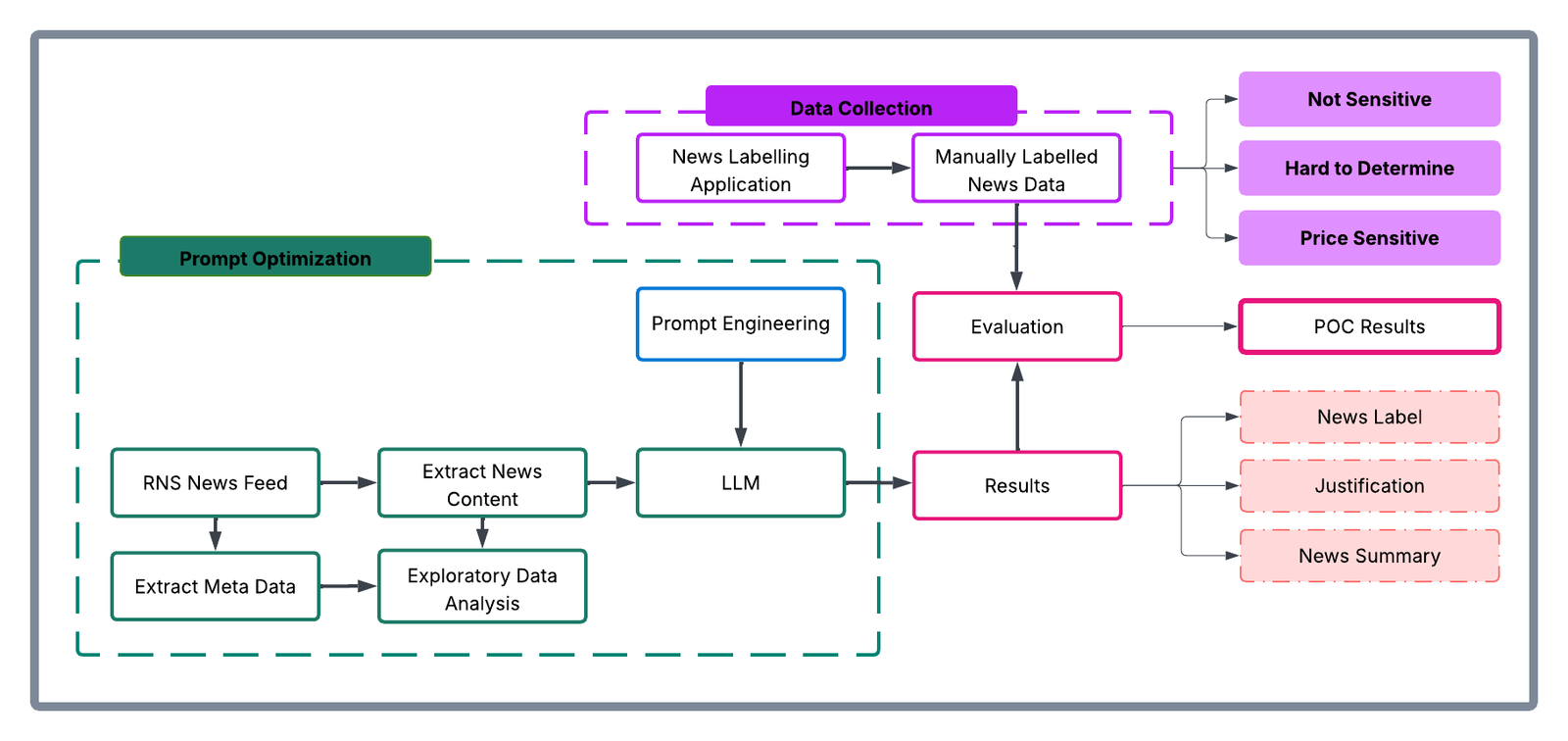

Solution overview

To address these challenges, LSEG collaborated with AWS to improve insider dealing detection, developing a generative AI prototype that automatically predicts the probability of news articles being price sensitive. The system employs Anthropic’s Claude Sonnet 3.5 model (the most price performant model at the time) through Amazon Bedrock to analyze news content from LSEG’s Regulatory News Service (RNS) and classify articles based on their potential market impact. The results help analysts determine more quickly whether highlighted trading activity can be mitigated during the observation period.

The architecture consists of three main components:

- A data ingestion and preprocessing pipeline for RNS articles

- Amazon Bedrock integration for news analysis using Claude Sonnet 3.5

- Inference application for visualising results and predictions

The following diagram illustrates the conceptual approach:

The workflow processes news articles through the following steps:

- Ingest raw RNS news documents in HTML format

- Preprocess and extract clean news text

- Fill the classification prompt template with text from the news documents

- Prompt Anthropic’s Claude Sonnet 3.5 through Amazon Bedrock

- Receive and process model predictions and justifications

- Present results through the visualization interface developed using Streamlit
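The first four steps of this workflow can be sketched in a few lines of Python. This is a minimal illustration rather than LSEG's pipeline: the prompt wording, the function names, and the `call_model` callable (a stand-in for whatever client sends the prompt to Claude through Amazon Bedrock) are all assumptions made for the example.

```python
from html.parser import HTMLParser
from typing import Callable

# Hypothetical prompt template; the wording LSEG used is not given in the post.
PROMPT_TEMPLATE = (
    "Summarise the news article below, then classify its price sensitivity "
    "and justify the classification.\n\n<article>\n{article}\n</article>"
)


class _TextExtractor(HTMLParser):
    """Collects visible text, skipping <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.parts.append(data.strip())


def extract_text(html: str) -> str:
    """Step 2: strip markup and return the clean news text."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


def build_prompt(html: str) -> str:
    """Step 3: fill the classification prompt template."""
    return PROMPT_TEMPLATE.format(article=extract_text(html))


def classify(html: str, call_model: Callable[[str], str]) -> str:
    """Step 4: send the prompt to a model client supplied by the caller."""
    return call_model(build_prompt(html))
```

Passing the model client in as a callable keeps the preprocessing testable without network access or credentials.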

Methodology

The team collated a comprehensive dataset of approximately 250,000 RNS articles spanning 6 consecutive months of trading activity in 2023. The raw data, HTML documents from RNS, were initially pre-processed within the AWS environment: extraneous HTML elements were removed and the documents were formatted to extract clean textual content. Having isolated substantive news content, the team subsequently carried out exploratory data analysis to understand distribution patterns within the RNS corpus, focused on three dimensions:

- News categories: Distribution of articles across different regulatory categories

- Instruments: Financial instruments referenced in the news articles

- Article length: Statistical distribution of document sizes

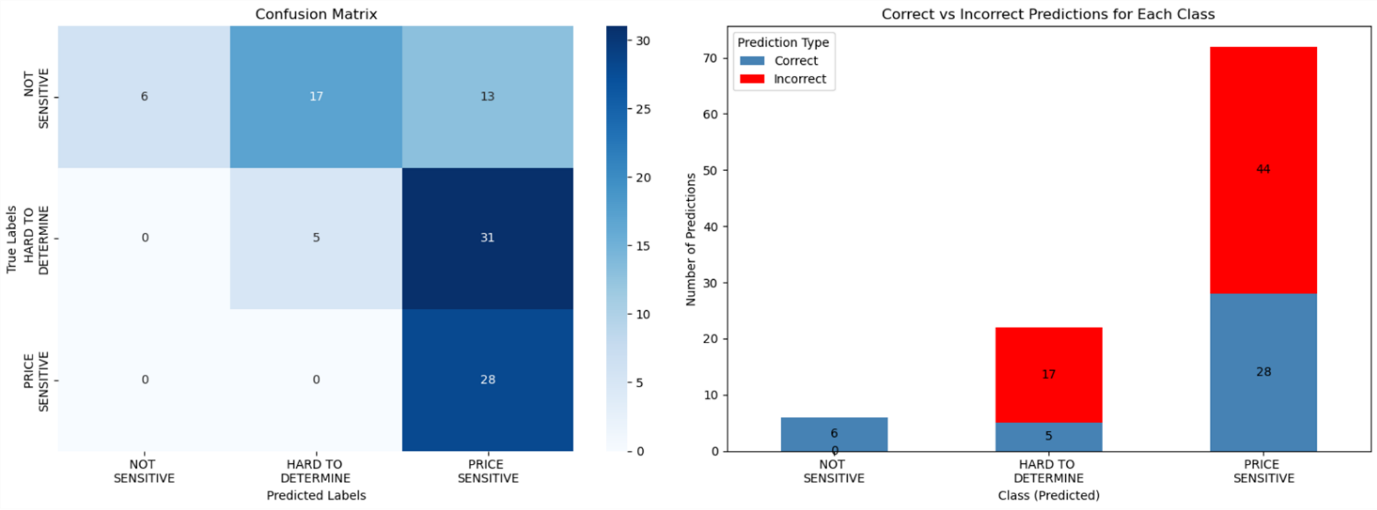

Exploration provided contextual understanding of the news landscape and informed the sampling strategy in creating a representative evaluation dataset. A total of 110 articles were selected to cover major news categories, and this curated subset was presented to market surveillance analysts who, as domain experts, evaluated each article’s price sensitivity on a nine-point scale, as shown in the following image:

- 1–3: PRICE_NOT_SENSITIVE – Low probability of price sensitivity

- 4–6: HARD_TO_DETERMINE – Uncertain price sensitivity

- 7–9: PRICE_SENSITIVE – High probability of price sensitivity
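The banding of the nine-point scale is simple enough to express directly. The function name below is hypothetical; the thresholds and label strings come from the list above.

```python
def label_from_score(score: int) -> str:
    """Map an analyst's 1-9 price-sensitivity score to its class label."""
    if not 1 <= score <= 9:
        raise ValueError(f"score must be between 1 and 9, got {score}")
    if score <= 3:
        return "PRICE_NOT_SENSITIVE"
    if score <= 6:
        return "HARD_TO_DETERMINE"
    return "PRICE_SENSITIVE"
```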

The experiment was executed within Amazon SageMaker using Jupyter Notebooks as the development environment. The technical stack consisted of:

- Instructor library: Provided integration capabilities with Anthropic’s Claude Sonnet 3.5 model in Amazon Bedrock

- Amazon Bedrock: Served as the API infrastructure for model access

- Custom data processing pipelines (Python): For data ingestion and preprocessing

This infrastructure enabled systematic experimentation with various algorithmic approaches, including traditional supervised learning methods, prompt engineering with foundation models, and fine-tuning scenarios.

The evaluation framework established specific technical success metrics:

- Data pipeline implementation: Successful ingestion and preprocessing of RNS data

- Metric definition: Clear articulation of precision, recall, and F1 metrics

- Workflow completion: Execution of comprehensive exploratory data analysis (EDA) and experimental workflows

The analytical approach was a two-step classification process, as shown in the following figure:

- Step 1: Classify news articles as potentially price sensitive or other

- Step 2: Classify news articles as potentially price not sensitive or other

This multi-stage architecture was designed to maximize classification accuracy by allowing analysts to focus on specific aspects of price sensitivity at each stage. The results from each step were then merged to produce the final output, which was compared with the human-labeled dataset to generate quantitative results.

To consolidate the results from both classification steps, the data merging rules followed were:

| Step 1 Classification | Step 2 Classification | Final Classification |
|---|---|---|
| Sensitive | Other | Sensitive |
| Other | Non-sensitive | Non-sensitive |
| Other | Other | Ambiguous – requires manual review i.e., Hard to Determine |
| Sensitive | Non-sensitive | Ambiguous – requires manual review i.e., Hard to Determine |
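The merging rules amount to a four-entry lookup. A minimal sketch follows, with a hypothetical function name and the final label shortened to "Hard to Determine"; the label strings otherwise follow the table.

```python
# Each key is (step 1 result, step 2 result); each value is the final class.
MERGE_RULES = {
    ("Sensitive", "Other"): "Sensitive",
    ("Other", "Non-sensitive"): "Non-sensitive",
    ("Other", "Other"): "Hard to Determine",
    ("Sensitive", "Non-sensitive"): "Hard to Determine",
}


def merge_classifications(step1: str, step2: str) -> str:
    """Combine the two binary classifications into the final label."""
    try:
        return MERGE_RULES[(step1, step2)]
    except KeyError:
        raise ValueError(f"unexpected combination: {step1!r}, {step2!r}") from None
```

An article is only auto-classified when exactly one step gives a positive signal; agreement on "Other" and outright contradiction both route to manual review.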

Based on the insights gathered, prompts were optimized. The prompt templates elicited three key components from the model:

- A concise summary of the news article

- A price sensitivity classification

- A chain-of-thought explanation justifying the classification decision
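One way to make these three components machine-checkable is to request them as JSON and validate the reply before it reaches an analyst. The sketch below assumes a JSON reply with hypothetical field names (`summary`, `classification`, `justification`); the post does not confirm the reply format, and the team's own integration used the Instructor library rather than this hand-rolled parser.

```python
import json
from dataclasses import dataclass

REQUIRED_FIELDS = ("summary", "classification", "justification")


@dataclass(frozen=True)
class ArticleAssessment:
    summary: str          # concise summary of the news article
    classification: str   # price sensitivity label
    justification: str    # reasoning behind the label


def parse_assessment(raw: str) -> ArticleAssessment:
    """Parse a model reply and reject it if any component is missing."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [name for name in REQUIRED_FIELDS if not data.get(name)]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    return ArticleAssessment(*(str(data[name]) for name in REQUIRED_FIELDS))
```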

The following is an example prompt:

As shown in the following figure, the solution was optimized to maximize:

- Precision for the NOT SENSITIVE class

- Recall for the PRICE SENSITIVE class

This optimization strategy was deliberate, facilitating high confidence in non-sensitive classifications to reduce unnecessary escalations to human analysts (in other words, to reduce false positives). Through this methodical approach, prompts were iteratively refined while maintaining rigorous evaluation standards through comparison against the expert-annotated baseline data.
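For reference, per-class precision and recall can be computed from paired label lists as sketched below. The function names are hypothetical and these are the standard textbook definitions; the post does not show the team's evaluation code.

```python
from typing import Optional, Sequence


def precision(y_true: Sequence[str], y_pred: Sequence[str], label: str) -> Optional[float]:
    """Of the articles predicted as `label`, the fraction truly `label`."""
    truths = [t for t, p in zip(y_true, y_pred) if p == label]
    if not truths:
        return None  # undefined when the label was never predicted
    return sum(t == label for t in truths) / len(truths)


def recall(y_true: Sequence[str], y_pred: Sequence[str], label: str) -> Optional[float]:
    """Of the articles truly `label`, the fraction predicted as `label`."""
    preds = [p for t, p in zip(y_true, y_pred) if t == label]
    if not preds:
        return None  # undefined when the label never occurs
    return sum(p == label for p in preds) / len(preds)
```

High precision on the non-sensitive class means an article is only waved through when the model is almost never wrong about it, while high recall on the price-sensitive class means few truly sensitive articles slip past.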

Key benefits and results

Over a 6-week period, Surveillance Guide demonstrated remarkable accuracy when evaluated on a representative sample dataset. Key achievements include the following:

- 100% precision in identifying non-sensitive news, allocating 6 articles to this category that analysts confirmed were non price sensitive

- 100% recall in detecting price-sensitive content, allocating 36 hard to determine and 28 price sensitive articles labelled by analysts into one of these two categories (never misclassifying price sensitive content)

- Automated analysis of complex financial news

- Detailed justifications for classification decisions

- Effective triaging of results by sensitivity level

In this implementation, LSEG has employed Amazon Bedrock so that they can use secure, scalable access to foundation models through a unified API, minimizing the need for direct model management and reducing operational complexity. Because of the serverless architecture of Amazon Bedrock, LSEG can take advantage of dynamic scaling of model inference capacity based on news volume, while maintaining consistent performance during market-critical periods. Its built-in monitoring and governance features support reliable model performance and maintain audit trails for regulatory compliance.

Impact on market surveillance

This AI-powered solution transforms market surveillance operations by:

- Reducing manual review time for analysts

- Improving consistency in price-sensitivity assessment

- Providing detailed audit trails through automated justifications

- Enabling faster response to potential market abuse cases

- Scaling surveillance capabilities without proportional resource increases

The system’s ability to process news articles instantly and provide detailed justifications helps analysts focus their attention on the most critical cases while maintaining comprehensive market oversight.

Proposed next steps

LSEG plans to first enhance the solution, for internal use, by:

- Integrating additional data sources, including company financials and market data

- Implementing few-shot prompting and fine-tuning capabilities

- Expanding the evaluation dataset for continued accuracy improvements

- Deploying in live environments alongside manual processes for validation

- Adapting to additional market abuse typologies

Conclusion

LSEG’s Surveillance Guide demonstrates how generative AI can transform market surveillance operations. Powered by Amazon Bedrock, the solution improves efficiency and enhances the quality and consistency of market abuse detection.

As financial markets continue to evolve, AI-powered solutions architected along similar lines will become increasingly important for maintaining integrity and compliance. AWS and LSEG are intent on being at the forefront of this change.

The selection of Amazon Bedrock as the foundation model service provides LSEG with the flexibility to iterate on their solution while maintaining enterprise-grade security and scalability. To learn more about building similar solutions with Amazon Bedrock, visit the Amazon Bedrock documentation or explore other financial services use cases in the AWS Financial Services Blog.

About the authors

Charles Kellaway is a Senior Manager in the Equities Trading team at LSE plc, based in London. With a background spanning both Equity and Insurance markets, Charles specialises in deep market research and business strategy, with a focus on deploying technology to unlock liquidity and drive operational efficiency. His work bridges the gap between finance and engineering, and he always brings a cross-functional perspective to solving complex challenges.

Rasika Withanawasam is a seasoned technology leader with over two decades of experience architecting and developing mission-critical, scalable, low-latency software solutions. Rasika’s core expertise lies in big data and machine learning applications, focusing intently on FinTech and RegTech sectors. He has held several pivotal roles at LSEG, including Chief Product Architect for the flagship Millennium Surveillance and Millennium Analytics platforms, and currently serves as Manager of the Quantitative Surveillance & Technology team, where he leads AI/ML solution development.

Richard Chester is a Principal Solutions Architect at AWS, advising large Financial Services organisations. He has 25+ years’ experience across the Financial Services Industry where he has held leadership roles in transformation programs, DevOps engineering, and Development Tooling. Since moving across to AWS from being a customer, Richard is now focused on driving the execution of strategic initiatives, mitigating risks and tackling complex technical challenges for AWS customers.
