AI Research

China surpasses the West in AI research and talent, but how?

- China leads in AI research, publishing as much as the US, UK, and EU combined.

- It’s also the world’s top AI collaboration partner, while depending far less on others than they depend on it.

Artificial intelligence has become more than a technological trend—it’s now viewed as a national asset. A new report from research analytics firm Digital Science shows that China is pulling far ahead in AI research, outpacing the US, UK, and EU in publication volume, patent filings, and researcher activity.

The report, DeepSeek and the New Geopolitics of AI, written by Digital Science CEO Dr Daniel Hook, draws from the Dimensions research database. It reviews global AI trends from 2000 to 2024, including research output, international partnerships, talent flows, and innovation outcomes. The conclusion is clear: China is now the dominant force in AI research, and it’s widening the gap.

China outpaces global peers in research and citations

Back in 2000, fewer than 10,000 AI papers were published globally. In 2024, that number hit 60,000. But not all growth has been equal. China now produces as much AI research as the US, UK, and EU-27 combined. In terms of research attention, China captured over 40% of global citations in 2024, four times the share of either the US or the EU and 20 times that of the UK.

More importantly, China is building a research ecosystem that doesn’t rely on others. While the US, UK, and EU remain tightly linked in AI collaboration, they are all more dependent on China than China is on them. Only 4% of China’s AI publications in 2024 involved collaborators from these regions. By contrast, 25% of the UK’s AI papers included a co-author from China, making China its top research partner.

Even the US, despite years of political tension and efforts to decouple, maintains its strongest AI research ties with China. These relationships have persisted even as policies such as the Justice Department’s China Initiative and chip export controls aimed to limit such collaboration.

Dr Hook argues that AI has become a key tool of geopolitics, similar to energy or defence capabilities. “AI is no longer neutral,” he writes. “Governments are using it as a strategic asset.”

DeepSeek shows China’s technical independence

China’s development of DeepSeek, a cost-efficient, open-source chatbot released in early 2025, shows how the country is finding workarounds to chip shortages and positioning itself for leadership. DeepSeek was reportedly trained with far less GPU compute than comparable Western models and was released under an MIT license, moves that signal both technical skill and confidence.

Beyond the model itself, DeepSeek is symbolic of something larger. China has built a vast, young, AI-trained population. More than 30,000 AI researchers are currently active in China. Its combined PhD and postdoc base alone is double the size of the entire US AI research population. By comparison, the US has about 10,000 researchers, the EU-27 around 20,000, and the UK roughly 3,000.

What stands out isn’t just scale—it’s structure. China’s AI workforce is overwhelmingly young, with relatively few senior researchers. This suggests China is investing in long-term capacity, rather than relying on a few high-profile experts. It’s also drawing in talent from overseas. The report notes that China is now a net gainer of AI researchers from countries like the US and UK, reversing earlier trends.

Research is translating into innovation

While China’s talent base is growing, its research is also translating into patents and products. The report highlights how China now files nearly ten times more AI-related patents than the US. It’s not just publishing papers—it’s protecting ideas and building businesses around them.

Geographically, China’s approach is different too. Its AI research is spread widely, not just clustered in a few cities. In 2024, 156 institutions in China published more than 50 AI papers each. These included universities, companies, hospitals, and research centres located in places like Beijing, Shanghai, Nanjing, and Guangzhou. The US had 37 such institutions, the EU-27 had 54, and the UK had 19.

This nationwide spread shows that China isn’t betting on a few research hubs—it’s building a broad AI infrastructure that reaches across the country. That could make it harder to disrupt or outcompete.

EU lags, UK punches above its weight

Meanwhile, Europe shows signs of falling behind. While EU countries collaborate well internally, they’re less connected to outside regions and struggle to turn research into patents or startups. Notably, despite France’s high-profile investment in AI since 2018, no French research institution published more than 50 AI papers in 2024. Even top performers like Université de Toulouse fell just short.

The UK, despite its smaller size, continues to punch above its weight in AI visibility. Its research consistently draws more citations than expected for its volume, showing a high attention-per-output ratio. But it, too, is now leaning heavily on China for collaboration.

China’s companies are gaining ground

In terms of corporate involvement, China is also closing in on the US. While US companies still publish more AI papers overall, Chinese companies are gaining fast. The number of research-active companies in China has nearly caught up to the US, suggesting that China’s private sector is becoming a bigger player in AI R&D.

Dr Hook notes that much of US AI research now happens behind closed doors, in private firms like OpenAI. That makes it harder to track and may distort the picture. Even so, the report’s data shows the US is at risk of losing its narrow lead in research-focused AI startups.

The global shift has already happened

The report calls attention to a broader trend: China is not just a competitor—it’s becoming the preferred global connector in AI research. While Western countries still have strong academic networks and commercial pipelines, China’s scale, growth rate, and independence set it apart.

DeepSeek didn’t appear out of nowhere. It reflects years of investment, education, and infrastructure-building. It also signals what may come next—not just more chatbots, but a wave of AI tools built by a large, skilled workforce, increasingly operating on China’s own terms.

In the next decade, the report suggests, the real advantage may go to the countries that can not only attract talent and fund research, but also build systems that let that work reach society. China appears to be doing all three—and doing it faster than anyone else.


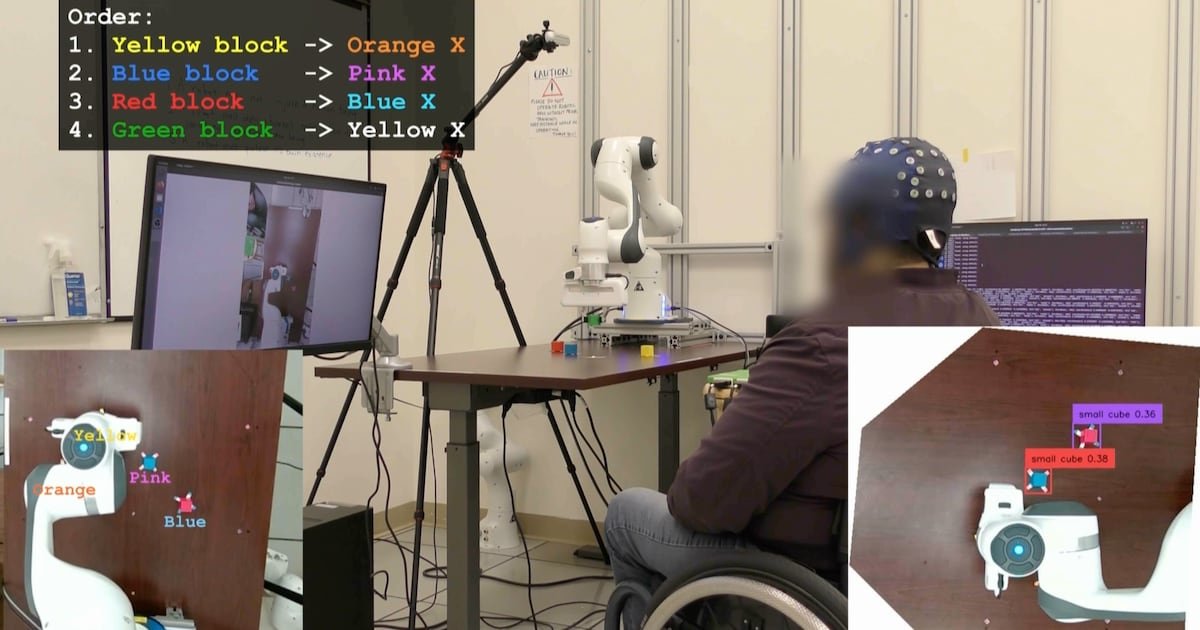

UCLA Researchers Enable Paralyzed Patients to Control Robots with Thoughts Using AI – Chosun Biz


Hackers exploit hidden prompts in AI images, researchers warn

Cybersecurity firm Trail of Bits has revealed a technique that embeds malicious prompts into images processed by large language models (LLMs). The method exploits how AI platforms compress and downscale images for efficiency. While the original files appear harmless, the resizing process introduces visual artifacts that expose concealed instructions, which the model interprets as legitimate user input.

In tests, the researchers demonstrated that such manipulated images could direct AI systems to perform unauthorized actions. One example showed Google Calendar data being siphoned to an external email address without the user’s knowledge. Platforms affected in the trials included Google’s Gemini CLI, Vertex AI Studio, Google Assistant on Android, and Gemini’s web interface.


The approach builds on earlier academic work from TU Braunschweig in Germany, which identified image scaling as a potential attack surface in machine learning. Trail of Bits expanded on this research, creating “Anamorpher,” an open-source tool that crafts malicious images targeting common downscaling methods such as nearest-neighbor, bilinear, and bicubic interpolation.
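To see why downscaling can serve as the attack surface, consider the simplest case, nearest-neighbor resampling, which keeps one source pixel per block and throws the rest away. The Python sketch below is a toy illustration, not Anamorpher's actual method. It assumes grayscale images stored as nested lists, an integer downscale factor, and a resampler that reads the pixel nearest the center of each block (real image libraries differ in their sampling offsets, and bilinear or bicubic targets require solving for weighted neighborhoods rather than single pixels). The helper names `sample_index`, `nn_downscale`, and `embed_payload` are hypothetical.

```python
# Toy illustration of an image-scaling attack on nearest-neighbor downscaling.
# Assumptions (not from the article): grayscale images as nested lists,
# an integer downscale factor, and center-of-block sampling.

def sample_index(i: int, factor: int) -> int:
    """Source row/column that nearest-neighbor sampling reads for output index i."""
    return int((i + 0.5) * factor)


def nn_downscale(img: list[list[int]], factor: int) -> list[list[int]]:
    """Keep one source pixel per factor x factor block; discard the rest."""
    out_h, out_w = len(img) // factor, len(img[0]) // factor
    return [[img[sample_index(i, factor)][sample_index(j, factor)]
             for j in range(out_w)]
            for i in range(out_h)]


def embed_payload(cover: list[list[int]], payload: list[list[int]],
                  factor: int) -> list[list[int]]:
    """Write payload pixels only where nn_downscale will sample (hypothetical helper)."""
    img = [row[:] for row in cover]  # copy so the cover image is left unchanged
    for i, row in enumerate(payload):
        for j, value in enumerate(row):
            img[sample_index(i, factor)][sample_index(j, factor)] = value
    return img


FACTOR = 4
# Hypothetical 4x4 payload: 0 = black "ink", 255 = white background (a crude "H").
payload = [[0, 255, 255, 0],
           [0, 255, 255, 0],
           [0,   0,   0, 0],
           [0, 255, 255, 0]]
cover = [[255] * 16 for _ in range(16)]  # plain white 16x16 image
attacked = embed_payload(cover, payload, FACTOR)

# Count how many full-size pixels differ from the clean cover.
changed = sum(a != c for ra, rc in zip(attacked, cover) for a, c in zip(ra, rc))
print(f"{changed} of {16 * 16} full-size pixels changed")
print("payload revealed by downscaling:", nn_downscale(attacked, FACTOR) == payload)
```

At full size only 10 of the 256 pixels differ from the blank cover, appearing as scattered dark specks, yet the downscaled copy is exactly the hidden glyph. A real attack hides readable text inside a natural-looking image rather than a 4x4 shape, but the principle is the same: what the model receives is the downscaled copy, not the file the user looked at.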

From the user’s perspective, nothing unusual occurs when such an image is uploaded. Yet behind the scenes, the AI system executes hidden commands alongside normal prompts, raising serious concerns about data security and identity theft. Because multimodal models often integrate with calendars, messaging, and workflow tools, the risks extend into sensitive personal and professional domains.


Traditional defenses such as firewalls cannot easily detect this type of manipulation. The researchers recommend a combination of layered security, previewing downscaled images, restricting input dimensions, and requiring explicit confirmation for sensitive operations.

“The strongest defense is to implement secure design patterns and systematic safeguards that limit prompt injection, including multimodal attacks,” the Trail of Bits team concluded.


When AI Freezes Over | Psychology Today

A phrase I’ve often clung to regarding artificial intelligence is one that is also cloaked in a bit of techno-mystery. And I bet you’ve heard it as part of the lexicon of technology and imagination: “emergent abilities.” It’s common to hear that large language models (LLMs) have these curious “emergent” behaviors that are often coupled with linguistic partners like scaling and complexity. And yes, I’m guilty too.

In AI research, this phrase first took off after a 2022 paper that described how abilities seem to appear suddenly as models scale: tasks that a small model fails at completely, a larger model suddenly handles with ease. One day a model can’t solve math problems, the next day it can. It’s an irresistible story of machines having their own little Archimedean “eureka!” moments. It’s almost as if “intelligence” has suddenly switched on.

But I’m not buying into the sensation, at least not yet. A newer 2025 study suggests we should be more careful. Instead of magical leaps, what we’re seeing looks a lot more like the physics of phase changes.

Ice, Water, and Math

Think about water. At one temperature it’s liquid, at another it’s ice. The molecules don’t become something new (they’re always two hydrogens and an oxygen), but the way they organize shifts dramatically. At the freezing point, hydrogen bonds, driven by the partial positive charges on the hydrogen atoms, “set” the molecules into an open lattice. The result is ice, the same ingredients reorganized into a solid that’s curiously less dense than liquid water. And, yes, there’s even a touch of magic in the science as ice floats. But that magic melts when you learn that hydrogen bonding holds the molecules farther apart in the lattice than in the liquid.

The same kind of shift shows up in LLMs and is often mislabeled as “emergence.” In small models, the easiest strategy is positional, where computation leans on word order and simple statistical shortcuts. It’s an easy trick that works just enough to reduce error. But scale things up by using more parameters and data, and the system reorganizes. The 2025 study by Cui shows that, at a critical threshold, the model shifts into semantic mode and relies on the geometry of meaning in its high-dimensional vector space. It isn’t magic, it’s optimization. Just as water molecules align into a lattice, the model settles into a more stable solution in its mathematical landscape.

The Mirage of “Emergence”

That 2022 paper called these shifts emergent abilities. And yes, tasks like arithmetic or multi-step reasoning can look as though they “switch on.” But the model hasn’t suddenly “understood” arithmetic. What’s happening is that semantic generalization finally outperforms positional shortcuts once scale crosses a threshold. Yes, it’s a mouthful. Put simply, the computation shifts from leaning on simple word position in a prompt (as in “the cat in the _____”) to working in a high-dimensional space where semantic associations across thousands of dimensions give the computation far more power.

And those sudden jumps? They’re often illusions. On simple pass/fail tests, a model can look stuck at zero until it finally tips over the line, and then it seems to leap forward. In reality, it was improving step by step all along. The so-called “light-bulb moment” is really just a quirk of how we measure progress. No emergence, just math.
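A toy calculation shows how a pass/fail score can manufacture a jump. The Python sketch below assumes, purely for illustration, that a model gets each of the `k` tokens in an answer right independently with probability `p`, and that the test only gives credit when all `k` are right, so the pass rate is `p ** k`. The per-token accuracies are made-up values rising in steady steps; they are not data from either study, and the function name `exact_match_rate` is hypothetical.

```python
# Toy model: smooth per-token gains vs. an all-or-nothing (exact-match) score.
# Assumption (illustrative only): each answer token is correct independently
# with probability p, so an answer of length k passes with probability p**k.

def exact_match_rate(per_token_acc: float, answer_len: int) -> float:
    """Probability that every one of answer_len tokens is correct."""
    return per_token_acc ** answer_len


ANSWER_LEN = 20  # hypothetical length of, say, a multi-step arithmetic answer
# Hypothetical per-token accuracies for successively larger models.
per_token = [0.50, 0.60, 0.70, 0.80, 0.90, 0.95, 0.99]

for p in per_token:
    score = exact_match_rate(p, ANSWER_LEN)
    print(f"per-token accuracy {p:.2f} -> exact-match score {score:.3f}")
```

Per-token accuracy climbs steadily, but the exact-match score stays near zero (about 0.01 or less) through 0.80 and then climbs to roughly 0.82 at 0.99. Plotted against model size, that looks like a sudden “switch on,” even though nothing discontinuous happened underneath; scoring the same outputs with a graded metric such as per-token accuracy makes the apparent leap disappear.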

Why “Emergence” Is So Seductive

Why does the language of “emergence” stick? Because it borrows from biology and philosophy. Life “emerges” from chemistry, just as consciousness “emerges” from neurons. It makes LLMs sound like they’re undergoing cognitive leaps. Some argue emergence is a hallmark of complex systems, and there’s truth to that. So, to a degree, it does capture the idea of surprising shifts.

But we need to be careful. What’s happening here is still math, not mind. Calling it emergence risks sliding into anthropomorphism, where sudden performance shifts are mistaken for genuine understanding. And it happens all the time.

A Useful Imitation

The 2022 paper gave us the language of “emergence.” The 2025 paper shows that what looks like emergence is really closer to a high-complexity phase change. It’s the same math and the same machinery. At small scales, positional tricks (word sequence) dominate. At large scales, semantic structures (multidimensional linguistic analysis) win out.

No insight, no spark of consciousness. It’s just a system reorganizing under new constraints. And this supports my larger thesis: What we’re witnessing isn’t intelligence at all, but anti-intelligence, a powerful, useful imitation that mimics the surface of cognition without the interior substance that only a human mind offers.


So the next time you hear about an LLM with “emergent ability,” don’t imagine Archimedes leaping from his bath. Picture water freezing. The same molecules, new structure. The same math, new mode. What looks like insight is just another phase of anti-intelligence that is complex, fascinating, even beautiful in its way, but not to be mistaken for a mind.
