AI Research

The West tries to keep up with China in the AI research race

- The US, UK, and EU are still in the AI race, but cracks are showing.

- China leads in output, patents, and talent, while the West leans on global ties.

AI has become a matter of national interest for governments worldwide, with some treating the technology as being on a par with energy or defence. While China gains attention for rapid growth in the space, a closer look shows that the West – namely the US, UK, and EU – is still a major player in AI research, even if the balance is starting to shift.

A recent report by research analytics company Digital Science tracks global AI research trends from 2000 to 2024. Based on data from the Dimensions research database, the report compares research volume, collaboration, talent movement, and innovation output in different regions. The figures show that the West remains influential in AI, but they also point to areas where it’s starting to lose ground.

The US holds ground but faces pressure

The United States still plays a big role in AI development. It maintains the strongest ecosystem for startups and company-led research. While the country no longer leads in the number of academic publications – that title now belongs to China – it continues to drive commercial applications of AI, especially through firms like OpenAI, Google, and Anthropic.

However, much of that research happens behind closed doors. The report notes a drop in publicly available AI research from US firms. As companies focus more on private development, the visibility and academic impact of US-based AI research may become harder to track.

On paper, the US remains the second-largest contributor to AI research by volume. It also plays a central role in international collaboration. But even here, things are changing. China has become the US’s top AI research partner, and that relationship has continued despite rising political tension. Meanwhile, the US is more dependent on these partnerships than China is, since China publishes most of its research without Western involvement.

Patent filings show another area of concern to Western observers. While the US has a strong record of commercialising research, China now files about ten times more AI-related patents. That suggests the US may be publishing quality work but not always turning it into protected innovation.

UK punches above its weight

Despite its smaller size, the UK continues to perform well in AI research impact. It ranks fourth globally in attention-per-output, which measures how often a country’s research is cited relative to how much it publishes: UK research is being noticed, even if it isn’t produced in high volumes.

The UK also has strong AI hubs in London, Cambridge, and Edinburgh, with several institutions consistently publishing high-impact work. But it leans heavily on international partners to maintain that status. China has become its top AI research collaborator – accounting for over 25% of the UK’s collaborative output in 2024, more than the UK shares with the US or EU.

The country’s total number of AI researchers, about 3,000, is modest compared with China’s or the EU’s, but the UK continues to attract talent and attention. The challenge is keeping the momentum going as funding shifts and global competition increases.

EU shows internal strength, external gaps

The EU-27 collectively produces a large volume of AI research, second only to China. Many of its member countries – Germany, Italy, Spain, and the Netherlands – have active research communities. EU institutions also benefit from regional programmes that support collaboration inside individual countries’ borders. That internal cooperation is a strong point.

Where the EU falls short is in visibility and conversion. Its research doesn’t attract as much global attention, and fewer projects result in patents or market-ready solutions. Only a small portion of its research output is internationally cited at high levels. The report suggests that while EU countries are producing a lot of work, they may struggle to get it noticed or applied.

External collaboration is another weak spot. Compared to the US and UK, the EU collaborates with fewer countries outside the region. This limits exposure and reduces the chance for shared development with global leaders in AI.

The EU is also fragmented. While there is internal cooperation, there’s less clarity on a unified AI strategy in the bloc. That leaves some research institutions without the scale or coordination needed to compete with larger players.

Talent is moving, and the West is losing

One of the more striking data points in the report involves international moves by researchers. In the past, Western countries were top destinations for AI talent but that pattern is changing.

China now has a net gain in the number of AI researchers from the US, UK, and EU. In other words, more researchers are moving to China than leaving it. Meanwhile, the West is still drawing in international talent, but it’s losing some of its own experts in return.

The US remains the largest source and destination for AI researchers globally, but its net balance has shrunk. The UK and EU are also sending more talent abroad than they’re getting back. This raises questions about how long the West can maintain its research base without stronger local investment and retention.

Institutions in the West still matter

While the report focuses on national output, it also highlights standout institutions. The US is home to several high-output AI institutions, including top universities and research labs. The UK has 19 institutions that each published over 50 AI papers in 2024. In the EU, that number stands at 54. For comparison, China has 156 such institutions.

The disparity shows that while quality exists in the West, it’s less evenly spread. China’s broader base gives it more resilience and reach. The US and EU have high-performing research centres, but they tend to be more concentrated.

Collaboration patterns are shifting

International collaboration has long been a strength of Western research. The report confirms that trend but adds an important caveat: while the West is collaborating more, it’s also growing more reliant on China.

In 2024, China was the top AI collaborator for the US, UK, and EU. But China does not rely on those same countries to the same extent. Just 4% of China’s AI research included collaborators from the West. That number was 25% for the UK and about 10-12% for the US and EU.

The imbalance suggests that China has positioned itself as a connector – but one that can also work alone. The West, in contrast, depends more on external ties to maintain research output and influence.

Looking ahead

The West is still in the AI race – but it may need to rethink its strategy. While the US, UK, and EU have strong research histories, high-impact institutions, and skilled talent, those advantages are being tested.

As China expands its AI base, builds long-term talent, and dominates in patents, Western nations may need to focus less on volume and more on outcomes. That includes better research-to-product pipelines, more inclusive collaboration networks, and policies that keep talent from drifting elsewhere.

The gap isn’t yet unbridgeable – but the numbers show it’s widening.

AI Research

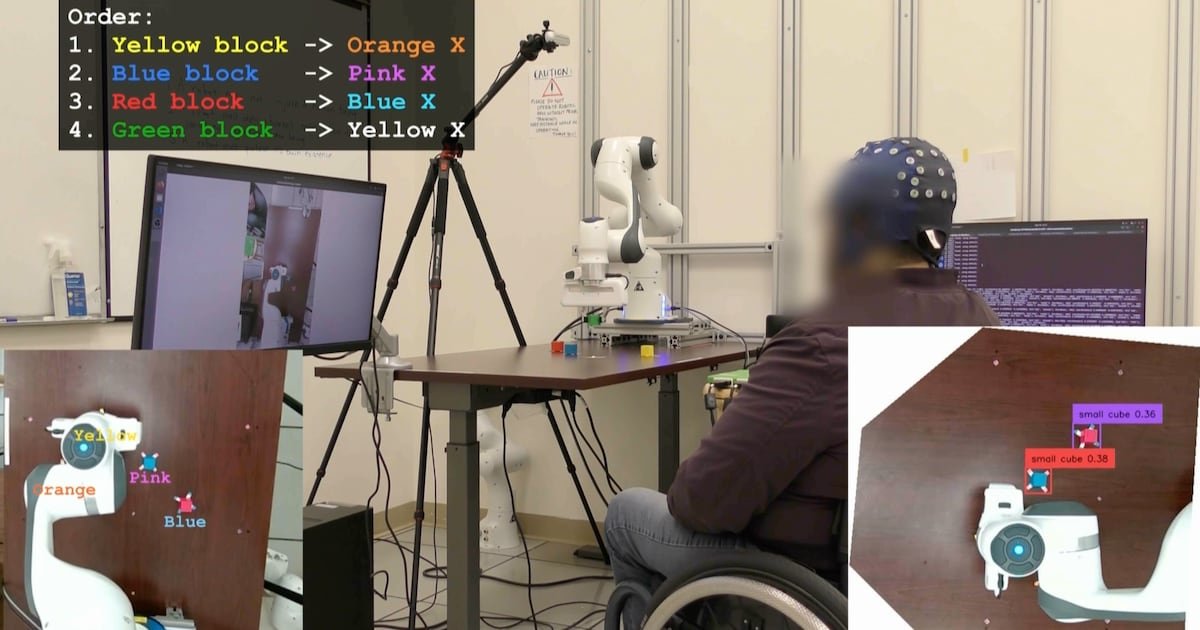

UCLA Researchers Enable Paralyzed Patients to Control Robots with Thoughts Using AI – CHOSUNBIZ – Chosun Biz

Hackers exploit hidden prompts in AI images, researchers warn

Cybersecurity firm Trail of Bits has revealed a technique that embeds malicious prompts into images processed by large language models (LLMs). The method exploits how AI platforms compress and downscale images for efficiency. While the original files appear harmless, the resizing process introduces visual artifacts that expose concealed instructions, which the model interprets as legitimate user input.

In tests, the researchers demonstrated that such manipulated images could direct AI systems to perform unauthorized actions. One example showed Google Calendar data being siphoned to an external email address without the user’s knowledge. Platforms affected in the trials included Google’s Gemini CLI, Vertex AI Studio, Google Assistant on Android, and Gemini’s web interface.

The approach builds on earlier academic work from TU Braunschweig in Germany, which identified image scaling as a potential attack surface in machine learning. Trail of Bits expanded on this research, creating “Anamorpher,” an open-source tool that generates malicious images using interpolation techniques such as nearest neighbor, bilinear, and bicubic resampling.

From the user’s perspective, nothing unusual occurs when such an image is uploaded. Yet behind the scenes, the AI system executes hidden commands alongside normal prompts, raising serious concerns about data security and identity theft. Because multimodal models often integrate with calendars, messaging, and workflow tools, the risks extend into sensitive personal and professional domains.

Traditional defenses such as firewalls cannot easily detect this type of manipulation. The researchers recommend a combination of layered security, previewing downscaled images, restricting input dimensions, and requiring explicit confirmation for sensitive operations.
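
To make the recommendation about previewing downscaled images and restricting input dimensions more concrete, here is a minimal sketch in plain Python. It is an illustration under simplifying assumptions, not the method used by Trail of Bits or Anamorpher: images are small grayscale grids, the downscaler keeps only the top-left pixel of each block (real nearest-neighbor implementations may sample other positions), and the names `downscale_nearest`, `within_limits`, and `build_demo` are hypothetical.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels: 0 is black, 255 is white


def downscale_nearest(img: Image, factor: int) -> Image:
    """Simplified nearest-neighbor downscale: keep the top-left pixel of each
    factor x factor block and discard everything else."""
    return [row[::factor] for row in img[::factor]]


def within_limits(img: Image, max_side: int) -> bool:
    """Reject inputs whose height or width exceeds max_side pixels."""
    return len(img) <= max_side and all(len(row) <= max_side for row in img)


def build_demo(hidden: Image, factor: int) -> Image:
    """Build a mostly white image whose only non-white pixels sit exactly
    where the simplified downscaler above will sample."""
    height = len(hidden) * factor
    width = len(hidden[0]) * factor
    img = [[255] * width for _ in range(height)]
    for y, row in enumerate(hidden):
        for x, value in enumerate(row):
            img[y * factor][x * factor] = value
    return img


# A tiny stand-in for concealed content (hypothetical pattern).
hidden = [[0, 255, 0],
          [255, 0, 255]]

full = build_demo(hidden, factor=4)            # what the user uploads
preview = downscale_nearest(full, factor=4)    # what the model would receive

# Share of dark pixels in the full-size image: small enough to overlook.
dark_fraction = sum(v == 0 for row in full for v in row) / (len(full) * len(full[0]))

print("dark pixels at full size:", dark_fraction)
print("downscaled preview:", preview)
print("within 8-pixel limit:", within_limits(full, max_side=8))
```

In this toy case the full-size image is almost entirely white, yet the downscaled preview reproduces the concealed pattern exactly, which is why showing the user the downscaled version (rather than the original) is a useful check.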

“The strongest defense is to implement secure design patterns and systematic safeguards that limit prompt injection, including multimodal attacks,” the Trail of Bits team concluded.

When AI Freezes Over | Psychology Today

A phrase I’ve often clung to regarding artificial intelligence is one that is also cloaked in a bit of techno-mystery. And I bet you’ve heard it as part of the lexicon of technology and imagination: “emergent abilities.” It’s common to hear that large language models (LLMs) have these curious “emergent” behaviors that are often coupled with linguistic partners like scaling and complexity. And yes, I’m guilty too.

In AI research, this phrase first took off after a 2022 paper that described how abilities seem to appear suddenly as models scale: tasks that a small model fails at completely, a larger model suddenly handles with ease. One day a model can’t solve math problems, the next day it can. It’s an irresistible story, with machines having their own little Archimedean “eureka!” moments. It’s almost as if “intelligence” has suddenly switched on.

But I’m not buying into the sensation, at least not yet. A newer 2025 study suggests we should be more careful. Instead of magical leaps, what we’re seeing looks a lot more like the physics of phase changes.

Ice, Water, and Math

Think about water. At one temperature it’s liquid, at another it’s ice. The molecules don’t become something new (they’re always two hydrogens and an oxygen), but the way they organize shifts dramatically. At the freezing point, hydrogen bonds “loosely set” into a lattice, driven by the partial electrical charges on the hydrogen and oxygen atoms. The result is ice, the same ingredients reorganized into a solid that’s curiously less dense than liquid water. And, yes, there’s even a touch of magic in the science, as ice floats. But that magic melts when you learn about hydrogen bonding.

The same kind of shift shows up in LLMs and is often mislabeled as “emergence.” In small models, the easiest strategy is positional, where computation leans on word order and simple statistical shortcuts. It’s an easy trick that works just enough to reduce error. But scale things up by using more parameters and data, and the system reorganizes. The 2025 study by Cui shows that, at a critical threshold, the model shifts into semantic mode and relies on the geometry of meaning in its high-dimensional vector space. It isn’t magic, it’s optimization. Just as water molecules align into a lattice, the model settles into a more stable solution in its mathematical landscape.

The Mirage of “Emergence”

That 2022 paper called these shifts emergent abilities. And yes, tasks like arithmetic or multi-step reasoning can look as though they “switch on.” But the model hasn’t suddenly “understood” arithmetic. What’s happening is that semantic generalization finally outperforms positional shortcuts once scale crosses a threshold. Yes, it’s a mouthful. Put simply, the computation shifts from relying on simple word position in a prompt (like, the cat in the _____) to working in a complex, high-dimensional space where semantic associations across thousands of dimensions give the computation its strength.

And those sudden jumps? They’re often illusions. On simple pass/fail tests, a model can look stuck at zero until it finally tips over the line and then it seems to leap forward. In reality, it was improving step by step all along. The so-called “light-bulb moment” is really just a quirk of how we measure progress. No emergence, just math.
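
A small numerical sketch can show how a pass/fail metric hides gradual progress. It rests on an illustrative assumption that is not taken from the studies mentioned here: an answer counts as correct only if every one of its tokens is right, and each token is right independently with the same probability. The function name `exact_match_rate` and the answer length of 20 tokens are hypothetical.

```python
def exact_match_rate(per_token_accuracy: float, answer_length: int) -> float:
    """Chance that an all-or-nothing test passes, assuming each of the
    answer's tokens is independently correct with the given probability."""
    return per_token_accuracy ** answer_length


ANSWER_LENGTH = 20  # hypothetical length of a correct answer, in tokens

# Per-token accuracy improves in steady steps...
for accuracy in [0.50, 0.60, 0.70, 0.80, 0.90, 0.95, 0.99]:
    # ...but the pass/fail score stays near zero, then climbs steeply.
    score = exact_match_rate(accuracy, ANSWER_LENGTH)
    print(f"per-token accuracy {accuracy:.2f} -> pass rate {score:.4f}")
```

Under these assumptions the underlying skill rises smoothly while the pass rate sits near zero for most of the range and then shoots up, which is the kind of measurement quirk that can make steady improvement look like a sudden leap.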

Why “Emergence” Is So Seductive

Why does the language of “emergence” stick? Because it borrows from biology and philosophy. Life “emerges” from chemistry, and consciousness “emerges” from neurons. It makes LLMs sound like they’re undergoing cognitive leaps. Some argue emergence is a hallmark of complex systems, and there’s truth to that. So, to a degree, it does capture the idea of surprising shifts.

But we need to be careful. What’s happening here is still math, not mind. Calling it emergence risks sliding into anthropomorphism, where sudden performance shifts are mistaken for genuine understanding. And it happens all the time.

A Useful Imitation

The 2022 paper gave us the language of “emergence.” The 2025 paper shows that what looks like emergence is really closer to a high-complexity phase change. It’s the same math and the same machinery. At small scales, positional tricks (word sequence) dominate. At large scales, semantic structures (multidimensional linguistic analysis) win out.

No insight, no spark of consciousness. It’s just a system reorganizing under new constraints. And this supports my larger thesis: What we’re witnessing isn’t intelligence at all, but anti-intelligence, a powerful, useful imitation that mimics the surface of cognition without the interior substance that only a human mind offers.

So the next time you hear about an LLM with “emergent ability,” don’t imagine Archimedes leaping from his bath. Picture water freezing. The same molecules, new structure. The same math, new mode. What looks like insight is just another phase of anti-intelligence that is complex, fascinating, even beautiful in its way, but not to be mistaken for a mind.
