CNN

The chief executive of the world’s leading chipmaker warned that while artificial intelligence will significantly boost workplace productivity, it could lead to job loss if industries lack innovation.

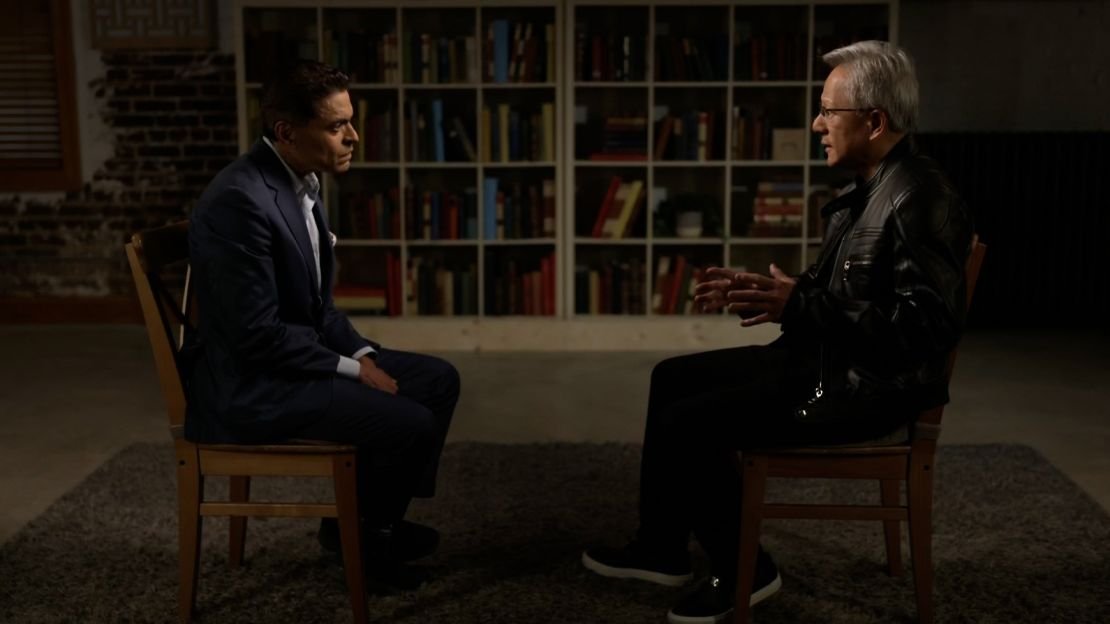

“If the world runs out of ideas, then productivity gains translates to job loss,” said Nvidia CEO Jensen Huang in an interview with CNN’s Fareed Zakaria when asked about comments made by fellow tech leader Dario Amodei, who suggested AI will cause mass employment disruptions.

Amodei, the head of Anthropic, warned last month that the technology could cause a dramatic spike in unemployment in the very near future. He told Axios that AI could eliminate half of entry-level, white-collar jobs and push unemployment as high as 20% in the next five years.

Huang believes that as long as companies come up with fresh ideas, there’s room for productivity and employment to thrive. But without new ambitions, “productivity drives down,” he said, potentially resulting in fewer jobs.

“The fundamental thing is this, do we have more ideas left in society? And if we do, if we’re more productive, we’ll be able to grow,” he said.

The increase in AI investments, which fueled a massive technology boom in recent years, has raised concerns about whether the technology will threaten jobs in the future. Roughly 41% of chief executives have said AI will reduce the number of workers at thousands of companies over the next five years, according to a 2024 survey from staffing firm Adecco Group. A survey released in January from the World Economic Forum showed 41% of employers plan to downsize their workforce by 2030 because of AI automation.

“Everybody’s jobs will be affected. Some jobs will be lost. Many jobs will be created and what I hope is that the productivity gains that we see in all the industries will lift society,” Huang said.

Nvidia, which briefly reached $4 trillion in market value, is among the companies leading the AI revolution. The Santa Clara, California-based chipmaker’s technology has been used to power data centers that companies like Microsoft, Amazon and Google use to operate their AI models and cloud services.

Huang defended the development of AI, saying that “over the course of the last 300 years, 100 years, 60 years, even in the era of computers,” both employment and productivity increased. He added that technological advancements can facilitate the realization of “an abundance of ideas” and “ways that we could build a better future.”

Artificial intelligence is also likely to change the way work is done. More than half of large US firms said they plan to automate tasks previously done by employees, such as paying suppliers or processing invoices, according to a 2024 survey by Duke University and the Federal Reserve Banks of Atlanta and Richmond.

Huang said that even his job has changed as a result of the AI revolution, “but I’m still doing my job.”

Some companies also use AI tools, such as ChatGPT and other chatbots, for creative tasks including drafting job posts and press releases and building marketing campaigns.

“AI is the greatest technology equalizer we’ve ever seen,” said Huang. “It lifts the people who don’t understand technology.”

Fareed Zakaria’s interview with Nvidia CEO Jensen Huang can be seen on “Fareed Zakaria GPS” on Sunday at 10 a.m. ET/PT.