AI Research

Simulation-based pipeline tailors training data for dexterous robots | MIT News

When ChatGPT or Gemini gives what seems to be an expert response to your burning questions, you may not realize how much information it relies on to give that reply. Like other popular generative artificial intelligence (AI) models, these chatbots rely on backbone systems called foundation models that train on billions, or even trillions, of data points.

In a similar vein, engineers are hoping to build foundation models that train a range of robots on new skills like picking up, moving, and putting down objects in places like homes and factories. The problem is that it’s difficult to collect and transfer instructional data across robotic systems. You could teach your system by teleoperating the hardware step-by-step using technology like virtual reality (VR), but that can be time-consuming. Training on videos from the internet is less instructive, since the clips don’t provide a step-by-step, specialized task walk-through for particular robots.

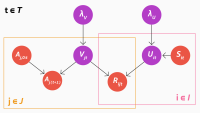

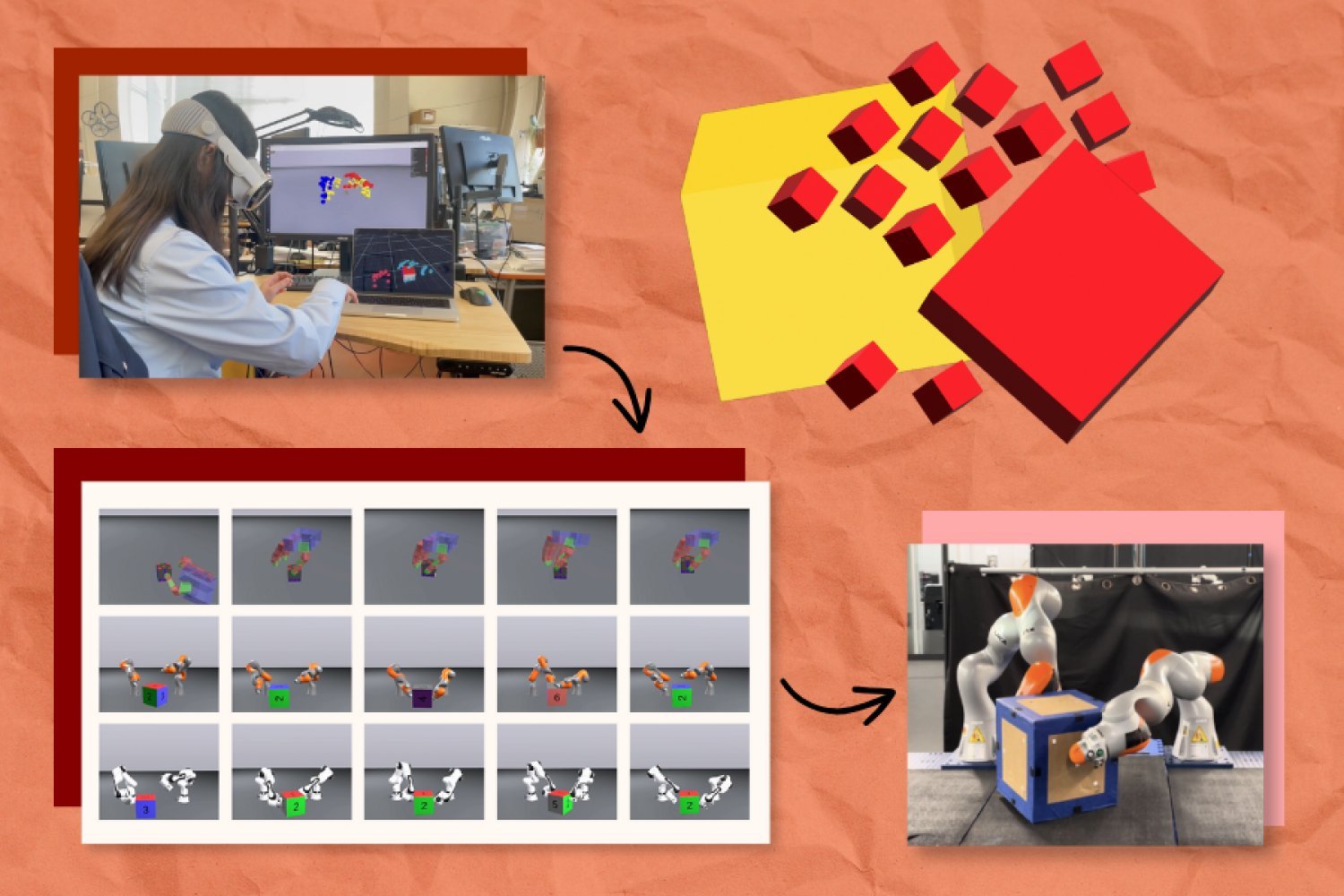

A simulation-driven approach called “PhysicsGen” from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and the Robotics and AI Institute customizes robot training data to help robots find the most efficient movements for a task. The system can multiply a few dozen VR demonstrations into nearly 3,000 simulations per machine. These high-quality instructions are then mapped to the precise configurations of mechanical companions like robotic arms and hands.

PhysicsGen creates data that generalize to specific robots and conditions via a three-step process. First, a VR headset tracks how humans manipulate objects like blocks using their hands. These interactions are mapped in a 3D physics simulator at the same time, visualizing the key points of our hands as small spheres that mirror our gestures. For example, if you flipped a toy over, you’d see 3D shapes representing different parts of your hands rotating a virtual version of that object.

The pipeline then remaps these points to a 3D model of the setup of a specific machine (like a robotic arm), moving them to the precise “joints” where a system twists and turns. Finally, PhysicsGen uses trajectory optimization — essentially simulating the most efficient motions to complete a task — so the robot knows the best ways to do things like repositioning a box.
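To give a feel for what trajectory optimization does with a demonstration, here is a minimal sketch in plain Python. It is not PhysicsGen's method: the cost function (squared acceleration plus a penalty for straying from the demonstration), the gradient-descent solver, and the names `acceleration_cost`, `optimize_trajectory`, `track_weight`, `step`, and `iters` are all assumptions chosen for illustration. The real pipeline works with robot dynamics and contact in a physics simulator, which this toy ignores.

```python
def acceleration_cost(path):
    """Sum of squared second differences: a crude proxy for motion effort."""
    return sum(
        (a - 2 * b + c) ** 2
        for p0, p1, p2 in zip(path, path[1:], path[2:])
        for a, b, c in zip(p0, p1, p2)
    )


def optimize_trajectory(demo, track_weight=0.1, step=0.02, iters=500):
    """Smooth a demonstrated path by gradient descent (hypothetical cost).

    Minimizes acceleration_cost(path) + track_weight * squared distance to
    the demonstration, keeping the first and last waypoints fixed.
    """
    path = [list(p) for p in demo]
    n, dim = len(path), len(path[0])
    for _ in range(iters):
        # Gradient of the acceleration term with respect to every waypoint.
        grad = [[0.0] * dim for _ in range(n)]
        for t in range(n - 2):
            for k in range(dim):
                r = path[t][k] - 2 * path[t + 1][k] + path[t + 2][k]
                grad[t][k] += 2 * r
                grad[t + 1][k] -= 4 * r
                grad[t + 2][k] += 2 * r
        # Add the tracking term and update interior waypoints only.
        for t in range(1, n - 1):
            for k in range(dim):
                grad[t][k] += 2 * track_weight * (path[t][k] - demo[t][k])
                path[t][k] -= step * grad[t][k]
    return path


# A jittery, hand-made "demonstration" of moving from (0, 0) to (4, 0).
demo = [(0, 0), (1, 0.5), (2, -0.4), (3, 0.6), (4, 0)]
smoothed = optimize_trajectory(demo)
print(acceleration_cost(demo), acceleration_cost(smoothed))
```

The demonstration serves as the starting guess, so the optimizer only has to refine a motion that already roughly works. That is the sense in which a human example makes the planning problem easier.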

Each simulation is a detailed training data point that walks a robot through potential ways to handle objects. When implemented into a policy (or the action plan that the robot follows), the machine has a variety of ways to approach a task, and can try out different motions if one doesn’t work.

“We’re creating robot-specific data without needing humans to re-record specialized demonstrations for each machine,” says Lujie Yang, an MIT PhD student in electrical engineering and computer science and CSAIL affiliate who is the lead author of a new paper introducing the project. “We’re scaling up the data in an autonomous and efficient way, making task instructions useful to a wider range of machines.”

Generating so many instructional trajectories for robots could eventually help engineers build a massive dataset to guide machines like robotic arms and dexterous hands. For example, the pipeline might help two robotic arms collaborate on picking up warehouse items and placing them in the right boxes for deliveries. The system may also guide two robots to work together in a household on tasks like putting away cups.

PhysicsGen’s potential also extends to converting data designed for older robots or different environments into useful instructions for new machines. “Despite being collected for a specific type of robot, we can revive these prior datasets to make them more generally useful,” adds Yang.

Addition by multiplication

PhysicsGen turned just 24 human demonstrations into thousands of simulated ones, helping both digital and real-world robots reorient objects.

Yang and her colleagues first tested their pipeline in a virtual experiment where a floating robotic hand needed to rotate a block into a target position. The digital robot executed the task at a rate of 81 percent accuracy by training on PhysicsGen’s massive dataset, a 60 percent improvement from a baseline that only learned from human demonstrations.
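The phrase "60 percent improvement" can be read two ways, and the article does not say which is meant. The short calculation below works out the implied baseline under each reading. The function names are hypothetical, and neither result is a figure reported by the researchers.

```python
def baseline_if_relative(result, improvement):
    """Baseline if the gain is relative: result = baseline * (1 + improvement)."""
    return result / (1 + improvement)


def baseline_if_points(result, improvement):
    """Baseline if the gain is in percentage points: result = baseline + improvement."""
    return result - improvement


print(round(baseline_if_relative(0.81, 0.60), 3))  # 0.506
print(round(baseline_if_points(0.81, 0.60), 3))    # 0.21
```

Under the relative reading, the human-demonstration baseline would have succeeded about half the time. Under the percentage-point reading, it would have succeeded about one time in five.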

The researchers also found that PhysicsGen could improve how virtual robotic arms collaborate to manipulate objects. Their system created extra training data that helped two pairs of robots successfully accomplish tasks as much as 30 percent more often than a purely human-taught baseline.

In an experiment with a pair of real-world robotic arms, the researchers observed similar improvements as the machines teamed up to flip a large box into its designated position. When the robots deviated from the intended trajectory or mishandled the object, they were able to recover mid-task by referencing alternative trajectories from their library of instructional data.
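One simple way to picture recovery from a library of trajectories is a nearest-neighbor lookup: find the stored waypoint closest to where the robot currently is, then follow that trajectory from there. The sketch below illustrates only that idea. The article describes a trained policy, not an explicit lookup, and the names `nearest_waypoint` and `recovery_plan` and the 2D toy states are assumptions.

```python
import math


def nearest_waypoint(library, state):
    """Return (trajectory index, waypoint index) of the stored waypoint closest to `state`."""
    best = None
    for i, trajectory in enumerate(library):
        for j, waypoint in enumerate(trajectory):
            distance = math.dist(waypoint, state)
            if best is None or distance < best[0]:
                best = (distance, i, j)
    return best[1], best[2]


def recovery_plan(library, state):
    """Remaining waypoints of the trajectory that passes closest to `state`."""
    i, j = nearest_waypoint(library, state)
    return library[i][j:]


# Two hypothetical ways to reach (2, 0); the robot has drifted to (0.9, 0.8).
library = [
    [(0, 0), (1, 0), (2, 0)],
    [(0, 1), (1, 1), (2, 0)],
]
print(recovery_plan(library, (0.9, 0.8)))  # [(1, 1), (2, 0)]
```

The more varied the stored trajectories, the more likely one of them passes near any state the robot drifts into.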

Senior author Russ Tedrake, who is the Toyota Professor of Electrical Engineering and Computer Science, Aeronautics and Astronautics, and Mechanical Engineering at MIT, adds that this imitation-guided data generation technique combines the strengths of human demonstration with the power of robot motion planning algorithms.

“Even a single demonstration from a human can make the motion planning problem much easier,” says Tedrake, who is also a senior vice president of large behavior models at the Toyota Research Institute and CSAIL principal investigator. “In the future, perhaps the foundation models will be able to provide this information, and this type of data generation technique will provide a type of post-training recipe for that model.”

The future of PhysicsGen

Soon, PhysicsGen may be extended to a new frontier: diversifying the tasks a machine can execute.

“We’d like to use PhysicsGen to teach a robot to pour water when it’s only been trained to put away dishes, for example,” says Yang. “Our pipeline doesn’t just generate dynamically feasible motions for familiar tasks; it also has the potential of creating a diverse library of physical interactions that we believe can serve as building blocks for accomplishing entirely new tasks a human hasn’t demonstrated.”

Creating lots of widely applicable training data may eventually help build a foundation model for robots, though MIT researchers caution that this is a somewhat distant goal. The CSAIL-led team is investigating how PhysicsGen can harness vast, unstructured resources — like internet videos — as seeds for simulation. The goal: transform everyday visual content into rich, robot-ready data that could teach machines to perform tasks no one explicitly showed them.

Yang and her colleagues also aim to make PhysicsGen even more useful for robots with diverse shapes and configurations in the future. To make that happen, they plan to leverage datasets with demonstrations of real robots, capturing how robotic joints move instead of human ones.

The researchers also plan to incorporate reinforcement learning, where an AI system learns by trial and error, to make PhysicsGen expand its dataset beyond human-provided examples. They may augment their pipeline with advanced perception techniques to help a robot perceive and interpret its environment visually, allowing the machine to analyze and adapt to the complexities of the physical world.

For now, PhysicsGen shows how AI can help us teach different robots to manipulate objects within the same category, particularly rigid ones. The pipeline may soon help robots find the best ways to handle soft items (like fruits) and deformable ones (like clay), but those interactions aren’t easy to simulate yet.

Yang and Tedrake wrote the paper with two CSAIL colleagues: co-lead author and MIT PhD student Hyung Ju “Terry” Suh SM ’22 and MIT PhD student Bernhard Paus Græsdal. Robotics and AI Institute researchers Tong Zhao ’22, MEng ’23, Tarik Kelestemur, Jiuguang Wang, and Tao Pang PhD ’23 are also authors. Their work was supported by the Robotics and AI Institute and Amazon.

The researchers recently presented their work at the Robotics: Science and Systems conference.


School Cheating: Research Shows AI Has Not Increased Its Scale

Changes in Learning: Cheating and Artificial Intelligence

When reading the news, one gets the impression that all students use artificial intelligence to cheat in their studies. Headlines in newspapers such as The Wall Street Journal or the New York Times often mention ‘cheating’ and ‘AI’. Many stories, similar to a publication in New York Magazine, describe students who openly testify about using generative AI to complete assignments.

With the rise of such headlines, it seems that education is under threat: traditional exams, readings, and essays appear to be rife with AI-enabled cheating. In the worst cases, students use tools like ChatGPT to write entire assignments.

This seems frustrating, but it is only part of the story.

Cheating has always existed. As an educational researcher studying cheating with AI, I can say that preliminary data indicate that AI has changed how students cheat, but not how much.

Our early data suggest that AI has changed the method, but not necessarily the scale of cheating that was already taking place.

This does not mean that cheating using AI is not a serious problem. Important questions are raised: Will cheating increase in the future due to AI? Is the use of AI in education cheating? How should parents and schools respond to prepare children for a life that is significantly different from our experience?

The Pervasiveness of Cheating

Cheating has existed for a very long time, probably since the creation of educational institutions. In the 1990s and 2000s, Don McCabe, a business school professor at Rutgers University, recorded high levels of cheating among students. One of his studies showed that up to 96% of business students admitted to engaging in ‘cheating behavior’.

McCabe used anonymous surveys in which students indicated how often they engaged in cheating. This approach revealed high cheating rates, which varied from 61.3% to 82.7% before the pandemic.

Cheating in the AI Era

Has cheating using AI increased? Analyzing data from more than 1,900 students at three schools before and after the introduction of ChatGPT, we found no significant changes in cheating behavior. In particular, 11% of students used AI to write their papers.

Our work showed that AI is becoming a popular tool for cheating, but many questions remain to be explored. For example, in 2024 and 2025, we studied the behavior of another 28,000 to 39,000 students, of whom 15% admitted to using AI to create their work.

Challenges of Using AI

Students are accustomed to using AI but understand that there are boundaries between acceptable and unacceptable use. Reports indicate that many use AI to avoid doing homework or to gain ideas for creative work.

Students feel that their teachers use AI, and many consider it unfair when they are punished for using AI in education.

What Will AI Use Mean for Schools?

The modern education system was not designed with generative AI in mind. Traditionally, a completed assignment has been seen as the result of intensive work, but now the line between a student's own work and AI-assisted work is increasingly blurred.

It is important to understand what the main reasons for cheating are and how cheating relates to stress, time management, and the curriculum. Protecting students from cheating is important, but ways of teaching and the use of AI in classrooms also need to be rethought.

Four Future Questions

AI has not caused cheating in educational institutions but has only opened new possibilities. Here are questions worth considering:

- Why do students resort to cheating? The stress of studying may lead them to seek easier solutions.

- Do teachers adhere to their rules? Hypocrisy in demands on students can shape false perceptions of AI use in education.

- Are the rules concerning AI clearly stated? Determining the acceptability of AI use in education may be vague.

- What is important for students to know in a future rich in AI? Educational methods must be adapted to the new reality in a timely way.

The future of education in the age of AI requires an open dialogue between teachers and students. This will allow for the development of new skills and knowledge necessary for successful learning.


