Ethics & Policy

Environmental Factor – April 2025: Synthetic data created by generative AI poses ethical challenges

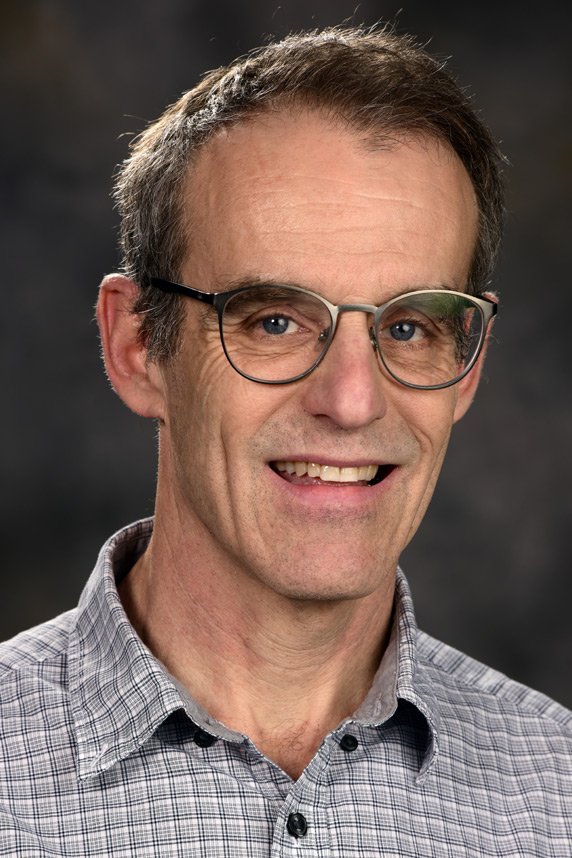

Scientists have been generating and using synthetic data for more than 60 years, according to David Resnik, J.D., Ph.D., a bioethicist at NIEHS. This mock data mimics real-world data but does not come from actual measurements or observations. With rapid advances in machine learning and generative artificial intelligence (GenAI) systems, such as ChatGPT, the use of synthetic data in research has grown.

Resnik and co-authors explore the ethical implications of the rise in GenAI synthetic data in an opinion piece published March 4 in the Proceedings of the National Academy of Sciences. Environmental Factor recently spoke with Resnik about what makes synthetic data generated by AI different, the challenges scientists now face, and steps the scientific community can take to safeguard research data.

Environmental Factor (EF): How can synthetic data benefit environmental health research?

David Resnik: One valuable use of synthetic data, whether produced by GenAI or another process, is for modeling phenomena. Let’s say you have a hypothesis or theory in environmental science. You can generate some synthetic data to test it before conducting a real field study. The resulting model can tell you how the testing process is likely to work and help you decide whether the study is worth pursuing.

Another potential use, though not common at NIEHS, is creating a digital twin of a person. This is a model that mimics a person’s data — like their height, weight, and other characteristics — but doesn’t include enough details to identify them. This allows for sharing data without privacy concerns. Digital twins can be useful for modeling, hypothesis testing, and developing theories.

EF: What made you want to write this opinion piece now?

Resnik: The potential for research misconduct, combined with the ability of GenAI to create highly realistic fake data, makes for a ticking time bomb. We could see lots of fake data infiltrating the scientific community if we’re not proactively trying to stop it.

While research misconduct is rare, with some estimates saying it affects only about 1-2% of research, even that small rate is extremely destructive and can harm the trust people have in the scientific process and the institutions involved.

EF: What concerns you about synthetic data generated by AI?

Resnik: I have two main concerns. The first is accidental misuse, where synthetic GenAI data is mistakenly treated as real data, which could corrupt the research record. One way to deal with that is to watermark synthetic GenAI data so that everyone knows what it is and doesn’t treat it as real data. However, that might not be enough, because labels can be overlooked. Although most journals and databases clearly mark retracted papers, scientists often continue to cite them long after they’ve been retracted, either because they don’t notice the retractions or because they ignore them.

The second concern is the potential for deliberate misuse, where people intentionally fabricate or falsify data, passing it off as real without revealing that it’s synthetic. That’s a much harder problem to deal with. We have tools to detect plagiarism, AI-generated writing, and AI-generated images. But detection is getting harder. There’s essentially a race unfolding between computer scientists developing systems to detect synthetic GenAI data and those developing ways to evade these tools.

EF: How else can scientists address the ethical challenges of synthetic GenAI data?

Resnik: The ultimate thing we have to keep in mind is that no technical solution is ever going to be perfect. I think it would be helpful if journals, funding agencies, or academic institutions developed some guidelines, starting with a basic definition of synthetic data and its acceptable uses. And we could always ask scientists to sign an honor code certifying that all the data they are publishing is real.

But the only thing we really have to fall back on is ethics. We just have to teach people to do the right thing, even when this technology is available to them.

NIEHS has maintained a strong commitment to training people in the responsible conduct of research, which is necessary to protect the integrity of research data today and in the future.

(Marla Broadfoot, Ph.D., is a contract writer for the NIEHS Office of Communications and Public Liaison.)

Vatican Hosts Historic “Grace for the World” Concert and AI Ethics Summit

Crowds gather in St. Peter’s Square for the concert ‘Grace for the World,’ co-directed by Andrea Bocelli and Pharrell Williams, as part of the World Meeting on Human Fraternity aimed at promoting unity, in the Vatican, September 13, 2025. REUTERS/Ciro De Luca

According to CNN

A historic concert titled “Grace for the World” took place in the Vatican, bringing world pop stars together in St. Peter’s Square for the first time. The event featured John Legend, Teddy Swims, Karol G, and other stars, and was broadcast by CNN and ABC News. The concert was part of the Third World Meeting on Human Fraternity and was open to everyone.

During the event, performances spanning various genres graced the stage. Among the participants were Thai rapper Bambam from GOT7, Black Eyed Peas frontman Will.i.am, and American singer Pharrell Williams. Between performances, Vatican cardinals addressed the audience with calls to remain humane and to uphold mutual respect among people.

Key Moments of the Event

Within the framework of the Third World Meeting on Human Fraternity, participants also discussed artificial intelligence and the ethical regulation of its use. The summit participants emphasized the need to establish international norms and governance systems for AI to ensure the safety of societies. Leading experts joined the discussion: Geoffrey Hinton, known as the “godfather of artificial intelligence”; Max Tegmark of the Massachusetts Institute of Technology; Jimena Sofía Viveros Álvarez; and Marco Trombetti, founder of Translated. Pope Leo XIV also took part and reaffirmed his predecessor’s call for a single international agreement on the use of artificial intelligence.

“to define local and international pathways for developing new forms of social charity and to see the image of God in the poor, refugees, and even adversaries.”

Participants also discussed the risk of a digital divide between countries with access to AI and those without it. They urged concrete local and international initiatives to develop new forms of social charity and to support the most vulnerable segments of the population.

Pet Dog Joins Google’s Gemini AI Retro Photo Trend! Internet Can’t Get Enough

Beautiful retro pictures of people in breathtaking ethnic wear, posed in front of an aesthetically pleasing wall in golden-hour light, are all over social media right now. A new trend has arrived in ‘internet town,’ and it is spreading fast. For those not aware, netizens are using Google’s Gemini AI to create retro versions of themselves, and social media is full of the results. However, when this pet dog joined the bandwagon, many instantly declared the furry one the winner, and for obvious reasons. The video showed the trend applied to the pet dog, with heartwarming results: the AI-generated pictures showed the pup draped in multiple dupattas, its ears styled like the perfect hairdo any pet owner could ask for. Most netizens loved the video, and some said they wanted to try the same on their own pets. Times Now could not confirm the authenticity of the post.

Image source: Jinnie Bhatt/Instagram

Morocco Signs Deal to Build National Responsible AI Platform

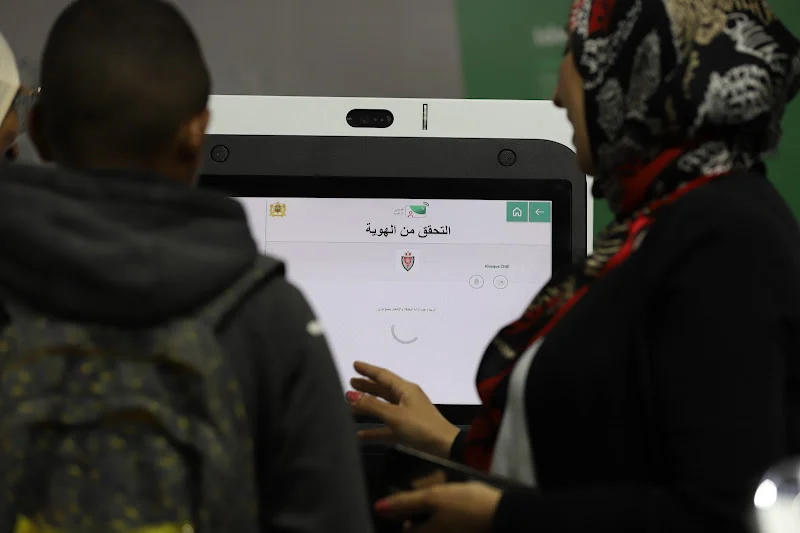

Morocco’s Ministry of Digital Transition and Administrative Reform signed an agreement Thursday with the National Commission for the Control of Personal Data Protection (CNDP) to develop a national platform for responsible artificial intelligence.

The deal, signed in Rabat by Minister Delegate Amale Falah and CNDP President Omar Seghrouchni, will guide the design of large language models tailored to Morocco’s language, culture, legal framework, and digital identity.

Officials said the initiative will provide citizens, businesses, and government agencies with safe generative AI tools that protect fundamental rights. The ministry called the agreement a “strategic step” toward AI sovereignty, ethics, and responsibility, positioning Morocco as a digital leader in Africa and globally.
