AI Insights

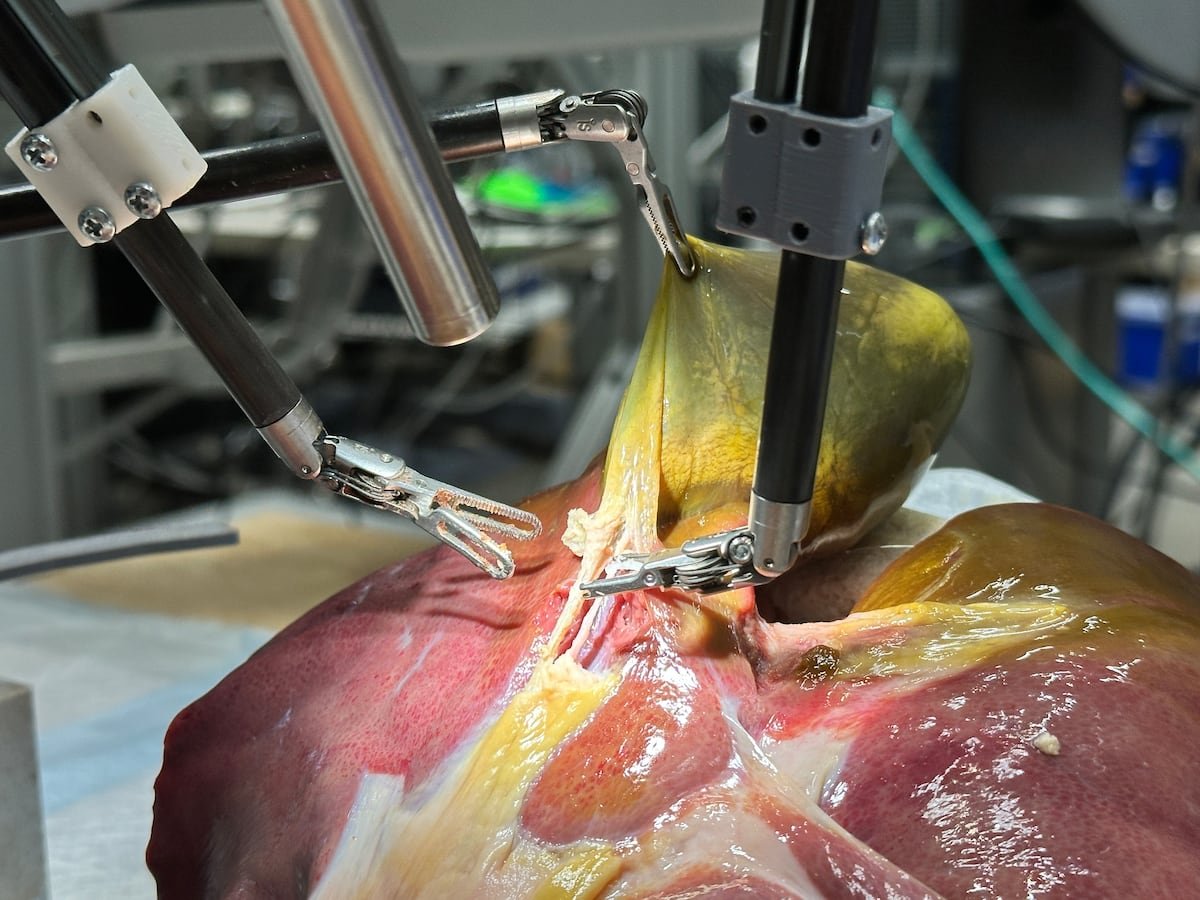

A robot shows that machines may one day replace human surgeons

Nearly four decades ago, the Defense Advanced Research Projects Agency (DARPA) and NASA began promoting projects that would make it possible to perform surgery remotely, whether on the battlefield or in space. Out of those initial efforts emerged robotic surgical systems like Da Vinci, which function as an extension of the surgeon, allowing them to carry out minimally invasive procedures using remote controls and 3D vision. But this still involves a human using a sophisticated tool. Now, the integration of generative artificial intelligence and machine learning into the control of systems like Da Vinci is bringing autonomous surgical robots closer to reality.

This Wednesday, the journal Science Robotics published the results of a study conducted by researchers at Johns Hopkins and Stanford universities, in which they present a system capable of autonomously performing several steps of a surgical procedure, learning from videos of humans operating and receiving commands in natural language — just like a medical resident would.

Much as a person learns, the robot has been taught the steps of a surgery gradually. Last year, the Johns Hopkins team, led by Axel Krieger, trained the robot to perform three basic surgical tasks: handling a needle, lifting tissue, and suturing. This training relied on imitation and a machine learning system similar to the one behind ChatGPT, but instead of words and text, it uses a robotic language that translates the angles of the machine’s movements into mathematical data.
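The article describes this “robotic language” only loosely. One common way to let a ChatGPT-style model handle robot motion is to discretize continuous joint angles into a fixed vocabulary of integer tokens. The sketch below assumes angles in radians within [-π, π] and 256 uniform bins; the bin count, the range, and the function names are illustrative assumptions, not details of the Johns Hopkins system.

```python
import math
from typing import List


def angles_to_tokens(angles: List[float], num_bins: int = 256,
                     low: float = -math.pi, high: float = math.pi) -> List[int]:
    """Map each joint angle (radians) to an integer token in [0, num_bins - 1].

    Illustrative assumption: uniform bins over [low, high]; not the study's scheme.
    """
    width = (high - low) / num_bins
    tokens = []
    for angle in angles:
        clipped = min(max(angle, low), high)      # keep out-of-range angles on the grid
        index = int((clipped - low) / width)
        tokens.append(min(index, num_bins - 1))   # the upper edge falls in the last bin
    return tokens


def tokens_to_angles(tokens: List[int], num_bins: int = 256,
                     low: float = -math.pi, high: float = math.pi) -> List[float]:
    """Decode tokens back to angles, using the centre of each bin."""
    width = (high - low) / num_bins
    return [low + (token + 0.5) * width for token in tokens]


# One timestep of a hypothetical 3-joint arm, encoded and decoded.
pose = [-math.pi, 0.0, math.pi]
tokens = angles_to_tokens(pose)
print(tokens)  # [0, 128, 255]
print(tokens_to_angles(tokens))
```

Decoding returns bin centres, so each round trip is accurate to within half a bin (about 0.012 rad with these settings); a sequence model then predicts the next token much as a language model predicts the next word.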

In the new experiment, two experienced surgeons demonstrated gallbladder removals on pig organs that had been taken out of the animal. They used 34 gallbladders to collect 17 hours of data and 16,000 trajectories, from which the machine learned. Afterward, working without human intervention on eight gallbladders it had not seen before, the robot carried out tasks among the 17 required to remove the organ with a 100% success rate, such as identifying certain ducts and arteries, grasping them precisely, strategically placing clips, and cutting with scissors. During the experiments, the model was able to correct its own mistakes and adapt to unforeseen situations.

In 2022, this same team performed the first autonomous robotic surgery on a live animal: a laparoscopy on a pig. But that robot needed the tissue to carry special markers, worked in a controlled environment, and followed a pre-established surgical plan. In a statement from his institution, Krieger compared it to teaching a robot to drive a carefully mapped-out route. For the robot, the new experiment is like driving on a road it has never seen before, relying only on a general understanding of how to drive a car.

José Granell, head of the Department of Otolaryngology and Head and Neck Surgery at HLA Moncloa University Hospital and professor at the European University of Madrid, believes that the Johns Hopkins team’s work “is beginning to approach something that resembles real surgery.”

“The problem with robotic surgery on soft tissue is that biology has a lot of intrinsic variability, and even if you know the technique, in the real world there are many possible scenarios,” explains Granell. “Asking a robot to carve a bone is easy, but with soft tissue, everything is more difficult because it moves. You can’t predict how it will react when you push, how much it will move, whether an artery will tear if you pull too hard,” continues the surgeon, adding: “This technology changes the way we train the sequence of gestures that constitute surgery.”

For Krieger, this advancement takes us “from robots that can perform specific surgical tasks to robots that truly understand surgical procedures.” The team leader behind this innovation, made possible by generative AI, argues: “It’s a crucial distinction that brings us significantly closer to clinically viable autonomous surgical systems, capable of navigating the messy and unpredictable reality of real-life patient care.”

Francisco Clascá, professor of Human Anatomy and Embryology at the Autonomous University of Madrid, welcomes the study, but points out that “it’s a very simple surgery” and is performed on organs from “very young animals, which don’t have the level of deterioration and complications of a 60- or 70-year-old person, which is when this type of surgery is typically needed.” Furthermore, the robot is still much slower than a human performing the same tasks.

Mario Fernández, head of the Head and Neck Surgery department at the Gregorio Marañón General University Hospital in Madrid, finds the advance interesting, but believes that replacing human surgeons with machines “is a long way off.” He also cautions against being dazzled by technology without fully understanding its real benefits, and points out that its high cost means it won’t be accessible to everyone.

“I know a hospital in India, for example, where they have a robot and can perform two surgical sessions per month, operating on two patients in each. That’s a total of 48 per year. For them, robotic surgery may be a way to practice and learn, but it’s not a reality for the patients there,” says Fernández. He believes we should appreciate technological progress, but that surgery must be judged by what it actually delivers to patients. As a contrasting example, he points out that “a technique called transoral ultrasonic surgery, which was developed in Madrid and is available worldwide, is performed on six patients every day.”

Krieger believes that their proof of concept shows it’s possible to perform complex surgical procedures autonomously, and that their imitation learning system can be applied to more types of surgeries — something they will continue to test with other interventions.

Looking ahead, Granell points out that, beyond overcoming technical challenges, the adoption of surgical robots will be slow because in surgery, “we are very conservative about patient safety.”

He also raises philosophical questions, such as how to get past Isaac Asimov’s First and Second Laws of Robotics: “A robot may not injure a human being or, through inaction, allow a human being to come to harm,” and “A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.” Granell highlights the apparent contradiction that human surgeons “do cause harm, but in pursuit of the patient’s benefit; and this is a dilemma that [for a robot] will have to be resolved.”



Why the Next Winners in the AI Boom May Not Be AI Stocks

As my colleague Kenneth Lamont recently wrote, artificial intelligence is the “defining investment theme of our era.” The most well-known beneficiary is Nvidia (NVDA), which became the world’s first $4 trillion company by enabling the technology. Less obvious winners include Vertiv (VRT), an industrial business that supplies AI data centers.

But ever since ChatGPT burst onto the scene nearly three years ago, traditional growth stocks have captured most of the market spoils. Morningstar’s broad US growth index has outperformed its value counterpart by a wide margin since late 2022. That’s not to say value hasn’t had its moments. During pullbacks in the fourth quarter of 2024 and from February through April 2025, value stocks held up best. When AI enthusiasm resumed, however, growth pulled ahead.

Will the value side of the market ever make a sustained run? Growth stock dominance in the US really goes back more than 10 years—well before AI enthusiasm took hold (internationally, it’s a different story). Periods of value resurgence, like 2016 and 2022, look like aberrations in retrospect. Value investors can be forgiven for capitulating.

It’s worth remembering, though, that change is the only constant in markets. The stocks, sectors, and styles that triumphed in the past are rarely future leaders. Turning points are only obvious in retrospect.

Catalysts for market rotations are also hard to identify in advance. That’s why I was struck by the prediction Vanguard chief economist Joe Davis made during a recent interview on Morningstar’s The Long View podcast. Davis thinks AI is likely to boost economic growth and that its stock market impact will be greatest on the value side of the market. “[I]f you’re the most bullish on AI, you actually want to invest outside of the Mag 7 and technology sphere, because it’s going to be that transformational. I’m not picking on those companies at all. I’m talking about the second half of the chessboard.”

I asked him to elaborate.

The Best AI Opportunities Today Aren’t AI Stocks

Lefkovitz: Joe, you made a comment earlier that I wanted to come back to, about value stocks and how they might be a surprise winner from AI. Could you lay that out a little more?

Davis: This was a surprise. I didn’t know this, and it’s not infallible like the motions of the tides: if the tide is going out, it’s definitely coming back in. But I think the odds are tilted that way. What surprised me is that, stylistically, and very loosely, there are two phases to a technology cycle. First, you have to know that you’re actually in a transformative technology cycle. Did I know in 1992 that the personal computer was transformative? I know now, but did I really know in 1992? Probably not. Our data-driven framework would have given you a modest sense of it in real time in 1992, with uncertainty, because of the signals it picks up. Today, it says we’re very likely in an extended technology cycle, which means a general-purpose technology is likely to emerge.

Now, I wish we had hundreds of examples of periods when this happens. Dan, we just don’t. You have electricity, you have the combustion engine. And people, even economists, debate what a general-purpose technology is. Just because we use something a lot doesn’t mean it lifted everyone and fundamentally changed society. The microwave oven, for example, is a new technology, but it’s not a general-purpose technology. However, we are in such a cycle, and our framework says it’s more likely than not that AI is a general-purpose technology. What I was surprised to find is that there are two phases to the technology cycle. The first phase is what I call the production phase: the technology is starting to spread, there’s massive investment in the space, and a lot of new businesses are formed trying to produce the technology. With the personal computer, it was hardware, software, and dial-up internet. I’ll use that as an example because it makes it tangible.

Some will say a bubble emerges. I don’t know, and I don’t want to make that claim. That’s really almost immaterial to the second half. What emerges in the second half of the investment cycle is what surprised me, and it gets to your question, Dan. If this technology is as transformational as we think it is, it starts to benefit companies through higher earnings, productivity, and new products that use the technology as a platform. I’ll give you two examples.

With the personal computer, I now know with the benefit of hindsight, things such as online shopping emerged: companies that sell it all. Books and music, I don’t know, ended up being 4% of the company. I’m trying not to use company names, but you can think of the jungle: Amazon (AMZN) emerged. But technically, by the letter of the law, that was not a technology company. It was a consumer staple. With electricity, guess what powered the assembly line? Two winners emerged: Ford Motor Company (F) and General Motors (GM). Electricity didn’t lead to their profitability, but without those disruptive technologies, I don’t think we’d be talking about those companies today. The technology spread to sectors outside of electricity on the one hand and computers on the other. That’s how technology works. And if it’s not that transformative, then it hasn’t lifted growth, and it’s a dud to begin with.

Why Value Stocks Should Be Long-Term Winners in the AI Era

That’s what surprised me. If this plays out, and you have to give it five or seven years, the irony is that parts of the investing universe outside the tech sector don’t have the multiples that the Magnificent Seven (Alphabet, Amazon.com, Apple, Meta Platforms, Microsoft, Nvidia, and Tesla) or technology stocks in general have. I’m not saying those companies aren’t delivering value. I just said that AI is likely to be as transformative as the personal computer. That’s pretty high praise. But it means that if AI is truly this transformational, other opportunities emerge, and that’s where it pushes you at the margin, given the multiples in value stocks and outside of the United States. It’s not being skeptical of technology. Quite the contrary. It’s saying that if this thing has legs, it’s going to spider-web out beyond Silicon Valley.

Morningstar, Inc., licenses indexes to financial institutions as the tracking indexes for investable products, such as exchange-traded funds, sponsored by the financial institution. The license fee for such use is paid by the sponsoring financial institution based mainly on the total assets of the investable product. A list of ETFs that track a Morningstar index is available via the Capabilities section at indexes.morningstar.com. A list of other investable products linked to a Morningstar index is available upon request. Morningstar, Inc., does not market, sell, or make any representations regarding the advisability of investing in any investable product that tracks a Morningstar index.


Exclusive: Ex-Google DeepMinders’ algorithm-making AI company gets $5 million in seed funding

Two former Google DeepMind researchers who worked on the company’s Nobel Prize-winning AlphaFold protein structure prediction AI as well as its AlphaEvolve code generation system have launched a new company, with the mission of democratizing access to advanced algorithms.

The company, which is called Hiverge, emerged from stealth today with $5 million in seed funding, led by Flying Fish Ventures with participation from Ahren Innovation Capital and Alpha Intelligence Capital. Legendary coder and Google chief scientist Jeff Dean is also an investor in the startup.

The company has built a platform it calls “Hive” that uses AI to generate and test novel algorithms for vital business processes, from product recommendations to delivery routing, automatically optimizing them. While large companies that can afford their own data science and machine learning teams sometimes develop bespoke algorithms, this capability has been out of reach for most small and medium-sized businesses. Smaller firms have often had to rely on off-the-shelf software with pre-built algorithms that may not suit their particular business and data.

The Hive system also promises to discover unusual algorithms that may produce better results than human data scientists could reach through intuition or trial and error, Alhussein Fawzi, the company’s cofounder and CEO, told Fortune. “The idea behind Hiverge is really to empower those companies with best-in-class algorithms,” he said.

“You can apply [the Hive] to machine learning algorithms, and then you can apply it to planning algorithms,” Fawzi explained. “These are the two things that are, in terms of algorithms, quite different, yet it actually improves on both of them.”

At Google DeepMind, Fawzi had led the team that in 2022 developed its AlphaTensor AI, which discovered new ways to do matrix multiplication, a fundamental mathematical process for training and running neural networks and many other computer applications. The following year, Fawzi and the team developed FunSearch, a method that used large language models to generate new coding approaches and then used an automated evaluator to weed out erroneous solutions.
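FunSearch’s core idea, as described above, is to pair a generator with an automated evaluator. The sketch below mimics that loop on a toy problem: recovering the function 2x + 2 from input/output examples. A hypothetical `propose_candidate` function, which randomly rewrites the best program so far, stands in for the large language model, and the evaluator discards any candidate that crashes before scoring the rest. It is a minimal illustration of generate-and-evaluate search under those assumptions, not DeepMind’s or Hiverge’s code.

```python
import random
from typing import List, Optional, Tuple

# Hypothetical stand-in for an LLM: small rewrites applied to the current best program.
# "x // 0" is included on purpose so that some candidates are erroneous.
SNIPPETS = ["x + 1", "x * 2", "x * x", "x - 3", "x // 0", "abs(x)"]


def propose_candidate(parent: str, rng: random.Random) -> str:
    """Wrap the parent expression inside a randomly chosen snippet."""
    return rng.choice(SNIPPETS).replace("x", f"({parent})")


def evaluate(expr: str, cases: List[Tuple[int, int]]) -> Optional[int]:
    """Score a candidate (higher is better), or return None if it is erroneous.

    eval() runs arbitrary code; a real system would execute candidates in a sandbox.
    """
    try:
        program = eval(f"lambda x: {expr}")
        return -sum(abs(program(x) - y) for x, y in cases)
    except Exception:
        return None


def search(cases: List[Tuple[int, int]], iterations: int = 300, seed: int = 0):
    """Greedy generate-and-evaluate loop; returns (best_expr, best_score, rejected)."""
    rng = random.Random(seed)
    best_expr = "x"
    best_score = evaluate(best_expr, cases)
    rejected = 0
    for _ in range(iterations):
        candidate = propose_candidate(best_expr, rng)
        score = evaluate(candidate, cases)
        if score is None:
            rejected += 1                                 # weeded out by the evaluator
        elif score > best_score:
            best_expr, best_score = candidate, score      # keep only improvements
    return best_expr, best_score, rejected


examples = [(x, 2 * x + 2) for x in range(-5, 6)]
print(search(examples))
```

Keeping only strict improvements makes this a greedy hill climb; FunSearch itself maintains a database of many programs and samples from it, which this sketch omits for brevity.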

He also worked on the early stages of what became Google DeepMind’s AlphaEvolve system, which uses several LLMs working together as agents to create entire new code bases for solving complex problems. Google has credited AlphaEvolve with finding ways to optimize its LLMs. For instance, it found a way to improve on the way Gemini does matrix multiplication to deliver a 23% speed-up; it also optimized another key step in the way Transformers, the kind of AI architecture on which LLMs are based, work, boosting speeds by 32%.

Cofounding Hiverge with him is his brother Hamza Fawzi, a professor of applied mathematics at the University of Cambridge, who is serving as a technical advisor to the company; and Bernardino Romera-Paredes, who was part of the Google DeepMind team that created AlphaFold and who is now Hiverge’s chief technology officer.

Hiverge has already demonstrated the utility of its Hive system by winning the Airbus Beluga Challenge, which asks contestants to find the optimal way to load and store aircraft parts carried by an Airbus Beluga XL aircraft. Hiverge’s solution delivered a 10,000-fold speed-up over the existing aircraft-loading algorithm. The company also showed that it could take a machine learning training algorithm that was already optimized and make it another three times faster. And it has found novel ways to improve computer vision algorithms.

Alhussein Fawzi said that Hiverge, based in Cambridge, England, currently has six employees but that it would use the money raised in its latest funding round to expand its team. “We will also transition from research to building out our product,” he said.

The company plans to make its technology accessible through cloud marketplaces like AWS and Google Cloud, where customers can directly use the system on their own code. The platform analyzes which parts of code represent bottlenecks, generates improved algorithms, and provides recommendations to engineers.


AI in classroom NC | Board of education considers policy for artificial intelligence in Wake County Public School district

CARY, N.C. (WTVD) — The Wake County Board of Education held the first in a series of meetings to discuss the development of the district’s AI policy.

Board members learned about a number of AI-related topics, including how AI is being used now and the potential risks of the growing technology.

WCPSS Superintendent Dr. Robert Taylor said the district wanted board members to be well-informed now before developing a district-wide policy.

“The one thing I wanted to make sure is that we didn’t create a situation where we restrict something that is going to be a part of society, that our students are going to be responsible for learning, that our teachers are going to be responsible for doing so,” he said.

A team from Amazon Web Services, or AWS, gave the board an informational presentation on AI.

District staff say there is no timeline for the adoption of the policy right now.

AI could be a major tool for the district, with the board saying it could help with personalized learning plans.

Still, some board members expressed concerns about how to teach students to use AI responsibly.

“I think the biggest concern that everyone has is academic integrity and honesty, things that can be used with AI to give false narratives, false pictures,” said Dr. Taylor.

Mikaya Thurmond is an AI expert and lecturer. She says the district’s policy framework should include AI training for teachers and rules governing students’ use of AI.

“If anyone believes that students are not using AI to get to conclusions and to turn in homework, at this point, they’re just not being honest about it,” she said.

For starters, she says, students should credit AI when they use it on assignments and show their chat history with AI programs.

“That tells me you’re at least doing the critical thinking part,” said Thurmond. “And then there should be some assignments where no AI is allowed and some where it is integrated. But I think that there has to be a mixture once educators know how to use it themselves.”

Something the superintendent and Thurmond agree on is parental involvement.

They both say parents should be having conversations now with their children about appropriate conversations to have with AI.

Copyright © 2025 WTVD-TV. All Rights Reserved.
