Ethics & Policy

An AI Ethics Roadmap Beyond Academic Integrity For Higher Education


Higher education institutions are rapidly embracing artificial intelligence, but often without a comprehensive strategic framework. According to the 2025 EDUCAUSE AI Landscape Study, 74% of institutions prioritized AI use for academic integrity alongside other core challenges like coursework (65%) and assessment (54%). At the same time, 68% of respondents say students use AI “somewhat more” or “a lot more” than faculty.

These data underscore a potential misalignment: institutions recognize integrity as a top concern, but students are racing ahead with AI while faculty lack commensurate fluency. As a result, AI ethics debates are unfolding in classrooms led by underprepared educators.

Integrating ethical considerations alongside AI tools in education is essential. Employers have made it clear that ethical reasoning and responsible technology use are critical skills in today’s workforce. According to the Graduate Management Admission Council’s 2024 Corporate Recruiters Survey, these skills are increasingly vital for graduates, which positions ethics as a competitive advantage rather than a supplemental skill.

Yet, many institutions struggle to clearly define how ethics should intertwine with their AI-enhanced pedagogical practices. Recent discussions with education leaders from Grammarly, SAS, and the University of Delaware offer actionable strategies to ethically and strategically integrate AI into higher education.

Ethical AI At The Core

Grammarly’s commitment to ethical AI was partially inspired by a viral incident: a student using Grammarly’s writing support was incorrectly accused of plagiarism by an AI detector. In response, Grammarly introduced Authorship, a transparency tool that delineates student-created content from AI-generated or refined content. Authorship provides crucial context for student edits, enabling educators to shift from suspicion to meaningful teaching moments.

Similarly, SAS has embedded ethical safeguards into its platform, SAS Viya, including built-in bias detection tools and ethically vetted “model cards.” These features help students and faculty recognize and proactively address potential biases in AI models.

SAS supports faculty through comprehensive professional development, including an upcoming AI Foundations credential with a module focused on Responsible Innovation and Trustworthy AI. Grammarly partners directly with institutions like the University of Florida, where Associate Provost Brian Harfe redesigned a general education course to emphasize reflective engagement with AI tools, enhancing student agency and ethical awareness.

Campus Spotlight: University of Delaware

The University of Delaware offers a compelling case study. In the wake of COVID-19, its Academic Technology Services team tapped into 15 years of lecture capture data to build “Study Aid,” a generative AI-powered tool that helps students create flashcards, quizzes, and summaries from course transcripts. Led by instructional designer Erin Ford Sicuranza and developer Jevonia Harris, the initiative exemplifies ethical, inclusive innovation:

- Data Integrity: The system uses time-coded transcripts, ensuring auditability and traceability.

- Human in the Loop: Faculty validate topics before the content is used.

- Knowledge Graph Approach: Instead of retrieval-based AI, the tool builds structured data to map relationships and respect academic complexity.

- Cross-Campus Collaboration: Librarians, engineers, data scientists, and faculty were involved from the start.

- Ethical Guardrails: Student access is gated until full review, and the university retains consent-based control over data.

Though the tool is still in its pilot phase, faculty from diverse disciplines, including psychology, climate science, and marketing, have opted in. With support from AWS and a growing slate of speaking engagements, UD has emerged as a national model. Its “Aim Higher” initiative brought together IT leaders, faculty, and software developers for a conference and a hands-on AI Makerspace in June 2025.

As Sicuranza put it: “We didn’t set out to build AI. We used existing tools in a new way—and we did it ethically.”

An Ethical Roadmap For The AI Era

Artificial intelligence is not a neutral force—it reflects the values of its designers and users. As colleges and universities prepare students for AI-rich futures, they must do more than teach tools. They must cultivate responsibility, critical thinking, and the ethical imagination to use AI wisely. Institutions that lead on ethics will shape the future—not just of higher education, but of society itself.

Now is the time to act by building capacity, empowering communities, and leading with purpose.


Meneath: The Hidden Island of Ethics


| Title | Meneath: The Hidden Island of Ethics |
|---|---|
| Release status | Released |
| Release date | Sep 10, 2021 |
| Language | English |
| Genre | Animation |
| Actors | Gail Maurice, Lake Delisle, Kent McQuaid |
| Director | Terril Calder |
| Critic Rating | 7.2 |
| Duration | 20 mins |



5 interesting stats to start your week

Third of UK marketers have ‘dramatically’ changed AI approach since AI Act

More than a third (37%) of UK marketers say they have ‘dramatically’ changed their approach to AI since the introduction of the European Union’s AI Act a year ago, according to research by SAP Emarsys.

Additionally, nearly half (44%) of UK marketers say their approach to AI is more ethical than it was this time last year, while 46% report a better understanding of AI ethics, and 48% claim full compliance with the AI Act, which is designed to ensure safe and transparent AI.

The act sets out a phased approach to regulating the technology, classifying models into risk categories and establishing legal, technological, and governance frameworks that will come into force over the next two years.

However, some marketers are sceptical about the legislation, with 28% raising concerns that the AI Act will lead to the end of innovation in marketing.

Source: SAP Emarsys

Shoppers more likely to trust user reviews than influencers

Nearly two-thirds (65%) of UK consumers say they have made a purchase based on online reviews or comments from fellow shoppers, as opposed to 58% who say they have made a purchase thanks to a social media endorsement.

Sports and leisure equipment (63%), decorative homewares (58%), luxury goods (56%), and cultural events (55%) are identified as product categories where consumers are most likely to find peer-to-peer information valuable.

Accurate product information was found to be a key factor in whether a review was positive or negative. Two-thirds (66%) of UK shoppers say that discrepancies between the product they receive and its description are a key reason for leaving negative reviews, and 40% of respondents say they have returned an item in the past year because the product details were inaccurate or misleading.

According to research by Akeeno, purchases driven by influencer activity have also declined since 2023, with 50% reporting having made a purchase based on influencer content in 2025 compared to 54% two years ago.

Source: Akeeno

77% of B2B marketing leaders say buyers still rely on their networks

When vetting what brands to work with, 77% of B2B marketing leaders say potential buyers still look at the company’s wider network as well as its own channels.

Given the amount of content professionals are faced with, they are more likely to rely on other professionals they already know and trust, according to research from LinkedIn.

More than two-fifths (43%) of B2B marketers globally say their network is still their primary source for advice at work, ahead of family and friends, search engines, and AI tools.

Additionally, younger professionals surveyed say they are still somewhat sceptical of AI, with three-quarters (75%) of 18- to 24-year-olds saying that even as AI becomes more advanced, there’s still no substitute for the intuition and insights they get from trusted colleagues.

Since professionals are more likely to trust content and advice from peers, marketers are now investing more in creators, employees, and subject matter experts to build trust. As a result, 80% of marketers say trusted creators are now essential to earning credibility with younger buyers.

Source: LinkedIn

Business confidence up 11 points but leaders remain concerned about economy

Business leader confidence has increased slightly from last month, having risen from -72 in July to -61 in August.

The IoD Directors’ Economic Confidence Index, which measures business leader optimism in prospects for the UK economy, is now back to where it was immediately after last year’s Budget.

This improvement comes from several factors, including the rise in investment intentions (up from -27 in July to -8 in August), the rise in headcount expectations from -23 to -4 over the same period, and the increase in revenue expectations from -8 to 12.

Additionally, business leaders’ confidence in their own organisations is also up, standing at 1 in August compared to -9 in July.

Several factors were identified as being of concern for business leaders; these include UK economic conditions at 76%, up from 67% in May, and both employment taxes (remaining at 59%) and business taxes (up to 47%, from 45%) continuing to be of significant concern.

Source: The Institute of Directors

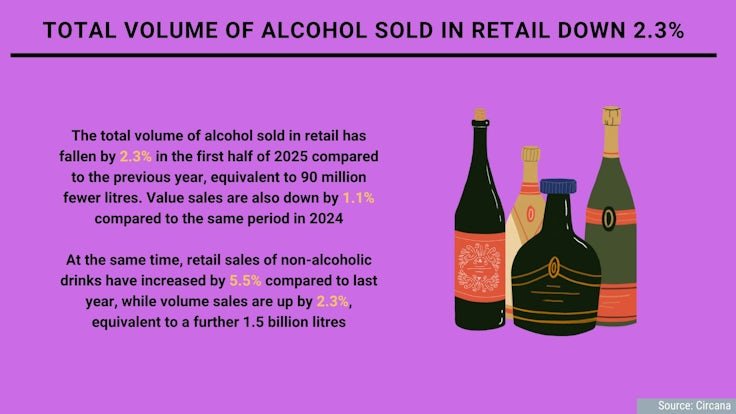

Total volume of alcohol sold in retail down 2.3%

The total volume of alcohol sold in retail has fallen by 2.3% in the first half of 2025 compared to the previous year, equivalent to 90 million fewer litres. Value sales are also down by 1.1% compared to the same period in 2024.

At the same time, retail sales of non-alcoholic drinks have increased by 5.5% compared to last year, while volume sales are up by 2.3%, equivalent to a further 1.5 billion litres.

As the demand for non-alcoholic beverages grows, people increasingly expect these options to be available in their local bars and restaurants, with 55% of Brits and Europeans now expecting bars to always serve non-alcoholic beer.

Shifts are also under way within the alcoholic beverages category: value sales of no- and low-alcohol spirits rose by 16.1%, and sales of ready-to-drink spirits grew by 11.6% compared to last year.

Source: Circana


AI ethics under scrutiny, young people most exposed

New reports into the rise of artificial intelligence (AI) showed incidents linked to ethical breaches have more than doubled in just two years.

At the same time, entry-level job opportunities have been shrinking, partly due to the spread of this automation.

AI is moving from the margins to the mainstream at extraordinary speed and both workplaces and universities are struggling to keep up.

Tools such as ChatGPT, Gemini and Claude are now being used to draft emails, analyse data, write code, mark essays and even decide who gets a job interview.

Alongside this rapid rollout, a March report from McKinsey, one by the OECD in July and an earlier Rand report warned of a sharp increase in ethical controversies — from cheating scandals in exams to biased recruitment systems and cybersecurity threats — leaving regulators and institutions scrambling to respond.

The McKinsey survey said almost eight in 10 organisations now used AI in at least one business function, up from half in 2022.

While adoption promises faster workflows and lower costs, many companies deploy AI without clear policies. Universities face similar struggles, with students increasingly relying on AI for assignments and exams while academic rules remain inconsistent, it said.

The OECD’s AI Incidents and Hazards Monitor reported that ethical and operational issues involving AI have more than doubled since 2022.

Common concerns included accountability — who is responsible when AI errs; transparency — whether users understand AI decisions; and fairness, whether AI discriminates against certain groups.

Many models operated as “black boxes”, producing results without explanation, making errors hard to detect and correct, it said.

In workplaces, AI is used to screen CVs, rank applicants, and monitor performance. Yet studies show AI trained on historical data can replicate biases, unintentionally favouring certain groups.

Rand reported that AI was also used to manipulate information, influence decisions in sensitive sectors, and conduct cyberattacks.

Meanwhile, 41 per cent of professionals report that AI-driven change is harming their mental health, with younger workers feeling most anxious about job security.

LinkedIn data showed that entry-level roles in the US have fallen by more than 35 per cent since 2023, while 63 per cent of executives expected AI to replace tasks currently done by junior staff.

Aneesh Raman, LinkedIn’s chief economic opportunity officer, described this as “a perfect storm” for new graduates: hiring freezes, economic uncertainty and AI disruption, as the BBC reported on August 26.

LinkedIn forecasts that 70 per cent of jobs will look very different by 2030.

Recent Stanford research confirmed that employment among early-career workers in AI-exposed roles has dropped 13 per cent since generative AI became widespread, while employment among more experienced workers and in less AI-exposed roles remained stable.

Companies are adjusting through layoffs rather than pay cuts, squeezing younger workers out, it found.

In Belgium, AI ethics and fairness debates have intensified following a scandal in Flanders’ medical entrance exams.

Investigators caught three candidates using ChatGPT during the test.

Separately, 19 students filed appeals, suspecting others may have used AI unfairly after unusually high pass rates: Some 2,608 of 5,544 participants passed but only 1,741 could enter medical school. The success rate jumped to 47 per cent from 18.9 per cent in 2024, raising concerns about fairness and potential AI misuse.

Flemish education minister Zuhal Demir condemned the incidents, saying students who used AI had “cheated themselves, the university and society”.

Exam commission chair Professor Jan Eggermont noted that the higher pass rate might also reflect easier questions, which were deliberately simplified after the previous year’s exam proved excessively difficult, as well as the record number of participants, rather than AI-assisted cheating alone.

French-speaking universities in the other part of the country were not affected by this scandal, as they still conduct medical entrance exams entirely on paper, a format Demir said she was considering returning to.
