Jobs & Careers

Large Language Models: A Self-Study Roadmap

Large language models are a big step forward in artificial intelligence. They can predict and generate text that sounds like it was written by a human. LLMs learn the rules of language, like grammar and meaning, which allows them to perform many tasks. They can answer questions, summarize long texts, and even create stories. The growing need for automatically generated and organized content is driving the expansion of the large language model market. According to one report, Large Language Model (LLM) Market Size & Forecast:

“The global LLM Market is currently witnessing robust growth, with estimates indicating a substantial increase in market size. Projections suggest a notable expansion in market value, from USD 6.4 billion in 2024 to USD 36.1 billion by 2030, reflecting a substantial CAGR of 33.2% over the forecast period”

This growth makes 2025 a good year to start learning LLMs. Learning them well calls for a structured, stepwise approach that covers core concepts, model architectures, training, optimization, deployment, and advanced retrieval methods. This roadmap presents a step-by-step path to building that expertise. So, let’s get started.

Step 1: Cover the Fundamentals

You can skip this step if you already know the basics of programming, machine learning, and natural language processing. However, if you are new to these concepts, consider learning them from the following resources:

- Programming: You need to learn the basics of programming in Python, the most popular programming language for machine learning. These resources can help you learn Python:

- Machine Learning: After you learn programming, you have to cover the basic concepts of machine learning before moving on with LLMs. The key here is to focus on concepts like supervised vs. unsupervised learning, regression, classification, clustering, and model evaluation. The best course I found to learn the basics of ML is:

- Natural Language Processing: It is very important to learn the fundamental topics of NLP if you want to learn LLMs. Focus on the key concepts: tokenization, word embeddings, attention mechanisms, etc. I have given a few resources that might help you learn NLP:

Step 2: Understand Core Architectures Behind Large Language Models

Large language models rely on various architectures, with transformers being the most prominent foundation. Understanding these different architectural approaches is essential for working effectively with modern LLMs. Here are the key topics and resources to enhance your understanding:

- Understand the transformer architecture, with emphasis on self-attention, multi-head attention, and positional encoding.

- Start with Attention Is All You Need, then explore different architectural variants: decoder-only models (GPT series), encoder-only models (BERT), and encoder-decoder models (T5, BART).

- Use libraries like Hugging Face’s Transformers to access and implement various model architectures.

- Practice fine-tuning different architectures for specific tasks like classification, generation, and summarization.
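To make the self-attention idea from the list above concrete, here is a minimal sketch of single-head scaled dot-product attention in plain Python. It is an illustration, not a production implementation: the helper names (`softmax`, `matmul`, `self_attention`), the toy input, and the use of identity matrices as projection weights are all assumptions chosen to keep the example small. Real models learn these projections and run many heads in parallel.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x has shape (seq_len, d_model); w_q, w_k, w_v have shape (d_model, d_k).
    Returns the attended output and the attention weights.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose of K
    scores = matmul(q, k_t)               # scores[i][j]: token i vs. token j
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights

# Toy sequence of three 2-dimensional token vectors (hypothetical values).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for learned projections
output, weights = self_attention(x, identity, identity, identity)
```

Each row of `weights` sums to 1 and describes how strongly one token attends to every token in the sequence. Multi-head attention repeats this computation with several independent projection sets and concatenates the results.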

Recommended Learning Resources

Step 3: Specializing in Large Language Models

With the basics in place, it’s time to focus specifically on LLMs. These courses are designed to deepen your understanding of their architecture, ethical implications, and real-world applications:

- LLM University – Cohere (Recommended): Offers both a sequential track for newcomers and a non-sequential, application-driven path for seasoned professionals. It provides a structured exploration of both the theoretical and practical aspects of LLMs.

- Stanford CS324: Large Language Models (Recommended): A comprehensive course exploring the theory, ethics, and hands-on practice of LLMs. You will learn how to build and evaluate LLMs.

- Maxime Labonne Guide (Recommended): This guide provides a clear roadmap for two career paths: LLM Scientist and LLM Engineer. The LLM Scientist path is for those who want to build advanced language models using the latest techniques. The LLM Engineer path focuses on creating and deploying applications that use LLMs. It also includes The LLM Engineer’s Handbook, which takes you step by step from designing to launching LLM-based applications.

- Princeton COS597G: Understanding Large Language Models: A graduate-level course that covers models like BERT, GPT, T5, and more. Ideal for those aiming to engage in deep technical research, this course explores both the capabilities and limitations of LLMs.

- Fine Tuning LLM Models – Generative AI Course: When working with LLMs, you will often need to fine-tune them, so consider learning efficient fine-tuning techniques such as LoRA and QLoRA, as well as model quantization techniques. These approaches can help reduce model size and computational requirements while maintaining performance. This course teaches fine-tuning with LoRA and QLoRA, as well as quantization, using Llama 2, Gradient, and the Google Gemma model.

- Finetune LLMs to teach them ANYTHING with Huggingface and Pytorch | Step-by-step tutorial: It provides a comprehensive guide on fine-tuning LLMs using Hugging Face and PyTorch. It covers the entire process, from data preparation to model training and evaluation, enabling viewers to adapt LLMs for specific tasks or domains.
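The core idea behind LoRA, mentioned in the fine-tuning resources above, can be sketched in a few lines of plain Python. The frozen weight matrix `W` stays untouched, and only two small matrices `A` and `B` are trained, so the layer computes `W x + (alpha / r) * B (A x)`. The dimensions, the `alpha` value, and the helper names below are illustrative assumptions and do not come from any of the listed courses.

```python
import random

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(mi * vi for mi, vi in zip(row, v)) for row in m]

def lora_forward(x, w, a, b, alpha):
    """Forward pass of a linear layer with a LoRA adapter.

    w: frozen weights, shape (d_out, d_in)
    a: trainable, shape (r, d_in); b: trainable, shape (d_out, r)
    """
    r = len(a)
    base = matvec(w, x)               # frozen path
    update = matvec(b, matvec(a, x))  # low-rank path: B (A x)
    scale = alpha / r
    return [y + scale * u for y, u in zip(base, update)]

d_in, d_out, r = 8, 6, 2  # hypothetical sizes
rng = random.Random(0)
w = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]  # frozen
a = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]   # small random init
b = [[0.0] * r for _ in range(d_out)]                               # zero init

x = [1.0] * d_in
y = lora_forward(x, w, a, b, alpha=16)

full_params = d_in * d_out        # parameters updated by full fine-tuning
lora_params = r * (d_in + d_out)  # parameters updated by LoRA
```

Because `b` starts at zero, the adapted layer initially behaves exactly like the frozen one, and training only has to update `lora_params` values instead of `full_params`. The savings grow quickly with layer size, since the LoRA count scales with `d_in + d_out` rather than `d_in * d_out`. QLoRA applies the same adapter idea on top of a quantized base model.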

Step 4: Build, Deploy & Operationalize LLM Applications

Learning a concept theoretically is one thing; applying it practically is another. The former strengthens your understanding of fundamental ideas, while the latter enables you to translate those concepts into real-world solutions. This section focuses on integrating large language models into projects using popular frameworks, APIs, and best practices for deploying and managing LLMs in production and local environments. By mastering these tools, you’ll efficiently build applications, scale deployments, and implement LLMOps strategies for monitoring, optimization, and maintenance.

- Application Development: Learn how to integrate LLMs into user-facing applications or services.

- LangChain: LangChain is a fast and efficient framework for LLM projects. Learn how to build applications using LangChain.

- API Integrations: Explore how to connect various APIs, like OpenAI’s, to add advanced features to your projects.

- Local LLM Deployment: Learn to set up and run LLMs on your local machine.

- LLMOps Practices: Learn the methodologies for deploying, monitoring, and maintaining LLMs in production environments.

Recommended Learning Resources & Projects

Building LLM applications:

Local LLM Deployment:

Deploying & Managing LLM applications In Production Environments:

GitHub Repositories:

- Awesome-LLM: It is a curated collection of papers, frameworks, tools, courses, tutorials, and resources focused on large language models (LLMs), with a special emphasis on ChatGPT.

- Awesome-langchain: This repository is the hub to track initiatives and projects related to LangChain’s ecosystem.

Step 5: RAG & Vector Databases

Retrieval-Augmented Generation (RAG) is a hybrid approach that combines information retrieval with text generation. Instead of relying only on pre-trained knowledge, RAG retrieves relevant documents from external sources before generating responses. This improves accuracy, reduces hallucinations, and makes models more useful for knowledge-intensive tasks.

- Understand RAG & its Architectures: Standard RAG, Hierarchical RAG, Hybrid RAG, etc.

- Vector Databases: Understand how to implement vector databases with RAG. Vector databases store and retrieve information based on semantic meaning rather than exact keyword matches. This makes them ideal for RAG-based applications as these allow for fast and efficient retrieval of relevant documents.

- Retrieval Strategies: Implement dense retrieval, sparse retrieval, and hybrid search for better document matching.

- LlamaIndex & LangChain: Learn how these frameworks facilitate RAG.

- Scaling RAG for Enterprise Applications: Understand distributed retrieval, caching, and latency optimizations for handling large-scale document retrieval.
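The retrieve-then-generate flow described above can be sketched without any external services. In the example below, `embed` is a deliberately crude stand-in: it builds bag-of-words counts, so it matches on shared words rather than meaning, which makes it closer to sparse retrieval than to the dense, semantic retrieval a real vector database provides. The function names, sample documents, and prompt wording are all assumptions made for illustration.

```python
import math
from collections import Counter

def embed(text):
    """Toy 'embedding': bag-of-words counts (stand-in for a learned model)."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, documents, k=2):
    """Assemble the augmented prompt that would be sent to an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

docs = [
    "vLLM uses PagedAttention to serve models efficiently",
    "LoRA adds low-rank adapters for fine-tuning",
    "Vector databases retrieve documents by semantic similarity",
]
top = retrieve("how do vector databases retrieve documents", docs, k=1)
prompt = build_prompt("how do vector databases retrieve documents", docs, k=1)
```

In a real pipeline, `embed` would call an embedding model, the sorted scan would be replaced by an approximate nearest-neighbor index inside a vector database, and `prompt` would be passed to the LLM for generation.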

Recommended Learning Resources & Projects

Basic Foundational courses:

Advanced RAG Architectures & Implementations:

Enterprise-Grade RAG & Scaling:

Step 6: Optimize LLM Inference

Optimizing inference is crucial for making LLM-powered applications efficient, cost-effective, and scalable. This step focuses on techniques to reduce latency, improve response times, and minimize computational overhead.

Key Topics

- Model Quantization: Reduce model size and improve speed using techniques like 8-bit and 4-bit quantization (e.g., GPTQ, AWQ).

- Efficient Serving: Deploy models efficiently with frameworks like vLLM, TGI (Text Generation Inference), and DeepSpeed.

- LoRA & QLoRA: Use parameter-efficient fine-tuning methods to enhance model performance without high resource costs.

- Batching & Caching: Optimize API calls and memory usage with batch processing and caching strategies.

- On-Device Inference: Run LLMs on edge devices using tools like GGUF (for llama.cpp) and optimized runtimes like ONNX and TensorRT.
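As a rough illustration of the quantization item in the list above, the sketch below applies symmetric (absmax) 8-bit quantization to a handful of weights. This is the simplest round-to-nearest scheme and is not how GPTQ or AWQ work; those methods use calibration data to choose quantized values more carefully. The function names and sample weights are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric absmax quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in quantized]

weights = [0.5, -1.27, 0.03, 1.0]  # hypothetical float weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Worst-case rounding error is about half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each weight is now stored as one small integer plus a shared `scale`, instead of a 16-bit or 32-bit float. The price is a rounding error of at most roughly `scale / 2` per weight. 4-bit schemes follow the same pattern with a narrower integer range, which is why they usually quantize small groups of weights with separate scales.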

Recommended Learning Resources

- Efficiently Serving LLMs – Coursera – A guided project on optimizing and deploying large language models efficiently for real-world applications.

- Mastering LLM Inference Optimization: From Theory to Cost-Effective Deployment – YouTube – A tutorial discussing the challenges and solutions in LLM inference. It focuses on scalability, performance, and cost management. (Recommended)

- MIT 6.5940 Fall 2024 TinyML and Efficient Deep Learning Computing – It covers model compression, quantization, and optimization techniques to deploy deep learning models efficiently on resource-constrained devices. (Recommended)

- Inference Optimization Tutorial (KDD) – Making Models Run Faster – YouTube – A tutorial from the Amazon AWS team on methods to accelerate LLM runtime performance.

- Large Language Model inference with ONNX Runtime (Kunal Vaishnavi) – A guide on optimizing LLM inference using ONNX Runtime for faster and more efficient execution.

- Run Llama 2 Locally On CPU without GPU GGUF Quantized Models Colab Notebook Demo – A step-by-step tutorial on running LLaMA 2 models locally on a CPU using GGUF quantization.

- Tutorial on LLM Quantization w/ QLoRA, GPTQ and Llamacpp, LLama 2 – Covers various quantization techniques like QLoRA and GPTQ.

- Inference, Serving, PagedAttention and vLLM – Explains inference optimization techniques, including PagedAttention and vLLM, to speed up LLM serving.

Wrapping Up

This guide covers a comprehensive roadmap to learning and mastering LLMs in 2025. I know it might seem overwhelming at first, but trust me — if you follow this step-by-step approach, you’ll cover everything in no time. If you have any questions or need more help, do comment.

Kanwal Mehreen

Kanwal is a machine learning engineer and a technical writer with a profound passion for data science and the intersection of AI with medicine. She co-authored the ebook “Maximizing Productivity with ChatGPT”. As a Google Generation Scholar 2022 for APAC, she champions diversity and academic excellence. She’s also recognized as a Teradata Diversity in Tech Scholar, Mitacs Globalink Research Scholar, and Harvard WeCode Scholar. Kanwal is an ardent advocate for change, having founded FEMCodes to empower women in STEM fields.


Larry Ellison Tops Elon Musk to Become World’s Richest Person After Oracle Stock Surge

Larry Ellison has become the world’s richest person, overtaking Tesla chief Elon Musk after an unprecedented rally in Oracle’s stock added more than $100 billion to his fortune in a single day.

The 81-year-old Oracle co-founder and chief technology officer saw his net worth climb to around $393 billion, according to the Bloomberg Billionaires Index. The leap dethroned Musk, whose wealth has been under pressure amid a slide in Tesla shares and the volatile performance of his other ventures.

The trigger came from Oracle’s fiscal first-quarter results which revealed explosive growth in the company’s artificial intelligence-driven cloud business.

Investor enthusiasm sent Oracle’s stock soaring more than 40 percent, pushing the software giant’s market value up by nearly $200 billion in a single session.

Analysts described the rally as one of the most dramatic in the company’s history, fueled by a swelling order book for AI cloud services that is now approaching half a trillion dollars.

Oracle Corporation reported fiscal first-quarter 2026 revenue of $14.9 billion on Tuesday, up 12% year-over-year in US dollars and 11% in constant currency.

Cloud revenue, including infrastructure and applications, rose 28% to $7.2 billion. Infrastructure-as-a-Service (IaaS) revenue grew 55% to $3.3 billion, while Software-as-a-Service (SaaS) applications revenue increased 11% to $3.8 billion. Within SaaS, Fusion Cloud ERP revenue was $1 billion, up 17%, and NetSuite Cloud ERP revenue also reached $1 billion, up 16%.

Oracle CEO Safra Catz said the company has signed major cloud contracts with top AI players such as OpenAI, xAI, Meta, NVIDIA, and AMD. “At the end of Q1, remaining performance obligations, or RPO, now to $455 billion. This is up 359% from last year and up $317 billion from the end of Q4. Our cloud RPO grew nearly 500% on top of 83% growth last year,” she said.

“MultiCloud database revenue from Amazon, Google and Microsoft grew at the incredible rate of 1,529% in Q1,” said Ellison. “We expect MultiCloud revenue to grow substantially every quarter for several years as we deliver another 37 datacenters to our three Hyperscaler partners, for a total of 71.”

Ellison added that next month at Oracle AI World, the company will introduce a new Cloud Infrastructure service called the ‘Oracle AI Database’ that enables customers to use LLMs of their choice, including Google’s Gemini, OpenAI’s ChatGPT, and xAI’s Grok, directly on top of the Oracle Database to easily access and analyse all their existing database data.

Oracle also raised its forecast for Oracle Cloud Infrastructure, projecting 77% growth this fiscal year to $18 billion, up from an earlier estimate of 70%.

Over the longer term, the company expects cloud infrastructure revenues to accelerate dramatically, targeting $32 billion next year and as much as $144 billion within five years. “We now expect Oracle Cloud Infrastructure will grow 77% to $18 billion this fiscal year and then increase to $32 billion, $73 billion, $114 billion and $144 billion over the following 4 years,” said Catz.

The post Larry Ellison Tops Elon Musk to Become World’s Richest Person After Oracle Stock Surge appeared first on Analytics India Magazine.


Pune-Based Astrophel Aerospace Develops Indigenous Cryogenic Pump for Rocket Engines

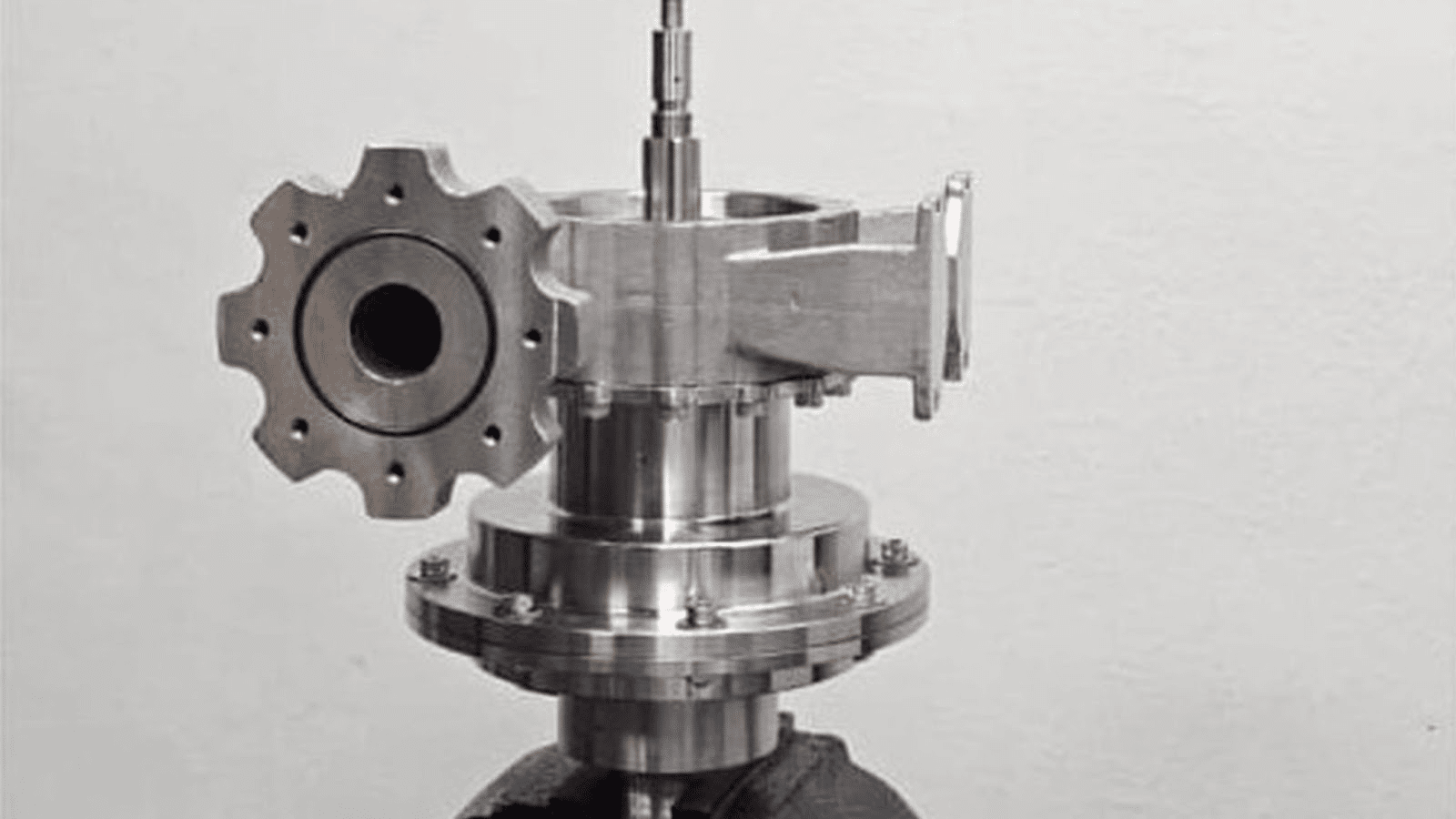

Astrophel Aerospace, a space tech startup from Pune, has developed an indigenous cryogenic pump designed to power its upcoming Astra C1 rocket engine. The pump, capable of spinning at 25,000 revolutions per minute, is undergoing testing at Indian Space Research Organisation (ISRO) facilities.

The company plans to upgrade it into a turbopump for integration into its first and second stage engines by late 2026.

Astrophel announced the development, stating that it positions the firm among the first private Indian startups to build an in-house cryogenic pump. “This milestone is a testament to how India can indigenously develop advanced propulsion technologies at a fraction of global costs,” Suyash Bafna, co-founder of Astrophel Aerospace, said.

The company recently raised ₹6.84 crore (~$800,000) in a pre-seed funding round to develop a reusable semi-cryogenic launch vehicle and missile-grade guidance systems.

Testing and Global Plans

The company is also preparing to sign a memorandum of understanding with a US-based partner (unnamed as of now) and is exploring global collaborations for export opportunities at the sub-component level. These efforts aim to meet rising demand in both the space sector and industries such as oil and gas, which use cryogenic liquids.

According to Astrophel, the pump, though the size of a one-litre bottle, produces 100 to 150 horsepower, equivalent to a family car. The turbopump version will scale this to 500 to 600 horsepower for larger launch vehicles.

“ISRO’s certification will validate not just our pump, but India’s ability to innovate world-class space hardware with global export opportunities,” Bafna added.

Astrophel’s announcement comes as India works to expand its space economy from $8.4 billion in 2022 to $44 billion by 2033, aiming to capture 8% of the global market.

“This milestone represents the culmination of years of frugal engineering and is a stepping stone toward India’s first privately developed gas generator cycle,” Immanuel Louis, co-founder of Astrophel Aerospace, said.

The post Pune-Based Astrophel Aerospace Develops Indigenous Cryogenic Pump for Rocket Engines appeared first on Analytics India Magazine.


Esri India, Dhruva Space Partner to Expand Satellite Data Access

Sanjana Gupta

An information designer by training, Sanjana likes to delve into deep tech and enjoys learning about quantum, space, robotics and chips that build up our world. Outside of work, she likes to spend her time with books, especially those that explore the absurd.
