Books, Courses & Certifications

4-Year-Olds Pose Messy Challenge for Elementary Schools: Toilet-Training

More 4-year-olds across California are entering transitional kindergarten (TK) this year — curious and eager to play and learn. But some aren’t fully potty-trained, posing an unexpected challenge for schools.

“They are younger, and they’re going to have more accidents,” said Elyse Doerflinger, a TK teacher in the Woodlake Unified School District in Tulare County. “Then what?”

It’s a question school districts across the state are grappling with as they expand TK to younger children.

Once designed to serve only children who missed the kindergarten age cutoff, TK has expanded to include all 4-year-olds, including those who turn 4 by Sept. 1.

Teaching children through play is one thing, but handling potty issues is another. There’s state guidance, but with little to no local direction, toileting practices vary widely.

Private preschools vary in their approach to toilet-training. Many programs train preschool teachers to help children with toileting, while others require children to be potty-trained before enrolling. Public schools cannot require students to be toilet-trained, but elementary school teachers are often not trained to help.

Most districts have adopted a hands-off approach for TK students who have an accident: staff rely on verbal guidance, talking a student through changing out of soiled clothes from outside the bathroom door. When that fails, those students must wait for their parents to come to campus and help, disrupting everyone’s learning.

“You can’t hold down a job if you’re constantly being called to the school to get your kid,” Doerflinger said.

Other districts have created procedures to support students through toileting plans or special education services. Some teachers, without district guidance, have found ways to help their students as best as they can.

But there’s not one model that will work for all districts, schools, or even classrooms, said Patricia Lozano, executive director of the advocacy group Early Edge California.

‘We Do Not Wipe’

“Can you come wipe me?” a Fresno Unified School District TK student yelled out last week from the connected bathroom in Kristi Henkle’s class.

“We don’t do that,” she responded.

“Who’s going to do it?” the student quickly replied. It was the third day of school.

Educators in TK frequently remind young learners to use the restroom, wash their hands and flush the toilet, among other bathroom etiquette, like putting toilet paper, not paper towels, into the toilet.

But wiping students, many educators say, is well beyond those tasks.

“We do not wipe,” said Shawna Adam, a TK teacher in Hacienda La Puente Unified School District in Los Angeles County. “Our aides are not trained; neither are we as teachers for doing potty training. We’re not going to be trained in doing toileting and wiping. I am a general ed teacher.”

Although aides or paraprofessionals work alongside teachers to share responsibilities for serving young students, who will assist with wiping depends on the school district, its toileting practices for TK and labor contract language.

In many cases, a designated paraeducator is paid more to assist with wiping or changing.

“A lot of kids are not fully potty-trained,” Oakland Unified School District TK teacher Amairani Sanchez said. She has 24 students and two aides this year because the student-staff ratio for TK went down to 10-to-1. “Now that I have that second aide, if a kid needs help wiping, my para does that.”

According to the California Department of Education’s 32-page toileting toolkit, districts and schools should engage with union representatives about “which jobs will include direct toileting support activities, such as assisting a child with changing clothing or cleaning themselves.”

While contract language often dictates duties, if that isn’t specified, local district guidance or policy is necessary for educators to rely on — if it exists.

The Madera Unified School District’s two-page toileting guidance instructs its educators to verbally guide children through the process of changing into clean clothes if they wet themselves, or to discreetly send them to the office in the event of a bowel movement accident. That is the go-to strategy in other districts, such as Hacienda La Puente Unified.

“If there are accidents, (your) child (must) be able to take off their soiled clothing by themselves and we’ll give them wipes. If there’s No. 2, then, that’s on you; we call you to come down and change them,” Adam bluntly tells TK parents.

However much teachers may want to help a student in soiled clothes, most are wary of disciplinary action from their district or lawsuits from the student’s family.

Not enough districts have clear guidance with systems and supports in place for teachers to overcome the challenges created by the expansion of TK.

“Teachers across the state, in their unions, are fighting and advocating for more resources for our youngest students,” said David Goldberg, president of the California Teachers Association.

Proper Facilities Necessary

Without contract language or local guidance, educators sometimes reach a consensus on their own.

Special education paraprofessionals, who are often tasked with supporting children who are still in diapers, have helped general education teachers and aides create toileting resources for the classroom.

The special education teacher will “put men’s boxers over her own clothes to demonstrate how to pull your pants down,” Doerflinger said about the teacher at her school. “She’ll take paper plates and smear peanut butter all over and have the kids practice wiping it off. They learn you have to do it until it’s clean all the way.”

Even for districts that have adopted approaches, such as opt-in forms on whether students can be helped with toileting, educators need more than just guidance to handle it.

Teachers and aides require supplies, such as wipes and gloves, as well as a trash can for the proper disposal of materials.

Schools also need the right kinds of facilities.

In some schools, TK classrooms do not have their own bathrooms, and the young children must use the same bathroom as all other students. In such cases, educators are unable to guide a child through the process of cleaning and dressing themselves.

In one elementary bathroom, a naked TK student runs to the door each day, carrying her clothes because she doesn’t know how to put them back on.

That’s one of many reasons why having a bathroom in the TK classroom is ideal for 4-year-olds.

A lack of state funding has limited districts’ ability to add toilets and bathrooms for TK classrooms.

California voters in November approved $40 billion in local construction bonds and $10 billion in a statewide bond for facilities, but none of those funds are exclusively for transitional kindergarten. Because districts are also struggling to meet other facilities needs, such as outdated or deteriorating buildings, TK will likely not take priority.

Many school districts still report that facilities, including developing age-appropriate bathrooms connected to classrooms, are a top challenge in implementing universal prekindergarten, according to a June 2025 Learning Policy Institute report.

At Greenberg Elementary School in Fresno Unified, for example, one TK classroom has a bathroom, while the other doesn’t. The other classroom on campus with a bathroom is for kindergarten students, who also require smaller toilets.

Students in classes with attached bathrooms are free to go on their own schedules. Those without an in-class restroom, however, must either follow a stricter schedule, with the whole class going to the bathroom at once, or trek to the main elementary bathroom with an aide.

“The intention of having a second adult in the classroom is for them to be a second teacher,” not “the walker to the bathroom,” said Hanna Melnick, director of early learning policy at Learning Policy Institute. “It defeats the purpose.”

Putting two dozen students on the same potty schedule can also lead to more mishaps, teachers say, so they must move students in and out quickly to avoid accidents.

A Partnership With Families

Before this school year, there was an assumption that 4-year-olds who were not potty-trained needed an individualized education program (IEP) for special education services, which required an aide for changing diapers and helping with toileting.

But that shouldn’t be the norm, some teachers and experts say.

“If you actually think there could be a disability, then let’s assess and check,” said Doerflinger, the TK teacher in Woodlake Unified who has one student who is not potty-trained and has been identified as having special needs and another student in the process of being recognized for such services. “Some kids just have trauma. Some kids just take longer. Some kids are terrified of a bathroom with a loud flushing toilet.”

While there will need to be a mindset shift among educators who believe all students who are not potty-trained need special education services, teachers and experts agree that families should play an active role in potty training their 4-year-olds.

In Los Angeles Unified, the state’s largest school district, toileting is considered a team effort between families and schools, said Pia Sadaqatmal, the district’s chief of transitional programs.

For students to have “toileting independence,” they should have opportunities to practice their toileting skills and exercise that independence, she said, noting frequent bathroom reminders and breaks at school, step-by-step picture guides once in the restroom, and books, instructional materials and resources shared with families to support toileting at home.

By sharing resources with families, “we’re all using the same language from the time the child wakes up in the morning until the time the child goes to bed at night,” said Ranae Amezquita, the district’s early childhood education director.

“We say, ‘Are kids ready for school?’ Also, ‘Are our schools ready for kids?’” said Lozano with Early Edge. “That is something that schools need to think about.”


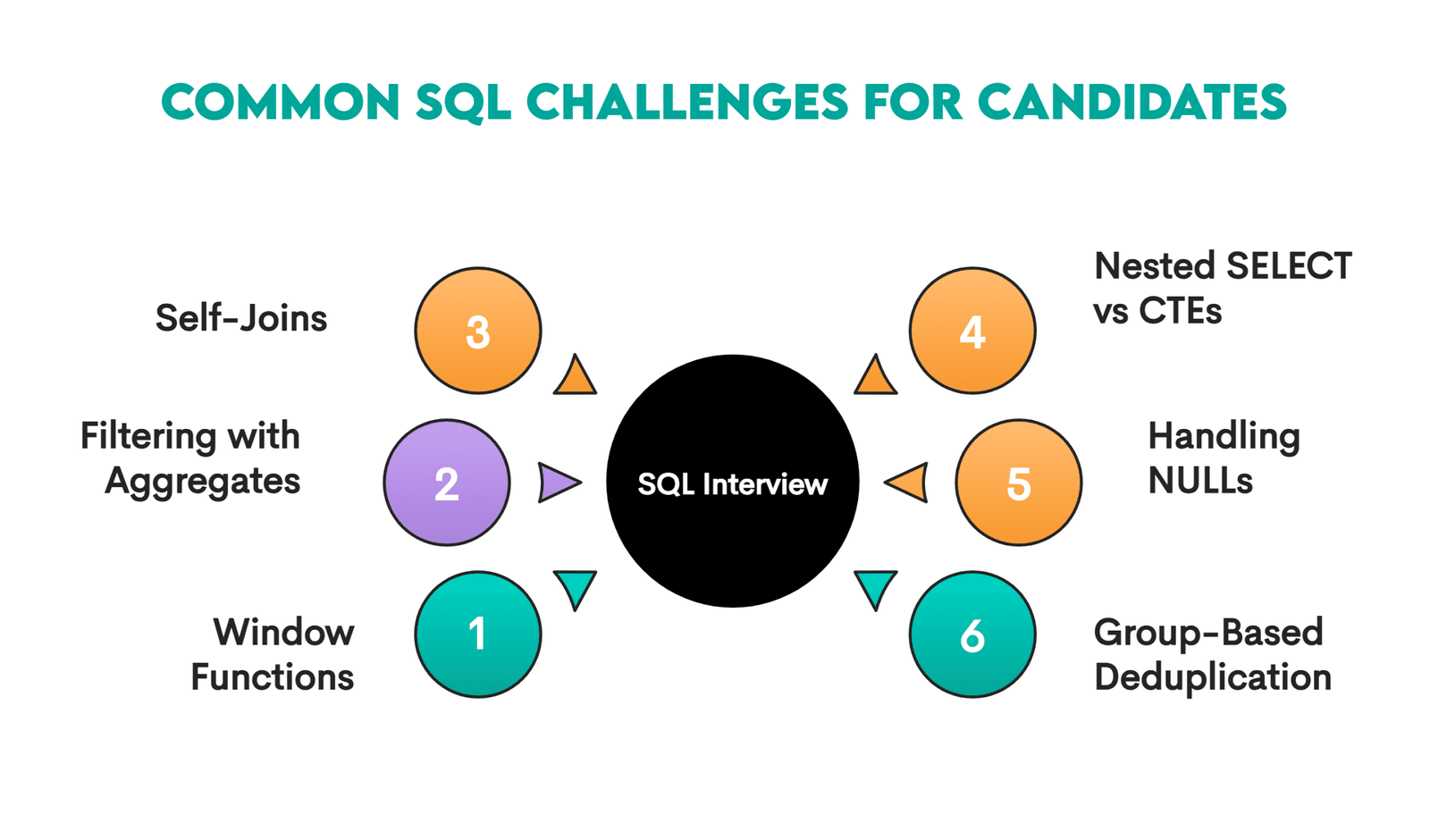

Most Candidates Fail These SQL Concepts in Data Interviews

Image by author | Canva

An interviewer’s job is to find the most suitable candidates for the advertised position. In doing so, they will gladly set up SQL interview questions to see if they can catch you off guard. There are several SQL concepts at which candidates often fail.

Hopefully, you’ll be one of those who avoid that destiny, as I’ll explain these concepts in detail below, complete with examples of how to solve certain problems correctly.

# 1. Window Functions

Why It’s Hard: Candidates memorize what each window function does but don’t really understand how window frames, partitions, or ordering actually work.

Common Mistakes: A common mistake is not specifying ORDER BY in ranking window functions or value window functions, such as LEAD() or LAG(), and expecting the query to work or for the result to be deterministic.

Example: In this example, you need to find users who made a second purchase within 7 days of any previous purchase.

You might write this query.

```sql
WITH ordered_tx AS (
    SELECT user_id,
           created_at::date AS tx_date,
           LAG(created_at::DATE) OVER (PARTITION BY user_id) AS prev_tx_date
    FROM amazon_transactions
)
SELECT DISTINCT user_id
FROM ordered_tx
WHERE prev_tx_date IS NOT NULL AND tx_date - prev_tx_date <= 7;
```

At first glance, everything might seem right. The code even outputs something that might appear to be a correct answer.

First of all, we are lucky that the code works at all! This happens simply because I’m writing it in PostgreSQL. In some other SQL flavors, you’d get an error since ORDER BY is mandatory in ranking and analytical window functions.

Second, the output is wrong; I highlighted some rows that shouldn’t be there. Why do they appear, then?

They appear because we didn’t specify an ORDER BY clause in the LAG() window function. Without it, the order of rows within each partition is undefined, so LAG() compares the current transaction to whichever row the engine happens to place before it, not necessarily the one that occurred immediately before it in time.

This is not what the question asks. We need to compare each transaction to the previous one by date. In other words, we need to specify this explicitly in the ORDER BY clause within the LAG() function.

```sql
WITH ordered_tx AS (
    SELECT user_id,
           created_at::date AS tx_date,
           -- ORDER BY makes "previous" mean the chronologically preceding purchase
           LAG(created_at::DATE) OVER (PARTITION BY user_id ORDER BY created_at) AS prev_tx_date
    FROM amazon_transactions
)
SELECT DISTINCT user_id
FROM ordered_tx
WHERE prev_tx_date IS NOT NULL AND tx_date - prev_tx_date <= 7;
```
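To try the corrected pattern locally, here is a minimal sketch that runs the query through Python’s built-in `sqlite3` module instead of PostgreSQL. The rows are invented for illustration, and because SQLite has no `::date` cast, the day difference is computed with `julianday()`; window functions such as LAG() need SQLite 3.25 or newer.

```python
import sqlite3

# Hypothetical rows; only the table and column names mirror the interview question.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE amazon_transactions (user_id INTEGER, created_at TEXT)")
conn.executemany(
    "INSERT INTO amazon_transactions VALUES (?, ?)",
    [
        (1, "2020-01-01"), (1, "2020-01-05"),                     # 4 days apart
        (2, "2020-01-01"), (2, "2020-03-01"),                     # 60 days apart
        (3, "2020-02-10"), (3, "2020-01-01"), (3, "2020-01-20"),  # inserted out of order
    ],
)

# Same logic as the PostgreSQL query; julianday() replaces the ::date subtraction.
query = """
WITH ordered_tx AS (
    SELECT user_id,
           created_at AS tx_date,
           LAG(created_at) OVER (PARTITION BY user_id ORDER BY created_at) AS prev_tx_date
    FROM amazon_transactions
)
SELECT DISTINCT user_id
FROM ordered_tx
WHERE prev_tx_date IS NOT NULL
  AND julianday(tx_date) - julianday(prev_tx_date) <= 7
ORDER BY user_id;
"""
users = [row[0] for row in conn.execute(query)]
print(users)  # [1]
```

User 3’s purchases are inserted out of chronological order on purpose. If ORDER BY were dropped, the “previous” row could even be a later purchase, and the resulting negative difference would still pass the `<= 7` filter.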

# 2. Filtering With Aggregates (Especially HAVING vs. WHERE)

Why It’s Hard: People often don’t understand the execution order in SQL, which is: FROM -> WHERE -> GROUP BY -> HAVING -> SELECT -> ORDER BY. This order means that WHERE filters rows before aggregation, and HAVING filters after. That also, logically, means that you can’t use aggregate functions in the WHERE clause.

Common Mistake: Trying to use aggregate functions in WHERE in a grouped query and getting an error.

Example: This interview question asks you to find the total revenue made by each winery and variety. Only winery-variety combinations whose lowest score is at least 90 points should be considered.

Many will see this as an easy question and hastily write this query.

```sql
SELECT winery,
       variety,
       SUM(price) AS total_revenue
FROM winemag_p1
WHERE MIN(points) >= 90
GROUP BY winery, variety
ORDER BY winery, total_revenue DESC;
```

However, that code will throw an error stating that aggregate functions are not allowed in the WHERE clause. This pretty much explains everything. The solution? Move the filtering condition from WHERE to HAVING.

```sql
SELECT winery,
       variety,
       SUM(price) AS total_revenue
FROM winemag_p1
GROUP BY winery, variety
-- HAVING runs after GROUP BY, so aggregates are allowed here
HAVING MIN(points) >= 90
ORDER BY winery, total_revenue DESC;
```
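A quick way to see the difference is to run both versions against a toy table. This sketch assumes Python’s `sqlite3` module and made-up rows; SQLite rejects the WHERE version with its own error message, which is worded differently from PostgreSQL’s.

```python
import sqlite3

# Hypothetical rows; only the table and column names mirror the interview question.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE winemag_p1 (winery TEXT, variety TEXT, points INTEGER, price REAL)")
conn.executemany(
    "INSERT INTO winemag_p1 VALUES (?, ?, ?, ?)",
    [
        ("A", "Merlot", 92, 30.0), ("A", "Merlot", 95, 40.0),  # lowest score 92: kept
        ("A", "Syrah", 88, 25.0), ("A", "Syrah", 93, 35.0),    # lowest score 88: dropped
        ("B", "Pinot Noir", 90, 50.0),                          # lowest score 90: kept
    ],
)

# Aggregate in WHERE: the engine refuses to prepare the statement.
bad_query = """
SELECT winery, variety, SUM(price) AS total_revenue
FROM winemag_p1
WHERE MIN(points) >= 90
GROUP BY winery, variety
"""
try:
    conn.execute(bad_query).fetchall()
    where_error = None
except sqlite3.OperationalError as exc:
    where_error = str(exc)
print("WHERE version failed with:", where_error)

# Aggregate in HAVING: filters groups after aggregation.
good_query = """
SELECT winery, variety, SUM(price) AS total_revenue
FROM winemag_p1
GROUP BY winery, variety
HAVING MIN(points) >= 90
ORDER BY winery, total_revenue DESC
"""
rows = conn.execute(good_query).fetchall()
print(rows)  # [('A', 'Merlot', 70.0), ('B', 'Pinot Noir', 50.0)]
```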

# 3. Self-Joins for Time-Based or Event-Based Comparisons

Why It’s Hard: The idea of joining a table with itself is quite unintuitive, so candidates often forget it’s an option.

Common Mistake: Using subqueries and complicating the query when joining a table with itself would be simpler and often faster, especially when filtering by dates or events.

Example: Here’s a question asking you to show the change of every currency’s exchange rate between 1 January 2020 and 1 July 2020.

You can solve this with a correlated subquery in the SELECT list that fetches each currency’s July 1 exchange rate and subtracts the January 1 rate, which comes from a derived table in the FROM clause.

```sql
-- String literals use single quotes; in PostgreSQL, double quotes denote identifiers.
SELECT jan_rates.source_currency,
       (SELECT exchange_rate
        FROM sf_exchange_rate
        WHERE source_currency = jan_rates.source_currency AND date = '2020-07-01') - jan_rates.exchange_rate AS difference
FROM (SELECT source_currency, exchange_rate
      FROM sf_exchange_rate
      WHERE date = '2020-01-01'
     ) AS jan_rates;
```

This returns the correct output, but the solution is unnecessarily complicated. A simpler solution, with fewer lines of code, joins the table to itself and then applies the two date filters in the WHERE clause.

```sql
-- Same table under two aliases: jan holds January rows, jul holds July rows.
SELECT jan.source_currency,
       jul.exchange_rate - jan.exchange_rate AS difference
FROM sf_exchange_rate jan
JOIN sf_exchange_rate jul ON jan.source_currency = jul.source_currency
WHERE jan.date = '2020-01-01' AND jul.date = '2020-07-01';
```
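The sketch below, again using Python’s `sqlite3` module and invented rates, runs both the correlated-subquery version and the self-join and checks that they agree. The extra April row is only there to show that the two date filters ignore other dates.

```python
import sqlite3

# Hypothetical rates chosen to be exact in binary floating point.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sf_exchange_rate (source_currency TEXT, date TEXT, exchange_rate REAL)")
conn.executemany(
    "INSERT INTO sf_exchange_rate VALUES (?, ?, ?)",
    [
        ("EUR", "2020-01-01", 1.25), ("EUR", "2020-07-01", 1.5),
        ("GBP", "2020-01-01", 1.5), ("GBP", "2020-07-01", 1.25),
        ("GBP", "2020-04-01", 9.0),  # other dates are ignored by the filters
    ],
)

# Version 1: correlated scalar subquery for July, derived table for January.
subquery_sql = """
SELECT jan_rates.source_currency,
       (SELECT exchange_rate
        FROM sf_exchange_rate
        WHERE source_currency = jan_rates.source_currency AND date = '2020-07-01')
       - jan_rates.exchange_rate AS difference
FROM (SELECT source_currency, exchange_rate
      FROM sf_exchange_rate
      WHERE date = '2020-01-01') AS jan_rates
ORDER BY jan_rates.source_currency
"""

# Version 2: self-join with one alias per date.
self_join_sql = """
SELECT jan.source_currency,
       jul.exchange_rate - jan.exchange_rate AS difference
FROM sf_exchange_rate jan
JOIN sf_exchange_rate jul ON jan.source_currency = jul.source_currency
WHERE jan.date = '2020-01-01' AND jul.date = '2020-07-01'
ORDER BY jan.source_currency
"""

subquery_rows = conn.execute(subquery_sql).fetchall()
self_join_rows = conn.execute(self_join_sql).fetchall()
print(self_join_rows)  # [('EUR', 0.25), ('GBP', -0.25)]
print(subquery_rows == self_join_rows)  # True
```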

# 4. Subqueries vs. Common Table Expressions (CTEs)

Why It’s Hard: People often get stuck on subqueries because they learn them before Common Table Expressions (CTEs) and continue using them for any query with layered logic. However, subqueries can get messy very quickly.

Common Mistake: Using deeply nested SELECT statements when CTEs would be much simpler.

Example: In the interview question from Google and Netflix, you need to find the top actors based on their average movie rating within the genre in which they appear most frequently.

The solution using CTEs is as follows.

```sql
WITH genre_stats AS (
    SELECT actor_name,
           genre,
           COUNT(*) AS movie_count,
           AVG(movie_rating) AS avg_rating
    FROM top_actors_rating
    GROUP BY actor_name, genre
),
max_genre_count AS (
    SELECT actor_name,
           MAX(movie_count) AS max_count
    FROM genre_stats
    GROUP BY actor_name
),
top_genres AS (
    SELECT gs.*
    FROM genre_stats gs
    JOIN max_genre_count mgc ON gs.actor_name = mgc.actor_name
                            AND gs.movie_count = mgc.max_count
),
top_genre_avg AS (
    SELECT actor_name,
           MAX(avg_rating) AS max_avg_rating
    FROM top_genres
    GROUP BY actor_name
),
filtered_top_genres AS (
    SELECT tg.*
    FROM top_genres tg
    JOIN top_genre_avg tga ON tg.actor_name = tga.actor_name
                          AND tg.avg_rating = tga.max_avg_rating
),
ranked_actors AS (
    SELECT *,
           DENSE_RANK() OVER (ORDER BY avg_rating DESC) AS rank
    FROM filtered_top_genres
),
final_selection AS (
    SELECT MAX(rank) AS max_rank
    FROM ranked_actors
    WHERE rank <= 3
)
SELECT actor_name,
       genre,
       avg_rating
FROM ranked_actors
WHERE rank <=
    (SELECT max_rank
     FROM final_selection);
```

It is relatively complicated, but it still consists of seven clear CTEs, and their descriptive names make the code easy to read.

Curious what the same solution would look like using only subqueries? Here it is.

```sql
SELECT ra.actor_name,
       ra.genre,
       ra.avg_rating
FROM (
    SELECT *,
           DENSE_RANK() OVER (ORDER BY avg_rating DESC) AS rank
    FROM (
        SELECT tg.*
        FROM (
            SELECT gs.*
            FROM (
                SELECT actor_name,
                       genre,
                       COUNT(*) AS movie_count,
                       AVG(movie_rating) AS avg_rating
                FROM top_actors_rating
                GROUP BY actor_name, genre
            ) AS gs
            JOIN (
                SELECT actor_name,
                       MAX(movie_count) AS max_count
                FROM (
                    SELECT actor_name,
                           genre,
                           COUNT(*) AS movie_count,
                           AVG(movie_rating) AS avg_rating
                    FROM top_actors_rating
                    GROUP BY actor_name, genre
                ) AS genre_stats
                GROUP BY actor_name
            ) AS mgc
            ON gs.actor_name = mgc.actor_name AND gs.movie_count = mgc.max_count
        ) AS tg
        JOIN (
            SELECT actor_name,
                   MAX(avg_rating) AS max_avg_rating
            FROM (
                SELECT gs.*
                FROM (
                    SELECT actor_name,
                           genre,
                           COUNT(*) AS movie_count,
                           AVG(movie_rating) AS avg_rating
                    FROM top_actors_rating
                    GROUP BY actor_name, genre
                ) AS gs
                JOIN (
                    SELECT actor_name,
                           MAX(movie_count) AS max_count
                    FROM (
                        SELECT actor_name,
                               genre,
                               COUNT(*) AS movie_count,
                               AVG(movie_rating) AS avg_rating
                        FROM top_actors_rating
                        GROUP BY actor_name, genre
                    ) AS genre_stats
                    GROUP BY actor_name
                ) AS mgc
                ON gs.actor_name = mgc.actor_name AND gs.movie_count = mgc.max_count
            ) AS top_genres
            GROUP BY actor_name
        ) AS tga
        ON tg.actor_name = tga.actor_name AND tg.avg_rating = tga.max_avg_rating
    ) AS filtered_top_genres
) AS ra
WHERE ra.rank <= (
    SELECT MAX(rank)
    FROM (
        SELECT *,
               DENSE_RANK() OVER (ORDER BY avg_rating DESC) AS rank
        FROM (
            SELECT tg.*
            FROM (
                SELECT gs.*
                FROM (
                    SELECT actor_name,
                           genre,
                           COUNT(*) AS movie_count,
                           AVG(movie_rating) AS avg_rating
                    FROM top_actors_rating
                    GROUP BY actor_name, genre
                ) AS gs
                JOIN (
                    SELECT actor_name,
                           MAX(movie_count) AS max_count
                    FROM (
                        SELECT actor_name,
                               genre,
                               COUNT(*) AS movie_count,
                               AVG(movie_rating) AS avg_rating
                        FROM top_actors_rating
                        GROUP BY actor_name, genre
                    ) AS genre_stats
                    GROUP BY actor_name
                ) AS mgc
                ON gs.actor_name = mgc.actor_name AND gs.movie_count = mgc.max_count
            ) AS tg
            JOIN (
                SELECT actor_name,
                       MAX(avg_rating) AS max_avg_rating
                FROM (
                    SELECT gs.*
                    FROM (
                        SELECT actor_name,
                               genre,
                               COUNT(*) AS movie_count,
                               AVG(movie_rating) AS avg_rating
                        FROM top_actors_rating
                        GROUP BY actor_name, genre
                    ) AS gs
                    JOIN (
                        SELECT actor_name,
                               MAX(movie_count) AS max_count
                        FROM (
                            SELECT actor_name,
                                   genre,
                                   COUNT(*) AS movie_count,
                                   AVG(movie_rating) AS avg_rating
                            FROM top_actors_rating
                            GROUP BY actor_name, genre
                        ) AS genre_stats
                        GROUP BY actor_name
                    ) AS mgc
                    ON gs.actor_name = mgc.actor_name AND gs.movie_count = mgc.max_count
                ) AS top_genres
                GROUP BY actor_name
            ) AS tga
            ON tg.actor_name = tga.actor_name AND tg.avg_rating = tga.max_avg_rating
        ) AS filtered_top_genres
    ) AS ranked_actors
    WHERE rank <= 3
);
```

There is redundant logic repeated across subqueries. How many subqueries is that? I have no idea. The code is impossible to maintain. Even though I just wrote it, I’d still need half a day to understand it if I wanted to change something tomorrow. Additionally, the completely meaningless subquery aliases do not help.
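For a smaller, runnable taste of the CTE style, here is a sketch of just the first three CTEs (genre_stats, max_genre_count, top_genres), which find each actor’s most frequent genre and the average rating in it. It uses Python’s `sqlite3` module and hypothetical rows, and it deliberately stops before the tie-breaking and ranking steps.

```python
import sqlite3

# Hypothetical ratings; only the table and column names mirror the interview question.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE top_actors_rating (actor_name TEXT, genre TEXT, movie_rating REAL)")
conn.executemany(
    "INSERT INTO top_actors_rating VALUES (?, ?, ?)",
    [
        ("Ann", "Drama", 8.0), ("Ann", "Drama", 9.0), ("Ann", "Comedy", 6.0),
        ("Bob", "Action", 7.0), ("Bob", "Action", 7.5), ("Bob", "Drama", 9.5),
    ],
)

# Each CTE is defined once and referenced by name, instead of being re-nested.
query = """
WITH genre_stats AS (
    SELECT actor_name, genre,
           COUNT(*) AS movie_count,
           AVG(movie_rating) AS avg_rating
    FROM top_actors_rating
    GROUP BY actor_name, genre
),
max_genre_count AS (
    SELECT actor_name, MAX(movie_count) AS max_count
    FROM genre_stats
    GROUP BY actor_name
),
top_genres AS (
    SELECT gs.*
    FROM genre_stats gs
    JOIN max_genre_count mgc
      ON gs.actor_name = mgc.actor_name AND gs.movie_count = mgc.max_count
)
SELECT actor_name, genre, avg_rating
FROM top_genres
ORDER BY actor_name;
"""
rows = conn.execute(query).fetchall()
print(rows)  # [('Ann', 'Drama', 8.5), ('Bob', 'Action', 7.25)]
```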

# 5. Handling NULLs in Logic

Why It’s Hard: Candidates often think that NULL is equal to something. It’s not. NULL isn’t equal to anything — not even itself. Logic involving NULLs behaves differently from logic involving actual values.

Common Mistake: Using = NULL instead of IS NULL in filtering or missing output rows because NULLs break the condition logic.
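The difference between `= NULL` and `IS NULL` is easy to demonstrate. This sketch uses Python’s `sqlite3` module with a hypothetical `customers` table and `email` column that are not part of the interview question.

```python
import sqlite3

# Hypothetical table: two customers have no email on file.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (customer_id INTEGER, email TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [(1, "a@example.com"), (2, None), (3, None)],
)

# "= NULL" evaluates to NULL for every row, and WHERE keeps only TRUE rows.
eq_rows = conn.execute(
    "SELECT customer_id FROM customers WHERE email = NULL"
).fetchall()

# "IS NULL" is the predicate that actually tests for missing values.
is_rows = conn.execute(
    "SELECT customer_id FROM customers WHERE email IS NULL ORDER BY customer_id"
).fetchall()

# Even NULL = NULL is NULL (returned to Python as None), not TRUE.
null_eq_null = conn.execute("SELECT NULL = NULL").fetchone()[0]

print(eq_rows)       # []
print(is_rows)       # [(2,), (3,)]
print(null_eq_null)  # None
```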

Example: There’s an interview question by IBM that asks you to calculate the total number of interactions and the total number of contents created for each customer.

It doesn’t sound too tricky, so you might write this solution with two CTEs, where one CTE counts the number of interactions per customer, while the other counts the number of content items created by a customer. In the final SELECT, you FULL OUTER JOIN the two CTEs, and you have the solution. Right?

```sql
WITH interactions_summary AS (
    SELECT customer_id,
           COUNT(*) AS total_interactions
    FROM customer_interactions
    GROUP BY customer_id
),
content_summary AS (
    SELECT customer_id,
           COUNT(*) AS total_content_items
    FROM user_content
    GROUP BY customer_id
)
SELECT i.customer_id,
       i.total_interactions,
       c.total_content_items
FROM interactions_summary AS i
FULL OUTER JOIN content_summary AS c ON i.customer_id = c.customer_id
ORDER BY customer_id;
```

Almost right. Here’s the output. (By the way, you see double quotation marks (“”) instead of NULL. That’s how the StrataScratch UI displays it, but trust me, the engine still treats them as what they are: NULL values.)

The highlighted rows contain NULLs, which makes the output incorrect. A NULL is neither a customer ID nor a count of interactions or content items, and those are exactly what the question explicitly asks you to show.

What we’re missing in the above solution is COALESCE() to handle NULLs in the final SELECT. Now, all the customers without interactions will get their IDs from the content_summary CTE. Also, for customers that don’t have interactions, or content, or both, we’ll now replace NULL with 0, which is a valid number.

```sql
WITH interactions_summary AS (
    SELECT customer_id,
           COUNT(*) AS total_interactions
    FROM customer_interactions
    GROUP BY customer_id
),
content_summary AS (
    SELECT customer_id,
           COUNT(*) AS total_content_items
    FROM user_content
    GROUP BY customer_id
)
-- Take the ID from whichever side matched; turn missing counts into 0
SELECT COALESCE(i.customer_id, c.customer_id) AS customer_id,
       COALESCE(i.total_interactions, 0) AS total_interactions,
       COALESCE(c.total_content_items, 0) AS total_content_items
FROM interactions_summary AS i
FULL OUTER JOIN content_summary AS c ON i.customer_id = c.customer_id
ORDER BY customer_id;
```
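Here is a sketch of the same COALESCE fix on toy data, using Python’s `sqlite3` module. SQLite only added FULL OUTER JOIN in version 3.39, so to stay portable the sketch emulates the full join with a LEFT JOIN plus a UNION ALL of unmatched content rows; on PostgreSQL you would use FULL OUTER JOIN directly, as in the query above. The rows are hypothetical.

```python
import sqlite3

# Hypothetical rows: customer 1 only interacts, 3 only creates content, 2 does both.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer_interactions (customer_id INTEGER)")
conn.execute("CREATE TABLE user_content (customer_id INTEGER)")
conn.executemany("INSERT INTO customer_interactions VALUES (?)", [(1,), (1,), (2,)])
conn.executemany("INSERT INTO user_content VALUES (?)", [(2,), (3,), (3,)])

query = """
WITH interactions_summary AS (
    SELECT customer_id, COUNT(*) AS total_interactions
    FROM customer_interactions
    GROUP BY customer_id
),
content_summary AS (
    SELECT customer_id, COUNT(*) AS total_content_items
    FROM user_content
    GROUP BY customer_id
),
joined AS (
    -- Every customer with interactions, plus matching content if any.
    SELECT i.customer_id AS i_id, i.total_interactions,
           c.customer_id AS c_id, c.total_content_items
    FROM interactions_summary AS i
    LEFT JOIN content_summary AS c ON i.customer_id = c.customer_id
    UNION ALL
    -- Content creators with no interactions at all (the right-only rows).
    SELECT NULL, NULL, c.customer_id, c.total_content_items
    FROM content_summary AS c
    WHERE NOT EXISTS (
        SELECT 1 FROM interactions_summary AS i2 WHERE i2.customer_id = c.customer_id
    )
)
SELECT COALESCE(i_id, c_id) AS customer_id,
       COALESCE(total_interactions, 0) AS total_interactions,
       COALESCE(total_content_items, 0) AS total_content_items
FROM joined
ORDER BY customer_id;
"""
rows = conn.execute(query).fetchall()
print(rows)  # [(1, 2, 0), (2, 1, 1), (3, 0, 2)]
```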

# 6. Group-Based Deduplication

Why It’s Hard: Group-based deduplication means you’re selecting one row per group, e.g., “most recent”, “highest score”, etc. At first, it sounds like you only need to pick one row per user. But in a GROUP BY query, every selected column must be either grouped or aggregated, and you often need the full row, not just the single aggregated value that GROUP BY returns.

Common Mistake: Using GROUP BY + LIMIT 1 (or DISTINCT ON, which is PostgreSQL-specific) instead of ROW_NUMBER(), or RANK() if you want ties included.

Example: This question asks you to identify the best-selling item for each month, and there’s no need to separate months by year. Sales are calculated as unitprice * quantity.

The naive approach would be this. First, extract the sale month from invoicedate, select description, and find the total sales by summing unitprice * quantity. Then, to get the total sales by month and product description, we simply GROUP BY those two columns. Finally, we only need to use ORDER BY to sort the output from the best to the worst-selling product and use LIMIT 1 to output only the first row, i.e., the best-selling item.

```sql
SELECT DATE_PART('MONTH', invoicedate) AS sale_month,
       description,
       SUM(unitprice * quantity) AS total_paid
FROM online_retail
GROUP BY sale_month, description
ORDER BY total_paid DESC
LIMIT 1;
```

As I said, this is naive; the output somewhat resembles what we need, but we need this for every month, not just one.

One of the correct approaches is to use the RANK() window function. With this approach, we follow a similar method to the previous code. The difference is that the query now becomes a subquery in the FROM clause. In addition, we use RANK() to partition the data by month and then rank the rows within each partition (i.e., for each month separately) from the best-selling to the worst-selling item.

Then, in the main query, we simply select the required columns and output only rows where the rank is 1 using the WHERE clause.

```sql
SELECT month,
       description,
       total_paid
FROM
  (SELECT DATE_PART('month', invoicedate) AS month,
          description,
          SUM(unitprice * quantity) AS total_paid,
          -- rank items within each month, best seller first
          RANK() OVER (PARTITION BY DATE_PART('month', invoicedate)
                       ORDER BY SUM(unitprice * quantity) DESC) AS rnk
   FROM online_retail
   GROUP BY month, description) AS tmp
WHERE rnk = 1;
```
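To see how RANK() and ROW_NUMBER() treat ties, here is a sketch with Python’s `sqlite3` module and hypothetical invoice rows in which February has two equally selling items. It aggregates in a CTE first and uses `strftime('%m', ...)` in place of PostgreSQL’s DATE_PART(); the `TEMPLATE` string is an illustrative helper, not part of the original solution.

```python
import sqlite3

# Hypothetical invoices: January has a clear winner, February has a tie.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE online_retail "
    "(invoicedate TEXT, description TEXT, unitprice REAL, quantity INTEGER)"
)
conn.executemany(
    "INSERT INTO online_retail VALUES (?, ?, ?, ?)",
    [
        ("2011-01-05", "MUG", 2.0, 10),  # January: MUG sells 20.0
        ("2011-01-20", "LAMP", 5.0, 2),  # January: LAMP sells 10.0
        ("2011-02-03", "MUG", 2.0, 5),   # February: MUG sells 10.0
        ("2011-02-14", "LAMP", 5.0, 2),  # February: LAMP ties at 10.0
    ],
)

# {window} is filled in with a ranking expression over the monthly totals.
TEMPLATE = """
WITH monthly AS (
    SELECT CAST(strftime('%m', invoicedate) AS INTEGER) AS month,
           description,
           SUM(unitprice * quantity) AS total_paid
    FROM online_retail
    GROUP BY month, description
)
SELECT month, description, total_paid
FROM (SELECT *, {window} AS rnk FROM monthly) AS ranked
WHERE rnk = 1
ORDER BY month, description;
"""

# RANK() keeps every item tied for first place within a month.
rank_rows = conn.execute(TEMPLATE.format(
    window="RANK() OVER (PARTITION BY month ORDER BY total_paid DESC)"
)).fetchall()

# ROW_NUMBER() keeps exactly one row per month; description breaks ties
# so the choice is deterministic.
row_number_rows = conn.execute(TEMPLATE.format(
    window="ROW_NUMBER() OVER (PARTITION BY month ORDER BY total_paid DESC, description)"
)).fetchall()

print(rank_rows)        # [(1, 'MUG', 20.0), (2, 'LAMP', 10.0), (2, 'MUG', 10.0)]
print(row_number_rows)  # [(1, 'MUG', 20.0), (2, 'LAMP', 10.0)]
```

RANK() returns both February items, while ROW_NUMBER() keeps one; without the `description` tiebreaker, which row ROW_NUMBER() keeps would be arbitrary.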

# Conclusion

The six concepts we’ve covered commonly appear in SQL coding interview questions. Pay attention to them, practice interview questions that involve these concepts, learn the correct approaches, and you’ll significantly improve your chances in your interviews.

Nate Rosidi is a data scientist who works in product strategy. He’s also an adjunct professor teaching analytics and the founder of StrataScratch, a platform helping data scientists prepare for their interviews with real interview questions from top companies. Nate writes on the latest trends in the career market, gives interview advice, shares data science projects, and covers everything SQL.


Top Data Science Courses 2025

Image by Editor | ChatGPT

# Introduction

There are a lot of data science courses out there. Class Central alone lists over 20,000 of them. That’s crazy! I remember looking for data science courses in 2013 and having a very difficult time coming across any. There was Andrew Ng’s machine learning course, Bill Howe’s Introduction to Data Science course on Coursera, the Johns Hopkins Coursera specialization… and that’s about it IIRC.

But don’t worry; now there are more than 20,000. I know what you’re thinking: with 20,000 or more courses out there, it should be really easy to find the best, high-quality ones, right? 🙄 While that isn’t the case, there are a lot of quality offerings out there, and a lot of diverse offerings as well. Gone are the days of monolithic “data science” courses; today you can find very specific training on performing specific operations on particular cloud vendor platforms, using ChatGPT to improve your analytics workflow, and generative AI for poets (OK, not sure about that last one…). There are also options for everything from one-hour targeted courses to months-long specializations with multiple constituent courses on broad topics. Looking to train for free? There are lots of options. So, too, are there options for those looking to pay to have their progress recognized with a credential of some sort.

# Top Data Science Courses of 2025

Let’s not waste any more time. Here is a collection of 10 courses (or, in a few cases, collections of courses) that are diverse in terms of topics, lengths, time commitments, credentials, vendor neutrality vs. specificity, and costs. I have tried to mix topics and cover the bases of contemporary cutting-edge techniques that data scientists are looking to add to their repertoire. If you’re looking for data science courses, there’s bound to be something in here that appeals to you.

// 1. Retrieval Augmented Generation (RAG) Course

Platform: Coursera

Organizer: DeepLearning.AI

Credential: Coursera course certificate

- Teaches how to build end-to-end RAG systems by linking large language models to external data: students learn to design retrievers, vector databases, and LLM prompts tailored to real-world needs

- Covers core RAG components and trade-offs: learn different retrieval methods (semantic search, BM25, Reciprocal Rank Fusion, etc.) and how to balance cost, speed, and quality for each part of the pipeline

- Hands-on, project-driven learning: assignments guide you to “build your first RAG system by writing retrieval and prompt functions”, compare retrieval techniques, scale with Weaviate (vector DB), and construct a domain-specific chatbot on real data

- Realistic scenario exercises: implement a chatbot that answers FAQs from a custom dataset, handling challenges like dynamic pricing and logging for reliability

Differentiator: Deep practical focus on every piece of a RAG pipeline, which is perfect for learners who want step-by-step experience building, optimizing, and evaluating RAG systems with production tools.

// 2. IBM RAG & Agentic AI Professional Certificate

Platform: Coursera

Organizer: IBM

Credential: Coursera Professional Certificate

- Focuses on cutting-edge generative AI engineering: covers prompt engineering, agentic AI (multi-agent systems), and multimodal (text, image, audio) integration for context-aware applications

- Teaches RAG pipelines: building efficient RAG systems that connect LLMs to external data sources (text, image, audio), using tools like LangChain and LangGraph

- Emphasizes practical AI tool integration: hands-on labs with LangChain, CrewAI, BeeAI, etc., and building full-stack GenAI applications (Python using Flask/Gradio) powered by LLMs

- Develops autonomous AI agents: covers designing and orchestrating complex AI agent workflows and integrations to solve real-world tasks

Differentiator: Unique emphasis on agentic AI and integration of the latest AI frameworks (LangChain, LangGraph, CrewAI, etc.), making it ideal for developers wanting to master the newest generative AI innovations.

// 3. ChatGPT Advanced Data Analysis

Platform: Coursera

Organizer: Vanderbilt University

Credential: Coursera course certificate

- Learn to leverage ChatGPT’s Advanced Data Analysis: automate a variety of data and productivity tasks, including converting Excel data into charts and slides, extracting insights from PDFs, and generating presentations from documents

- Hands-on use-cases: turning an Excel file into visualizations and a PowerPoint presentation, or building a chatbot that answers questions about PDF content, using natural language prompting

- Emphasizes prompt engineering for ADA: teaches how to write effective prompts to get the best results from ChatGPT’s Advanced Data Analysis tool, empowering you to efficiently direct it

- No coding experience required: designed for beginners; learners practice “conversing with ChatGPT ADA” to solve problems, making it accessible for non-technical users seeking to boost productivity

Differentiator: A unique, beginner-friendly focus on automating everyday analytics and content tasks using ChatGPT’s Advanced Data Analysis, ideal for those looking to harness generative AI capabilities without writing code.

// 4. Google Advanced Data Analytics Professional Certificate

Platform: Coursera

Organizer: Google

Credential: Coursera Professional Certificate + Credly badge (ACE credit-recommended)

- Comprehensive 8-course series on advanced analytics: covers statistical analysis, regression, machine learning, predictive modeling, and experimental design for handling large datasets

- Emphasizes data visualization and storytelling: students learn to create impactful visualizations and apply statistical methods to investigate data, then communicate insights clearly to stakeholders

- Project-based, hands-on learning: includes lab work with Jupyter Notebook, Python, and Tableau, and culminates in a capstone project, with learners building portfolio pieces to demonstrate real-world analytics skills

- Built for career advancement: designed for people who already have foundational analytics knowledge and want to step up to data science roles, preparing learners for roles like senior data analyst or junior data scientist

Differentiator: Google-created curriculum that bridges basic data skills to advanced analytics, with strong emphasis on modern ML and predictive techniques, making it stand out for those aiming for higher-level data roles.

// 5. IBM Data Engineering Professional Certificate

Platform: Coursera

Organizer: IBM

Credential: Coursera Professional Certificate + IBM Digital Badge

- 16-course program covering core data engineering skills: Python programming, SQL and relational databases (MySQL, PostgreSQL, IBM Db2), data warehousing, and ETL concepts

- Extensive toolset coverage: students gain working knowledge of NoSQL and big data technologies (MongoDB, Cassandra, Hadoop) and the Apache Spark ecosystem (Spark SQL, Spark MLlib, Spark Streaming) for large-scale data processing

- Focus on data pipelines and ETL: teaches how to extract, transform, and load data using Python and Bash scripting, how to build and orchestrate pipelines with tools like Apache Airflow and Kafka, and how to administer relational databases and build BI dashboards

- Project-driven curriculum: practical labs and projects include designing relational databases, querying real datasets with SQL, creating an Airflow+Kafka ETL pipeline, implementing a Spark ML model, and deploying a multi-database data platform

Differentiator: Broad, entry-level-friendly data engineering track (no prior coding required) from IBM, giving a job-ready foundation, while also introducing how generative AI tools can be used in data engineering workflows.

// 6. Data Analysis with Python

Platform: freeCodeCamp

Credential: Free certification

- Free, self-paced certification on Python for data analysis: fundamentals such as reading data from sources (CSV files, SQL databases, HTML) and using core libraries like NumPy, Pandas, Matplotlib, and Seaborn for processing and visualization

- Covers data manipulation and cleaning: introduces key techniques for handling data (cleaning duplicates, filtering) and performing basic analytics with Python tools, with learners practicing how to use Pandas for transforming data and Matplotlib/Seaborn for charting results

- Extensive hands-on exercises: includes many coding challenges and real-world projects embedded in Jupyter-style lessons, with projects such as “Page View Time Series Visualizer” and “Sea Level Predictor”

- Intermediate-level, in-depth curriculum: approximately 300 hours of content covering everything from basic Python through advanced data projects, designed for dedicated self-learners seeking a solid foundation in open-source data tools

Differentiator: Completely free and project-focused, with an emphasis on fundamental Python data libraries, and ideal for learners on a budget who want a thorough grounding in open-source data analysis tools without any enrollment fees.

// 7. Kaggle Learn Micro-Courses

Platform: Kaggle

Credential: Free certificates of completion

- Free, interactive micro-courses on the Kaggle platform covering a wide range of practical data topics (Python, Pandas, data visualization, SQL, machine learning, computer vision, etc.), with each course taking ~3–5 hours

- Highly practical and hands-on: each lesson is a notebook-style tutorial or short coding challenge; the Pandas course emphasizes solving “short hands-on challenges to perfect your data manipulation skills”, and the data cleaning course focuses on real-world messy data

- Self-paced and bite-sized: designed to be fun and fast, with concise content and instant feedback

- Integrated with Kaggle’s community: learners can easily switch to Kaggle’s free notebook environment to practice on real datasets and even enter competitions

Differentiator: Offers a game-like, learning-by-doing approach on Kaggle’s own platform, and is one of the quickest ways to acquire practical data skills through short, challenge-driven modules and immediate coding feedback.

// 8. Lakehouse Fundamentals

Platform: Databricks Academy

Credential: Free digital badge

- Short, introductory self-paced course (~1 hour of video) on the Databricks Data Intelligence Platform

- Covers Databricks basics: explains the lakehouse architecture and key products, and shows how Databricks brings together data engineering, warehousing, data science, and AI in one platform

- No prerequisites: designed for absolute beginners with no prior Databricks or data platform experience

Differentiator: Fast, vendor-provided overview of Databricks’ lakehouse vision, and the quickest way to understand what Databricks offers for data and AI projects directly from the source.

// 9. Hands-On Snowflake Essentials

Platform: Snowflake University

Credential: Free digital badges

- Collection of free, hands-on Snowflake workshops: topics range from beginner-friendly Data Warehousing and Data Lake fundamentals to advanced use-cases in Data Engineering and Data Science

- Very interactive learning: each workshop features short instructional videos plus practical labs, and you must submit lab work on the Snowflake platform, which is auto-graded

- Earnable badges: successful completion of each workshop grants you a digital badge (many are free) that you can share on LinkedIn

- Structured track: Snowflake recommends a learning path (starting with Data Warehousing and progressing through Collaboration, Data Lakes, etc.), ensuring a logical progression from basics to more specialized topics

Differentiator: Gamified, lab-centric training path with real-time assessment, standing out for its required hands-on lab submissions and shareable badges, making it ideal for learners who want concrete proof of Snowflake expertise.

// 10. AWS Skill Builder Generative AI Courses

Platform: AWS Skill Builder

Credential: Digital badge (for select plans/assessments)

- Comprehensive set of generative AI courses and labs: aimed at various roles, the offerings span from fundamental overviews to hands-on technical training on AWS AI services

- Covers generative AI topics on AWS: e.g. foundational courses for executives, learning plans for developers and ML practitioners, and deep dives into AWS tools like Amazon Bedrock (foundation model service), LangChain integrations, and Amazon Q (an AI-powered assistant)

- Role-based learning paths: includes titles like “Generative AI for Executives”, “Generative AI Learning Plan for Developers”, “Building Generative AI Applications Using Amazon Bedrock”, and more, each tailored to prepare learners for building or using gen-AI solutions on AWS

- Hands-on practice: many AWS gen-AI courses come with labs to try out services (e.g. building a generative search with Q, deploying LLMs on SageMaker, or using Bedrock APIs), with earned skills directly tied to AWS’s AI/ML ecosystem

Differentiator: Deep AWS integration, as these courses teach you how to leverage AWS’ latest generative AI tools and platforms, making them best suited for learners already in the AWS ecosystem who want to build production-ready gen-AI applications on AWS.

Matthew Mayo (@mattmayo13) holds a master’s degree in computer science and a graduate diploma in data mining. As managing editor of KDnuggets & Statology, and contributing editor at Machine Learning Mastery, Matthew aims to make complex data science concepts accessible. His professional interests include natural language processing, language models, machine learning algorithms, and exploring emerging AI. He is driven by a mission to democratize knowledge in the data science community. Matthew has been coding since he was 6 years old.


Build character consistent storyboards using Amazon Nova in Amazon Bedrock – Part 2

Although careful prompt crafting can yield good results, achieving professional-grade visual consistency often requires adapting the underlying model itself. Building on the prompt engineering and character development approach covered in Part 1 of this two-part series, we now push the consistency level for specific characters by fine-tuning an Amazon Nova Canvas foundation model (FM). Through fine-tuning techniques, creators can instruct the model to maintain precise control over character appearances, expressions, and stylistic elements across multiple scenes.

In this post, we take an animated short film, Picchu, produced by FuzzyPixel from Amazon Web Services (AWS), prepare training data by extracting key character frames, and fine-tune a character-consistent model for the main character Mayu and her mother, so we can quickly generate storyboard concepts for new sequels like the following images.

Solution overview

To implement an automated workflow, we propose the following comprehensive solution architecture that uses AWS services for an end-to-end implementation.

The workflow consists of the following steps:

1. The user uploads a video asset to an Amazon Simple Storage Service (Amazon S3) bucket.

2. Amazon Elastic Container Service (Amazon ECS) is triggered to process the video asset.

3. Amazon ECS downsamples the frames, selects those containing the character, and then center-crops them to produce the final character images.

4. Amazon ECS invokes an Amazon Nova model (Amazon Nova Pro) from Amazon Bedrock to create captions from the images.

5. Amazon ECS writes the image captions and metadata to the S3 bucket.

6. The user uses a notebook environment in Amazon SageMaker AI to invoke the model training job.

7. The user fine-tunes a custom Amazon Nova Canvas model by invoking the Amazon Bedrock create_model_customization_job and create_provisioned_model_throughput API calls to create a custom model available for inference.

This workflow is structured in two distinct phases. The initial phase, in Steps 1–5, focuses on preparing the training data. In this post, we walk through an automated pipeline to extract images from an input video and then generate labeled training data. The second phase, in Steps 6–7, focuses on fine-tuning the Amazon Nova Canvas model and performing test inference using the custom-trained model. For these latter steps, we provide the preprocessed image data and comprehensive example code in the following GitHub repository to guide you through the process.

Prepare the training data

Let’s begin with the first phase of our workflow. In our example, we build an automated video object/character extraction pipeline to extract high-resolution images with accurate caption labels using the following steps.

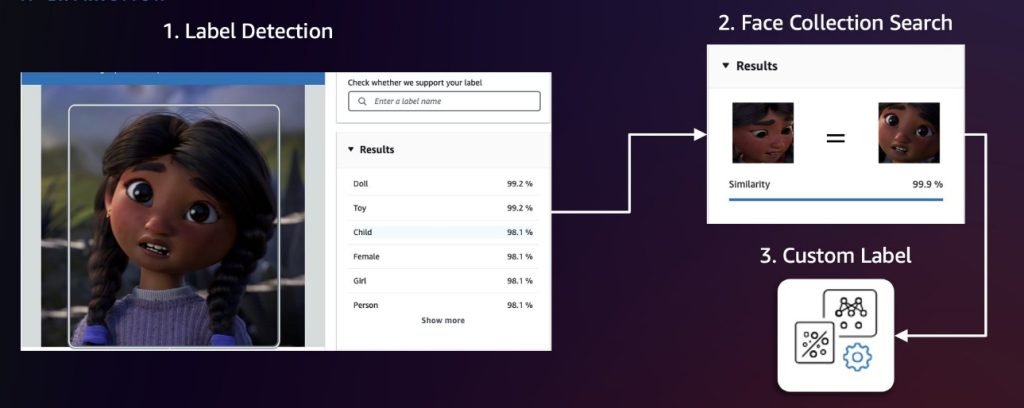

Creative character extraction

We recommend first sampling video frames at fixed intervals (for example, 1 frame per second). Then, apply Amazon Rekognition label detection and face collection search to identify frames and characters of interest. Label detection can identify over 2,000 unique labels and locate their positions within frames, making it ideal for initial detection of general character categories or non-human characters. To distinguish between different characters, we then use the Amazon Rekognition feature to search faces in a collection. This feature identifies and tracks characters by matching their faces against a pre-populated face collection. If these two approaches aren’t precise enough, we can use Amazon Rekognition Custom Labels to train a custom model for detecting specific characters. The following diagram illustrates this workflow.
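The sampling and label-filtering steps above can be sketched in plain Python. This is a minimal illustration, not the authors' pipeline: it assumes the per-frame Amazon Rekognition DetectLabels responses have already been fetched (for example with the Boto3 rekognition client's detect_labels call) and stored in a dictionary keyed by frame index. The target label name and the 90% confidence cutoff are hypothetical values, and face collection search and Custom Labels are not shown.

```python
from typing import Dict, List


def sample_frame_indices(total_frames: int, video_fps: float, sample_fps: float = 1.0) -> List[int]:
    """Return the indices of frames kept when sampling at a fixed rate.

    With sample_fps=1.0 this keeps roughly one frame per second of video.
    """
    if total_frames <= 0 or video_fps <= 0 or sample_fps <= 0:
        return []
    # Never sample faster than the source video; this keeps indices strictly increasing.
    sample_fps = min(sample_fps, video_fps)
    step = video_fps / sample_fps  # source frames between two consecutive samples
    indices: List[int] = []
    i = 0
    while True:
        idx = int(i * step)
        if idx >= total_frames:
            break
        indices.append(idx)
        i += 1
    return indices


def frames_with_label(responses: Dict[int, dict], target: str, min_confidence: float = 90.0) -> List[int]:
    """Keep frames whose DetectLabels-style response contains `target` with at
    least one located instance (bounding box) at or above `min_confidence`."""
    kept: List[int] = []
    for frame_idx in sorted(responses):
        for label in responses[frame_idx].get("Labels", []):
            if label.get("Name") != target:
                continue
            instances = label.get("Instances", [])
            if any(inst.get("Confidence", 0.0) >= min_confidence for inst in instances):
                kept.append(frame_idx)
                break
    return kept
```

Requiring a located instance, rather than just an image-level label, matters here because the next step crops around the instance's bounding box.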

After detection, we center-crop each character with appropriate pixel padding and then run a deduplication algorithm using the Amazon Titan Multimodal Embeddings model to remove semantically similar images above a threshold value. Doing so helps us build a diverse dataset because redundant or nearly identical frames could lead to model overfitting (when a model learns the training data too precisely, including its noise and fluctuations, making it perform poorly on new, unseen data). We can calibrate the similarity threshold to fine-tune what we consider to be identical images, so we can better control the balance between dataset diversity and redundancy elimination.
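A minimal sketch of the cropping and deduplication steps, under stated assumptions: the bounding box follows Rekognition's ratio-based BoundingBox format (Left, Top, Width, Height as fractions of the frame), "center-crop" is interpreted here as a square crop centered on the character, and the embeddings are assumed to have been computed beforehand with Amazon Titan Multimodal Embeddings (that call is not shown). The greedy cosine-similarity filter is one simple deduplication strategy, not necessarily the algorithm the authors used, and the padding and threshold defaults are illustrative.

```python
import math
from typing import List, Sequence, Tuple


def padded_square_crop(box: dict, img_w: int, img_h: int, padding: int = 32) -> Tuple[int, int, int, int]:
    """Return (x0, y0, x1, y1) pixel coordinates of a square crop centered on a
    Rekognition-style BoundingBox (ratios of frame size), expanded by `padding`
    pixels on each side and shifted to stay inside the frame."""
    left, top = box["Left"] * img_w, box["Top"] * img_h
    w, h = box["Width"] * img_w, box["Height"] * img_h
    cx, cy = left + w / 2, top + h / 2
    side = min(max(w, h) + 2 * padding, img_w, img_h)  # never larger than the frame
    x0 = min(max(cx - side / 2, 0.0), img_w - side)
    y0 = min(max(cy - side / 2, 0.0), img_h - side)
    return int(x0), int(y0), int(x0 + side), int(y0 + side)


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def dedupe_by_embedding(embeddings: Sequence[Sequence[float]], threshold: float = 0.95) -> List[int]:
    """Greedy deduplication: keep an image only if its cosine similarity to every
    image kept so far is below `threshold`. Returns indices of kept images."""
    kept: List[int] = []
    for i, emb in enumerate(embeddings):
        if all(cosine_similarity(emb, embeddings[j]) < threshold for j in kept):
            kept.append(i)
    return kept
```

Raising the threshold keeps more near-duplicates (more data, less diversity); lowering it discards more frames, which is the calibration trade-off described above.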

Data labeling

We generate captions for each image using Amazon Nova Pro in Amazon Bedrock and then upload the image and label manifest file to an Amazon S3 location. This process focuses on two critical aspects of prompt engineering: character description to help the FM identify and name the characters based on their unique attributes, and varied description generation that avoids repetitive patterns in the caption (for example, “an animated character”). The following is an example prompt template used during our data labeling process:
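A hedged sketch of building one captioning request for Amazon Nova Pro through the Bedrock Runtime Converse API. The prompt wording below is a hypothetical stand-in, not the authors' template; it only reflects the two goals described above (naming characters by their attributes and avoiding repetitive phrasing). The default model ID is an assumption, and some Regions require a cross-Region inference profile ID instead.

```python
from typing import Dict

# Hypothetical prompt wording (not the authors' template).
CAPTION_PROMPT_TEMPLATE = (
    "Write a one- or two-sentence caption for this storyboard frame. "
    "Identify characters by name using these descriptions: {characters}. "
    "Describe pose, expression, and setting, and vary your wording; do not "
    "start with generic phrases such as 'an animated character'."
)


def build_caption_request(image_bytes: bytes, character_notes: Dict[str, str],
                          model_id: str = "amazon.nova-pro-v1:0",
                          image_format: str = "png") -> dict:
    """Build keyword arguments for the bedrock-runtime `converse` call."""
    notes = "; ".join(f"{name}: {desc}" for name, desc in character_notes.items())
    return {
        "modelId": model_id,
        "messages": [{
            "role": "user",
            "content": [
                {"image": {"format": image_format, "source": {"bytes": image_bytes}}},
                {"text": CAPTION_PROMPT_TEMPLATE.format(characters=notes)},
            ],
        }],
        # A moderate temperature encourages varied captions; values are illustrative.
        "inferenceConfig": {"maxTokens": 200, "temperature": 0.7},
    }


def caption_from_response(response: dict) -> str:
    """Extract the generated caption text from a Converse API response."""
    return response["output"]["message"]["content"][0]["text"].strip()


# Usage with a real client (not executed here):
#   import boto3
#   runtime = boto3.client("bedrock-runtime")
#   caption = caption_from_response(runtime.converse(**build_caption_request(png_bytes, notes)))
```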

The data labeling output is formatted as a JSONL file, where each line pairs an image reference Amazon S3 path with a caption generated by Amazon Nova Pro. This JSONL file is then uploaded to Amazon S3 for training. The following is an example of the file:
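Producing such a manifest is straightforward. The sketch below writes (S3 URI, caption) pairs as JSON Lines; it is an illustration rather than the authors' file. The key names image-ref and caption are an assumption based on the format Amazon Bedrock documents for image-generation model customization, so confirm them against the current Bedrock documentation before training. Bucket and object names in the usage comment are hypothetical.

```python
import json
from typing import Iterable, Tuple


def to_jsonl(records: Iterable[Tuple[str, str]],
             image_key: str = "image-ref", caption_key: str = "caption") -> str:
    """Serialize (s3_uri, caption) pairs as JSON Lines, one object per line.

    The default key names are an assumption; verify them in the Bedrock docs.
    """
    lines = []
    for s3_uri, caption in records:
        if not s3_uri.startswith("s3://"):
            raise ValueError(f"expected an s3:// URI, got {s3_uri!r}")
        lines.append(json.dumps({image_key: s3_uri, caption_key: caption}, ensure_ascii=False))
    return "".join(line + "\n" for line in lines)


# Usage (hypothetical bucket and keys; not executed here):
#   body = to_jsonl([("s3://my-bucket/frames/mayu_0001.png", "Mayu waves from a rooftop.")])
#   boto3.client("s3").put_object(Bucket="my-bucket", Key="train/train.jsonl", Body=body.encode("utf-8"))
```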

Human verification

For enterprise use cases, we recommend incorporating a human-in-the-loop process to verify labeled data before proceeding with model training. This verification can be implemented using Amazon Augmented AI (Amazon A2I), a service that helps annotators verify both image and caption quality. For more details, refer to Get Started with Amazon Augmented AI.

Fine-tune Amazon Nova Canvas

Now that we have the training data, we can fine-tune the Amazon Nova Canvas model in Amazon Bedrock. Amazon Bedrock requires an AWS Identity and Access Management (IAM) service role to access the S3 bucket where you stored your model customization training data. For more details, see Model customization access and security. You can perform the fine-tuning task directly on the Amazon Bedrock console or use the Boto3 API. We explain both approaches in this post, and you can find the end-to-end code sample in picchu-finetuning.ipynb.
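As a sketch of what that service role might contain, the helpers below build a trust policy that lets Amazon Bedrock assume the role and a permissions policy scoped to the training and output buckets. These documents are assumptions modeled on the common pattern for Bedrock customization roles, not the authors' exact role. The account ID and bucket names are hypothetical, and you may want to tighten the trust policy further (for example with an aws:SourceArn condition).

```python
def bedrock_trust_policy(account_id: str) -> dict:
    """Trust policy letting the Amazon Bedrock service assume the role,
    limited to requests originating from `account_id`."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "bedrock.amazonaws.com"},
            "Action": "sts:AssumeRole",
            "Condition": {"StringEquals": {"aws:SourceAccount": account_id}},
        }],
    }


def s3_access_policy(training_bucket: str, output_bucket: str) -> dict:
    """Permissions to read training data and to write job output."""
    def arns(bucket: str) -> list:
        return [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"]

    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": ["s3:GetObject", "s3:ListBucket"],
             "Resource": arns(training_bucket)},
            {"Effect": "Allow", "Action": ["s3:GetObject", "s3:PutObject", "s3:ListBucket"],
             "Resource": arns(output_bucket)},
        ],
    }


# Usage (hypothetical names; not executed here):
#   import json, boto3
#   iam = boto3.client("iam")
#   iam.create_role(RoleName="NovaCanvasFineTuneRole",
#                   AssumeRolePolicyDocument=json.dumps(bedrock_trust_policy("111122223333")))
#   iam.put_role_policy(RoleName="NovaCanvasFineTuneRole", PolicyName="S3Access",
#                       PolicyDocument=json.dumps(s3_access_policy("my-train-bucket", "my-output-bucket")))
```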

Create a fine-tuning job on the Amazon Bedrock console

Let’s start by creating an Amazon Nova Canvas fine-tuning job on the Amazon Bedrock console:

- On the Amazon Bedrock console, in the navigation pane, choose Custom models under Foundation models.

- Choose Customize model and then Create Fine-tuning job.

- On the Create Fine-tuning job details page, choose the model you want to customize and enter a name for the fine-tuned model.

- In the Job configuration section, enter a name for the job and optionally add tags to associate with it.

- In the Input data section, enter the Amazon S3 location of the training dataset file.

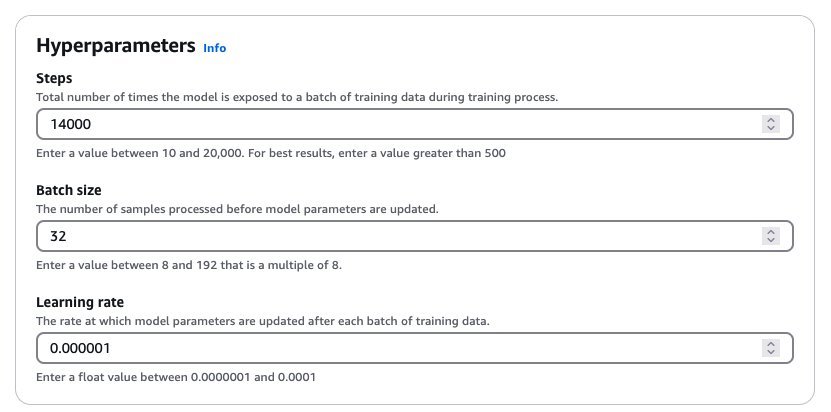

- In the Hyperparameters section, enter values for hyperparameters, as shown in the following screenshot.

- In the Output data section, enter the Amazon S3 location where Amazon Bedrock should save the output of the job.

- Choose Fine-tune model job to begin the fine-tuning process.

This hyperparameter combination yielded good results during our experimentation. In general, increasing the learning rate makes the model train more aggressively, which presents a trade-off: we might achieve character consistency more quickly, but it might reduce overall image quality. We recommend a systematic approach to adjusting hyperparameters. Start with the suggested batch size and learning rate, and try increasing or decreasing the number of training steps first. If the model still struggles to learn your dataset at 20,000 steps (the maximum allowed in Amazon Bedrock), we suggest either increasing the batch size or adjusting the learning rate upward. These adjustments, though subtle, can make a significant difference in the model’s performance. For more details about the hyperparameters, refer to Hyperparameters for Creative Content Generation models.
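The tuning order described above can be written down as a small helper. This is a hypothetical sketch: the hyperparameter keys (stepCount, batchSize, learningRate) are assumed names, the step increment and multipliers are illustrative rather than recommended values, and it only covers the case where the model has not yet learned the characters, so it only ever increases the step count. Judging whether the characters have been learned remains a human call made by inspecting generated images; check every proposal against the allowed ranges in the Bedrock hyperparameter documentation.

```python
from typing import Dict, Union

Number = Union[int, float]
MAX_STEPS = 20_000  # maximum step count stated in this post


def next_hyperparameters(current: Dict[str, Number], learned_characters: bool,
                         prefer: str = "batch", step_increment: int = 2_000,
                         batch_multiplier: int = 2, lr_multiplier: float = 1.5) -> Dict[str, Number]:
    """Propose the next configuration to try: raise the step count first and,
    only once it reaches the maximum, raise the batch size (prefer="batch")
    or the learning rate (prefer="lr")."""
    proposal = dict(current)
    if learned_characters:
        return proposal  # keep a configuration that already works
    if current["stepCount"] < MAX_STEPS:
        proposal["stepCount"] = min(current["stepCount"] + step_increment, MAX_STEPS)
    elif prefer == "batch":
        proposal["batchSize"] = current["batchSize"] * batch_multiplier
    elif prefer == "lr":
        # Higher learning rates train more aggressively and may cost image quality.
        proposal["learningRate"] = current["learningRate"] * lr_multiplier
    else:
        raise ValueError("prefer must be 'batch' or 'lr'")
    return proposal
```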

Create a fine-tuning job using the Python SDK

The following Python code snippet creates the same fine-tuning job using the create_model_customization_job API:
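A minimal sketch of that call with Boto3, not the authors' notebook code. The client is passed in so the function can be exercised without AWS credentials. The default base model ID, role ARN, and S3 URIs are placeholders you would replace, and the hyperparameters are left to the caller rather than filled in with the values from the screenshot.

```python
from typing import Any, Mapping, Union


def start_canvas_fine_tuning(bedrock_client: Any, *, job_name: str, custom_model_name: str,
                             role_arn: str, training_s3_uri: str, output_s3_uri: str,
                             hyperparameters: Mapping[str, Union[str, int, float]],
                             base_model_id: str = "amazon.nova-canvas-v1:0") -> str:
    """Submit an Amazon Bedrock fine-tuning job and return its job ARN."""
    response = bedrock_client.create_model_customization_job(
        jobName=job_name,
        customModelName=custom_model_name,
        roleArn=role_arn,
        baseModelIdentifier=base_model_id,
        customizationType="FINE_TUNING",
        trainingDataConfig={"s3Uri": training_s3_uri},
        outputDataConfig={"s3Uri": output_s3_uri},
        # The API takes hyperparameter values as strings. Pass strings yourself if
        # you need a specific format (str(1e-05) yields scientific notation).
        hyperParameters={key: value if isinstance(value, str) else str(value)
                         for key, value in hyperparameters.items()},
    )
    return response["jobArn"]


# Usage (placeholders; not executed here):
#   import boto3
#   bedrock = boto3.client("bedrock")
#   job_arn = start_canvas_fine_tuning(
#       bedrock, job_name="picchu-canvas-ft", custom_model_name="picchu-canvas",
#       role_arn="arn:aws:iam::111122223333:role/NovaCanvasFineTuneRole",
#       training_s3_uri="s3://my-bucket/train/train.jsonl",
#       output_s3_uri="s3://my-bucket/output/",
#       hyperparameters={...},  # your own values
#   )
```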

When the job is complete, you can retrieve the new customModelARN using the following code:
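A hedged polling sketch using get_model_customization_job: once the job status is Completed, the custom model ARN is read from the response's outputModelArn field. The default polling interval is an arbitrary choice, and the sleep function is injectable so the loop can be tested without waiting.

```python
import time
from typing import Any, Callable


def wait_for_custom_model(bedrock_client: Any, job_arn: str, poll_seconds: float = 300.0,
                          sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll a Bedrock customization job until it finishes and return the
    custom model ARN; raise if the job fails or is stopped."""
    while True:
        job = bedrock_client.get_model_customization_job(jobIdentifier=job_arn)
        status = job["status"]
        if status == "Completed":
            return job["outputModelArn"]
        if status in ("Failed", "Stopped"):
            raise RuntimeError(f"Job {job_arn} ended with status {status}: {job.get('failureMessage', '')}")
        sleep(poll_seconds)  # "InProgress" or "Stopping": check again later
```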

Deploy the fine-tuned model

With the preceding hyperparameter configuration, this fine-tuning job might take up to 12 hours to complete. When it’s complete, you should see a new model in the custom models list. You can then create provisioned throughput to host the model. For more details on provisioned throughput and different commitment plans, see Increase model invocation capacity with Provisioned Throughput in Amazon Bedrock.

Deploy the model on the Amazon Bedrock console

To deploy the model from the Amazon Bedrock console, complete the following steps:

- On the Amazon Bedrock console, choose Custom models under Foundation models in the navigation pane.

- Select the new custom model and choose Purchase provisioned throughput.

- In the Provisioned Throughput details section, enter a name for the provisioned throughput.

- Under Select model, choose the custom model you just created.

- Then specify the commitment term and model units.

After you purchase provisioned throughput, a new model Amazon Resource Name (ARN) is created. You can invoke this ARN when the provisioned throughput is in service.

Deploy the model using the Python SDK

The following Python code snippet creates provisioned throughput using the create_provisioned_model_throughput API:
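A minimal sketch, assuming a Boto3 bedrock client is passed in: it purchases provisioned throughput for the custom model and then waits until get_provisioned_model_throughput reports InService. Leaving commitment_duration unset omits the optional commitmentDuration parameter; "OneMonth" and "SixMonths" are the commitment values the API accepts. The throughput name and model unit count are placeholders.

```python
import time
from typing import Any, Callable, Dict, Optional


def provision_custom_model(bedrock_client: Any, custom_model_arn: str, name: str,
                           model_units: int = 1, commitment_duration: Optional[str] = None,
                           poll_seconds: float = 60.0,
                           sleep: Callable[[float], None] = time.sleep) -> str:
    """Purchase provisioned throughput for a custom model and wait until it is
    InService. Returns the provisioned model ARN to use as modelId at inference."""
    kwargs: Dict[str, Any] = {"modelUnits": model_units, "provisionedModelName": name,
                              "modelId": custom_model_arn}
    if commitment_duration is not None:
        kwargs["commitmentDuration"] = commitment_duration  # "OneMonth" or "SixMonths"
    arn = bedrock_client.create_provisioned_model_throughput(**kwargs)["provisionedModelArn"]
    while True:
        status = bedrock_client.get_provisioned_model_throughput(provisionedModelId=arn)["status"]
        if status == "InService":
            return arn
        if status == "Failed":
            raise RuntimeError(f"Provisioned throughput {arn} failed")
        sleep(poll_seconds)  # "Creating" or "Updating": check again later
```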

Test the fine-tuned model

When the provisioned throughput is live, we can use the following code snippet to test the custom model and experiment with generating some new images for a sequel to Picchu:
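A hedged sketch of such a test call through the Bedrock Runtime invoke_model API, using the text-to-image request shape that Amazon Nova Canvas accepts (taskType TEXT_IMAGE). The width, height, cfgScale, and seed defaults are illustrative, not the settings behind the images in this post, and provisioned_model_arn stands for the ARN created when you purchased provisioned throughput.

```python
import base64
import json
from typing import Any, List


def build_canvas_body(prompt: str, width: int = 1024, height: int = 1024,
                      cfg_scale: float = 6.5, seed: int = 0, number_of_images: int = 1) -> str:
    """JSON request body for an Amazon Nova Canvas text-to-image call."""
    return json.dumps({
        "taskType": "TEXT_IMAGE",
        "textToImageParams": {"text": prompt},
        "imageGenerationConfig": {
            "numberOfImages": number_of_images,
            "width": width,
            "height": height,
            "cfgScale": cfg_scale,  # how strongly the image follows the prompt
            "seed": seed,           # fix the seed to compare runs
        },
    })


def generate_images(runtime_client: Any, provisioned_model_arn: str, prompt: str, **config: Any) -> List[bytes]:
    """Invoke the provisioned custom model and return decoded image bytes."""
    response = runtime_client.invoke_model(
        modelId=provisioned_model_arn,
        body=build_canvas_body(prompt, **config),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    return [base64.b64decode(image) for image in payload["images"]]


# Usage (not executed here):
#   runtime = boto3.client("bedrock-runtime")
#   prompt = "Mayu standing proudly at the entrance of a simple school building."
#   for i, png in enumerate(generate_images(runtime, provisioned_model_arn, prompt)):
#       with open(f"sequel_{i}.png", "wb") as f:
#           f.write(png)
```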

Prompts for the three generated sequel images:

- Mayu’s face shows a mix of nervousness and determination. Mommy kneels beside her, gently holding her. A landscape is visible in the background.

- A steep cliff face with a long wooden ladder extending downwards. Halfway down the ladder is Mayu with a determined expression on her face. Mayu’s small hands grip the sides of the ladder tightly as she carefully places her feet on each rung. The surrounding environment shows a rugged, mountainous landscape.

- Mayu standing proudly at the entrance of a simple school building. Her face beams with a wide smile, expressing pride and accomplishment.

Clean up

To avoid incurring AWS charges after you are done testing, complete the cleanup steps in picchu-finetuning.ipynb and delete the following resources:

- Amazon SageMaker Studio domain

- Fine-tuned Amazon Nova Canvas model and its provisioned throughput
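The Amazon Bedrock part of this cleanup can be sketched as two Boto3 calls. Deleting the provisioned throughput before the custom model is a cautious ordering assumption, since the throughput references the model. Deleting the SageMaker Studio domain is not covered here.

```python
from typing import Any


def delete_bedrock_resources(bedrock_client: Any, provisioned_model_arn: str, custom_model_arn: str) -> None:
    """Delete the provisioned throughput, then the custom model it serves."""
    # Provisioned throughput is billed while it exists, so remove it first.
    bedrock_client.delete_provisioned_model_throughput(provisionedModelId=provisioned_model_arn)
    bedrock_client.delete_custom_model(modelIdentifier=custom_model_arn)


# Usage (not executed here):
#   delete_bedrock_resources(boto3.client("bedrock"), provisioned_model_arn, custom_model_arn)
```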

Conclusion

In this post, we built on Part 1 and demonstrated how to elevate character and style consistency in storyboarding by fine-tuning Amazon Nova Canvas in Amazon Bedrock. Our comprehensive workflow combines automated video processing, intelligent character extraction using Amazon Rekognition, and precise model customization using Amazon Bedrock to create a solution that maintains visual fidelity and dramatically accelerates the storyboarding process. By fine-tuning the Amazon Nova Canvas model on specific characters and styles, we’ve achieved a level of consistency that surpasses standard prompt engineering, so creative teams can produce high-quality storyboards in hours rather than weeks. Start experimenting with Amazon Nova Canvas fine-tuning today to elevate your own storytelling with better character and style consistency.

About the authors

Dr. Achin Jain is a Senior Applied Scientist at Amazon AGI, where he works on building multi-modal foundation models. He brings more than 10 years of combined industry and academic research experience. He has led the development of several modules for Amazon Nova Canvas and Amazon Titan Image Generator, including supervised fine-tuning (SFT), model customization, instant customization, and guidance with color palette.


James Wu is a Senior AI/ML Specialist Solution Architect at AWS, helping customers design and build AI/ML solutions. James’s work covers a wide range of ML use cases, with a primary interest in computer vision, deep learning, and scaling ML across the enterprise. Prior to joining AWS, James was an architect, developer, and technology leader for over 10 years, including 6 years in engineering and 4 years in marketing & advertising industries.


Randy Ridgley is a Principal Solutions Architect focused on real-time analytics and AI. With expertise in designing data lakes and pipelines, Randy helps organizations transform diverse data streams into actionable insights. He specializes in IoT solutions, analytics, and infrastructure-as-code implementations. As an open-source contributor and technical leader, Randy provides deep technical knowledge to deliver scalable data solutions across enterprise environments.
