Ethics & Policy

10 Creepiest Opening Lines from Gothic Horror Novels

Gothic horror novels have long captured readers’ imaginations with their uncanny atmospheres, dark settings, and haunting tales. At the heart of their power to enthral are their engaging opening lines that immediately pull readers into unsettling worlds of suspense and dread. From cursed mansions to supernatural entities, these beginnings promise tales of horror that linger in the mind long after the final page. Here, we explore ten of the creepiest and most iconic opening lines from Gothic horror novels, each a portal to chilling tales of terror and the macabre.

1. “You will rejoice to hear that no disaster has accompanied the commencement of an enterprise which you have regarded with such evil forebodings.” –Frankenstein, by Mary Shelley

Frankenstein by Mary Shelley, first published in 1818, is a cornerstone of Gothic and science fiction literature. The novel follows Victor Frankenstein, an ambitious scientist who creates a human-like creature from reanimated body parts, only to face dire consequences. Told through letters and narratives, it explores themes of scientific ethics, monstrosity, and the dangers of playing god.

Set in late 18th-century Europe, the novel’s evocative settings, from the icy Swiss Alps to the frozen Arctic, enhance its eerie tone. Frankenstein remains relevant today: it reflects the scientific and cultural anxieties of its own time while raising enduring questions about unchecked ambition and society’s rejection of the “other.”

2. “Jonathan Harker’s Journal

3 May. Bistritz.—Left Munich at 8:35 P.M., on 1st May, arriving at Vienna early next morning; should have arrived at 6:46, but the train was an hour late.”- Dracula, by Bram Stoker

When Jonathan Harker travels to Transylvania to assist Count Dracula with purchasing a London house, he uncovers horrifying truths about his client. Soon, strange events unfold in England: a shipwreck off Whitby’s coast, a woman with mysterious neck punctures, and an asylum inmate raving about the ‘Master’s’ arrival.

In Dracula, Bram Stoker masterfully creates a horror classic, exploring the nightmare world of vampires and delving into Victorian sexuality and desire.

3. “Dead!” a woman screamed. “It’s the dead!”- Something Strange and Deadly, by Susan Dennard

Eleanor Fitt has enough problems: her brother is missing, her family is struggling, and her mother is desperate to marry her off. But the biggest threat is what she’s just read—the Dead are rising.

When a zombie delivers a letter from her brother, Eleanor learns he has been taken by whoever controls the army of the Dead. To save him, she must team up with the Spirit-Hunters, the city’s protectors against the supernatural. As the danger grows and her connection to the infuriating yet captivating Daniel deepens, Eleanor realises that both her reputation and her life are at stake.

4. “It was a dark and stormy night; the rain fell in torrents…” – Paul Clifford by Edward Bulwer-Lytton

First published in 1830, Paul Clifford follows a young man raised in London’s criminal underworld who becomes a highwayman. Its opening sentence goes on to describe a violent gust of wind sweeping up the streets of London and “the scanty flame of the lamps that struggled against the darkness.” It has become one of the most famous, and most parodied, openings in English fiction.

5. “The world had teeth and it could bite you with them anytime it wanted.”- The Girl Who Loved Tom Gordon by Stephen King

On a six-mile hike along the Appalachian Trail, nine-year-old Trisha McFarland grows tired of her brother Pete’s bickering with their divorced mother. When she wanders off and attempts a shortcut, Trisha becomes lost in a dangerous wilderness.

As night falls, Trisha relies on her ingenuity, courage, and faith to face mounting fears. For comfort, she tunes her Walkman to Boston Red Sox games, drawing strength from her hero, pitcher Tom Gordon. When the radio fades, Trisha imagines Gordon protecting her from a terrifying enemy that has left a trail of slaughtered animals and mangled trees in the dark woods.

6. “They’re all dead now.”- The Ruins by Scott Smith

A group of friends stranded in the Mexican jungle discover an unimaginable, creeping terror. Two young couples are enjoying a laid-back holiday in Mexico, complete with sun-filled days and wild evenings, and are making friends with other travellers. When the brother of one of their new friends goes missing, they set out into the jungle to find him. What begins as a pleasant day trip gradually turns into a nightmare when they discover an ancient ruin and the sinister presence that lurks there.

7. “The terror, which would not end for another twenty-eight years—if it ever did end—began, so far as I know or can tell, with a boat made from a sheet of newspaper floating down a gutter swollen with rain.”- It by Stephen King

It, a novel by Stephen King published in 1986, is a quintessential horror story exploring childhood terrors, trauma, and their lasting effects. Set in Derry, Maine, the story alternates between 1958 and 1985. A group of seven 11-year-olds, led by Bill Denbrough, battles a shape-shifting monster called “It,” which reappears every 27 years. Taking terrifying forms, most notably Pennywise the Clown, It commits horrific acts, including the murder of Bill’s younger brother, Georgie.

Believing they’ve destroyed It, the children vow to reunite if it returns. In 1985, the now-adults face the monster again. Written in King’s conversational style, the novel intertwines timelines and life stories, blending fantasy with visceral horror. It remains one of King’s most enduring works.

8. “Nobody was really surprised when it happened, not really, not at the subconscious level where savage things grow.”- Carrie by Stephen King

Carrie White, a misfit high school girl, discovers she has telekinetic powers. Repressed by her domineering, ultra-religious mother and tormented by cruel classmates, Carrie struggles to fit in, culminating in a dramatic confrontation on prom night.

An outcast, Carrie never fit in—her awkwardness made her a target for teenage cruelty. Her only solace was a private “game,” moving small objects like marbles or candles with her mind. Yet, her mother condemned her power as sinful, forcing Carrie to suppress it.

A single act of kindness gave Carrie hope, but a moment of vicious cruelty at the prom turned her secret ability into a weapon of destruction. With her powers unleashed, horror consumed the night as doors locked and flames fell.

9. “No live organism can continue for long to exist sanely under conditions of absolute reality; even larks and katydids are supposed, by some, to dream.”- The Haunting of Hill House by Shirley Jackson

It is the tale of four seekers who arrive at Hill House, a notoriously unfriendly mansion. They are Dr. Montague, an occult scholar seeking concrete proof of a “haunting”; Theodora, his lighthearted assistant; Eleanor, a lonely, fragile young woman well acquainted with poltergeists; and Luke, the future heir of Hill House. At first, their stay seems likely to be merely a frightening encounter with inexplicable occurrences. But Hill House is gathering its powers, and soon it will choose one of them to make its own.

10. “Last night I dreamt I went to Manderley again.”- Rebecca by Daphne du Maurier

At the start of the book, in Monte Carlo, our heroine is enchanted by the handsome widower Maxim de Winter and his unexpected marriage proposal. An orphan working as a paid companion to a wealthy American woman, she can hardly believe her good fortune. Only when they arrive at his enormous country estate, Manderley, does she realise how deeply his late wife, Rebecca, still shapes their lives. Rebecca's memory lingers as a persistent, malevolent presence that threatens their marriage from beyond the grave.

To conclude, the opening lines of Gothic horror novels are masterful in their ability to evoke unease, curiosity, and anticipation. They set the tone for unforgettable journeys through shadowed corridors, haunted landscapes, and the darker recesses of the human psyche. Whether it’s the foreboding start of Frankenstein, the enigmatic allure of Rebecca, or the sinister promise of It, these lines remind us why Gothic horror continues to captivate and terrify readers across generations. Let these chilling introductions serve as an invitation to explore the timeless dread that lies within their pages.

AI’s intersection with Sharia sciences: opportunities and ethics

The symposium was held on September 2-3, 2025, at the forum’s headquarters. It brought together a select group of researchers and specialists in jurisprudence, law, and scientific research.

AI between opportunities and challenges

The symposium highlighted the role of AI as a strategic tool that holds both promising opportunities and profound challenges, calling for conscious thinking from an authentic Islamic perspective that balances technological development with the preservation of religious and human values.

AI in Sharia sciences

The first day of the symposium featured two scientific sessions. In the first, Professor Fatima Ali Al Hattawi, a doctoral researcher, discussed artificial intelligence and its applications in Sharia sciences.

AI in law

The second session featured Professor Abdulaziz Saleh Saeed, faculty member at the Mohammed bin Zayed University for Humanities, who presented a research paper titled “The Impact of Artificial Intelligence on Law and Judicial Systems.”

Prof. Abdulaziz explained that these technologies represent a true revolution in the development of judicial systems, whether in terms of accelerating litigation, enhancing the accuracy of judicial decisions, or even supporting lawyers and researchers in accessing precedents and rulings.

He added that AI-enabled transformations are rapidly shaping legal practices and procedures, and that these tools offer tremendous potential for seizing opportunities and addressing challenges. He also addressed the importance of regulatory and legislative frameworks for protecting the human and legal values associated with justice, emphasising that the future requires developing cadres capable of reconciling technology and law.

AI and Fatwa

The second day, on Wednesday morning, featured two further scientific sessions of the symposium, “Artificial Intelligence and Its Impact on Sharia Sciences.” They were attended by a select group of researchers, academics, and others interested in modern technologies and their use in serving Sharia sciences.

In the first session, Dr Omar Al Jumaili presented a paper titled “The Use of Artificial Intelligence in Jurisprudence and Fatwa.” Dr Al Jumaili is an expert and preacher at the Islamic Affairs and Charitable Activities Department (IACAD) in Dubai. He reviewed modern applications of these technologies in the field of fatwa. He described their role in speeding access to jurisprudential sources and facilitating accurate legal research. He also emphasised the importance of establishing legal controls that preserve the reliability of fatwas and protect them from the risks of total reliance on smart systems.

Ethics of using AI

In the second session, Eng. Maher Mohammed Al Makhamreh addressed “The Ethics of Using Artificial Intelligence in Scientific Research.” Eng. Al Makhamreh is Head of the Training and Consulting Department at the French College. In his paper, he emphasised that technological developments open up broad horizons for researchers but require a firm commitment to ethical values to ensure the integrity of research. He reviewed the most prominent challenges facing academics amid the increasing reliance on algorithms. He also pointed to future opportunities for employing artificial intelligence to support Sharia studies without compromising their objectivity and credibility.

It is worth noting that the symposium witnessed broad engagement from participants, who appreciated the solid scientific presentations of the lecturers and expressed great interest in the future prospects offered by artificial intelligence in developing the fields of jurisprudence and scientific research.

What does it mean to build AI responsibly? Principles, practices and accountability

Dr. Foteini Agrafioti is the senior vice-president for data and artificial intelligence and chief science officer at Royal Bank of Canada.

Artificial intelligence continues to be one of the most transformative technologies affecting the world today. It’s hard to imagine an aspect of our lives that is not impacted by AI in some way. From assisting our web searches to curating playlists and providing recommendations or warning us when a payment is due, it seems not a day goes by without assistance from an AI insight or prediction.

It is also true that AI technology comes with risks, a fact that has been well documented and is widely understood by AI developers. The race to use generative AI in business may pose a risk to safe adoption. As Canadians incorporate these tools into their personal lives, it has never been more important for organizations to examine their AI practices and ensure they are ethical, fair and beneficial to society. In other words, to practice AI responsibly.

So, in an era where AI is dominating, where do we draw the line?

When everyone is pressing to adopt AI, my recommendation is to do so, and swiftly, but to start by deciding what you are NOT going to use it for. What is your no-go zone for your employees and clients? Devising principles should be a critical component of any organization’s AI ecosystem. That means an organized and measured approach to AI that balances innovation with risk mitigation, aligns with human values and focuses on well-being for all. This is particularly pronounced in highly regulated sectors, where client trust is earned not just through results but also through transparency and accountability.

Encouraging this conversation early may help inform important decisions on your approach. At RBC we had the opportunity to discuss these concepts early in our journey, when we hired AI scientists and academics in the bank and were faced with the opportunity to push boundaries in the way we interact with our clients. A key decision that we made a decade ago was that we wouldn’t prioritize speed over safety. This fundamental principle has shaped our approach and success to date. We have high standards for bias testing and model validation and an expectation that we fully understand every AI that is used in our business.

This cannot just be a philosophical conversation or debate.

The plan to build AI according to a company’s ethos needs to be pragmatic, actionable and available for education and training to all employees. Organizations of all sizes and in all sectors should develop a framework of responsible AI principles to ensure the technology minimizes harm and will be used to benefit clients and the broader society. For large companies, this can manifest in how they build their models, but even small businesses can establish ground rules for how employees are permitted to use, for example, off-the-shelf GenAI tools.

RBC aims to be the leader in responsible AI for financial services, and we continue to innovate while also respecting our clients, employees, partners, inclusion and human integrity. The two can go hand-in-hand when using AI responsibly is a corporate priority. Our use of AI is underscored by four pillars: accountability; fairness; privacy and security; and transparency.

From senior leaders to the data analysts working on the projects, we put clients at the centre of our AI work by asking the right strategic questions to ensure it aligns with RBC’s values. When developing AI products or integrating with a third-party vendor, organizations should consider adopting policies and guidelines for managing third-party risk. These can include scheduled audits, in-depth due diligence, product testing, user feedback, validation and monitoring, to ensure AI systems are developed and deployed in a responsible and transparent manner.

Employee training is an equally important but often overlooked element of responsible AI, ensuring employees know not only how to prompt effectively, but also how to supervise the outputs. This includes guidance on the use of external tools for work-related products, the kinds of information that can be uploaded or included in prompts and use cases that have the most to gain from what AI can deliver safely.

At RBC, all leaders and executives who have access to GenAI tools complete training that addresses responsible AI considerations, governance and decision-making to enable informed oversight and guidance on responsible AI initiatives. Employees who are granted access to GenAI support tools need to take general training, which includes an introduction to responsible AI principles, concepts and ethical frameworks. AI models are subjected to thorough testing, validation and monitoring before they are approved for use. This is not a risk-averse culture. It’s a culture that honours the existing partnership it has with its clients.

This is not once and done. Ongoing governance to review and assess the principles remains important. RBC’s responsible AI working group continues to ensure the principles remain relevant, address business and stakeholder needs and drive linkages between the organization’s responsible AI principles, practices and processes.

Another approach is to partner with leaders including educational institutions and think tanks in the AI space to provide strategic opportunities to help drive responsible AI adoption. RBC recently partnered with MIT to join a newly formed Fintech Alliance, focused on addressing pressing matters around the ethical use of AI in financial services.

This type of comprehensive approach also helps us recruit AI talent from leading educational programs globally, bringing together divergent opinions and different work experience. The result is a diverse workforce and an environment where collaboration, learning and solutions thrive.

As modern AI systems advance, navigating platform ethics can be complex. Implementing a strong responsible AI framework, inclusive of principles and practices, will ensure challenges are addressed ethically and AI systems are adopted responsibly.

This column is part of Globe Careers’ Leadership Lab series, where executives and experts share their views and advice about the world of work. Find all Leadership Lab stories at tgam.ca/leadershiplab.

10 Steps for Safe AI Deployment

- Generative AI is a double-edged sword. It offers immense opportunities for growth and innovation. However, it also carries risks related to bias, security, and compliance.

- Proactive governance is not optional. A responsible Generative AI deployment checklist helps leaders minimize risks and maximize trust.

- The checklist for Generative AI compliance assessment covers everything, including data integrity, ethical alignment, human oversight, continuous monitoring, and more.

- The ROI of responsible AI goes beyond compliance. This leads to increased consumer trust, reduced long-term costs, and a strong competitive advantage.

- Partner with AI experts like Appinventiv to turn Gen AI principles into a practical, actionable framework for your business.

The AI revolution is here, with generative models like GPT and DALL·E unlocking immense potential. In the blink of an eye, generative AI has moved from the realm of science fiction to a staple of the modern workplace. It’s no longer a question of if you’ll use it, but how and, more importantly, how well.

The potential is astounding: Statista’s latest research projects that the generative AI market will grow from $66.89 billion in 2025 to $442.07 billion by 2031. The race is on, and companies are deploying these powerful models across nearly every operational process. Businesses use Gen AI to create content, automate workflows, write code, analyze complex data, redefine customer experiences, and more.
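Taken at face value, the two Statista figures imply a compound annual growth rate of roughly 37% over the six years from 2025 to 2031:

```latex
\text{CAGR} = \left(\frac{442.07}{66.89}\right)^{1/6} - 1 \approx 6.609^{1/6} - 1 \approx 0.370
```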

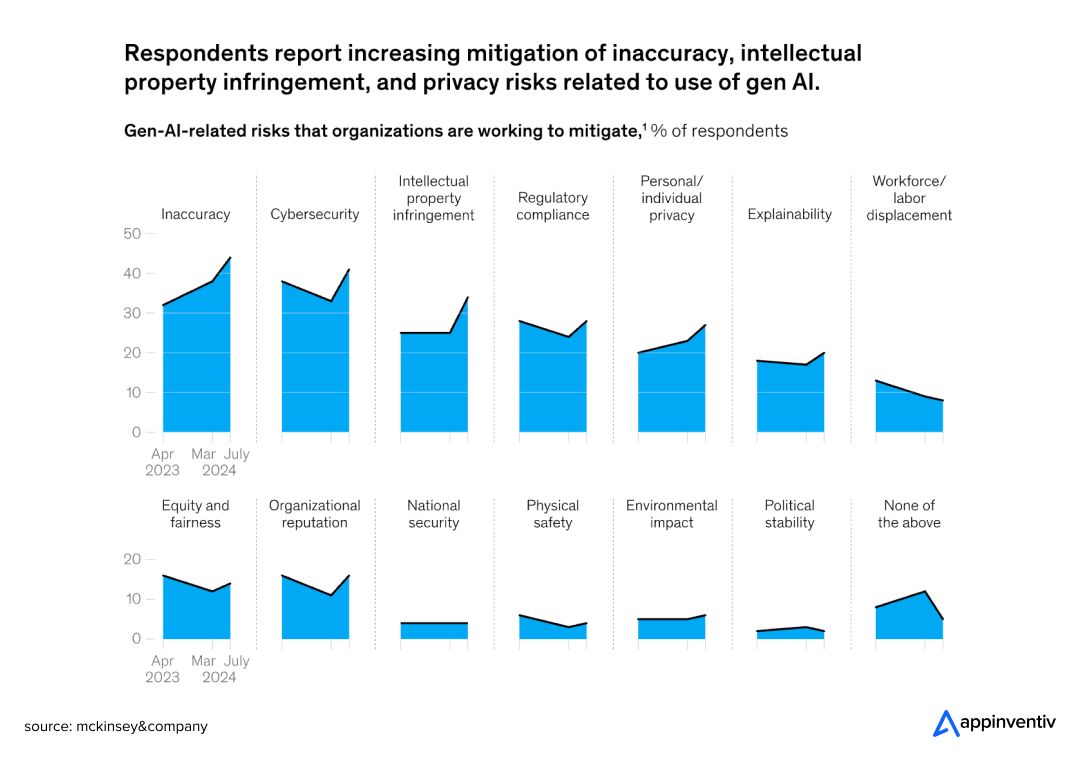

But as with any revolution, there’s a flip side. The same technology that can boost creativity can also introduce a host of unforeseen risks. These range from perpetuating biases to leaking sensitive data and producing “hallucinations,” outputs that are factually incorrect. The stakes are truly high: according to McKinsey’s research, 44% of organizations have already experienced at least one negative consequence from their use of generative AI.

As AI systems become integral to decision-making, the question arises: Are we ready to manage these gen AI risks effectively?

This is where the responsible AI checklist comes in. This blog covers the 10 critical AI governance questions every business leader must ask before deploying generative AI. These questions will help mitigate risks, build trust, and ensure that your AI deployment efforts align with legal, ethical, and operational standards.

Why Businesses Across Industries Prioritize Responsible AI Deployment

Before we dive into the questions, let’s be clear: responsible AI isn’t an afterthought; it’s a core component of your business strategy. Without a responsible governance framework, you risk more than technical failures: your brand image, your finances, and your very relationship with your customers are all on the line. Here is more on why it’s essential for leaders to prioritize responsible AI practices:

Mitigating Risk

Responsible AI is not about slowing down innovation. It’s about accelerating it safely. Without following Generative AI governance best practices, AI projects can lead to serious legal, financial, and reputational consequences.

Companies that rush into AI deployment often spend months cleaning up preventable messes. By contrast, companies that invest time upfront in responsible AI practices find that their AI systems actually work better and cause fewer operational headaches down the road.

Enhancing Operational Efficiency

By implementing responsible AI frameworks, businesses can make sure their AI systems are secure and aligned with business goals. This approach helps them avoid costly mistakes and ensures the reliability of AI-driven processes.

The operational benefits are real and measurable. Teams stop worrying about whether AI systems will create embarrassing incidents, customers develop trust in automated decisions, and leadership can focus on innovation instead of crisis management.

Staying Compliant

The EU AI Act entered into force in August 2024, and its obligations are being phased in over several years. Fines for the most serious violations can reach €35 million or 7% of global annual turnover, whichever is higher. In the US, agencies like the EEOC are actively investigating AI bias cases. One major financial institution recently paid $2.5 million for discriminatory lending algorithms. A healthcare company had to pull its diagnostic AI after privacy violations.

These are not isolated incidents. They are predictable outcomes when companies build and deploy AI models without a proper Generative AI impact assessment.

Upholding Ethical Standards

Beyond legal compliance, it is essential that AI systems operate in accordance with principles of fairness, transparency, and accountability. These ethical considerations are necessary to protect both businesses and their customers’ sensitive data.

Companies demonstrating responsible AI practices report higher customer trust scores and significantly better customer lifetime value. B2B procurement teams now evaluate Generative AI governance as standard vendor selection criteria.

Major technology companies such as Microsoft and Google, along with standards bodies like the US National Institute of Standards and Technology (NIST), are already weighing in on Generative AI risk management and responsible AI practices. Now it’s your turn. With that said, here is a Generative AI deployment checklist for CTOs:

The Responsible AI Checklist: 10 Generative AI Governance Questions Leaders Must Ask

So, are you ready to deploy Generative AI? That’s great. But before you do, work through these pre-deployment validation questions to make sure you’re building on a solid foundation, not quicksand. Here is a series of Generative AI risk assessment questions to ask your teams before, during, and after deploying a generative AI solution.

1. Data Integrity and Transparency

The Question: Do we know what data trained this AI, and is it free from bias, copyright issues, or inaccuracies?

Why It Matters: An AI model is only as good as its data. Most foundation models learn from a massive, and often messy, collection of data scraped from the internet. This can lead to the model making up facts, which we call “hallucinations,” or reproducing copyrighted material. It’s a huge legal and brand risk.

Best Practice: Don’t just take the vendor’s word for it. Demand thorough documentation of the training data. For any internal data you use, set clear sourcing policies and perform a detailed audit to check for quality and compliance.

2. Ethical Alignment

The Question: Does this AI system truly align with our company’s core values?

Why It Matters: This goes way beyond checking legal boxes. This question gets to the heart of your brand identity. Your AI doesn’t just process data; it becomes the digital face of your values. Every decision it makes, every recommendation it offers, represents your company.

Let’s say your company values fairness and equality. Suppose your new AI hiring tool starts to favor male candidates because of an undetected bias, even though no one designed it to. That’s a direct contradiction of your values. It’s a fast track to a PR crisis and a loss of employee morale.

Best Practice: Create an internal AI ethics charter. Form a cross-functional ethics board with representatives from legal, marketing, and product teams to review and approve AI projects.

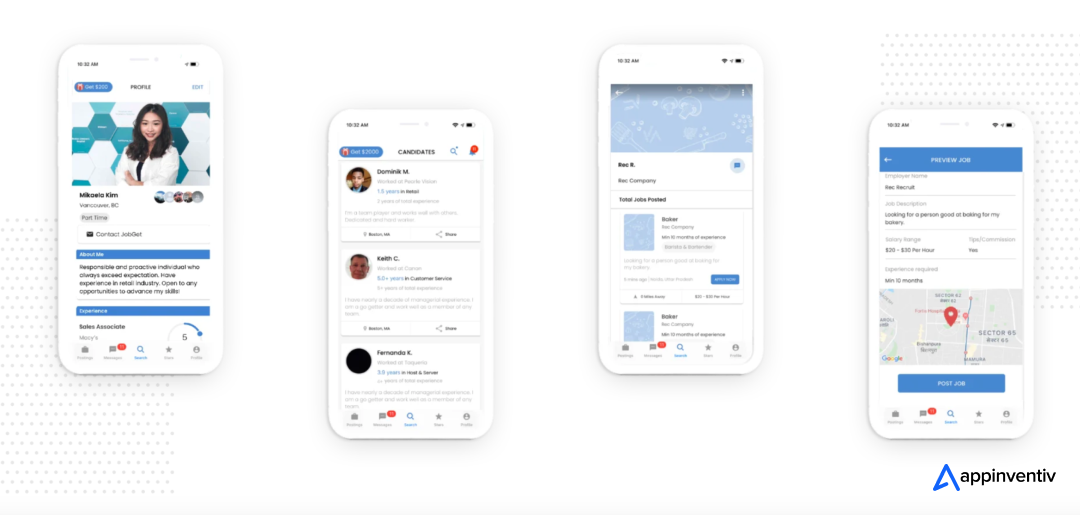

At Appinventiv, we have seen this principle in action. When developing JobGet, an AI-driven job search platform, we made sure its AI matching algorithms promote equal opportunity for everyone, in line with our clients’ diversity commitments. The platform is designed so that no candidate is unfairly disadvantaged by bias, creating a transparent and trustworthy experience for job seekers and employers alike.

3. Fairness and Bias Mitigation

The Question: How have we tested for and actively mitigated bias in the AI’s outputs?

Why It Matters: Bias in AI is not some abstract academic concern; it’s a business-killing reality that has already destroyed careers and companies. You might be aware of the facial recognition systems that couldn’t recognize darker skin tones and loan algorithms that treated identical applications differently based on zip codes that correlated with race.

Here’s what keeps business leaders awake at night: your AI might be discriminating right now, and you’d never know unless you specifically looked for it. These systems are brilliant at finding patterns, including patterns you never intended them to learn.

Best Practice: Test everything. Use datasets that actually represent the real world, not just convenient samples. Regularly audit your AI models’ performance across different groups, and be prepared to discover uncomfortable truths about your systems.
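As a minimal sketch of auditing performance across groups, the Python below compares selection rates between groups and computes a disparate impact ratio. It flags the model when that ratio falls below the “four-fifths” (0.8) rule of thumb from US employment-selection guidance. The group labels, sample data, and function names are hypothetical. A real audit would also compare error rates and use statistically meaningful sample sizes.

```python
from collections import defaultdict


def selection_rates(records):
    """Share of favourable outcomes per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the model produced the favourable outcome (e.g. shortlisted).
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact(rates):
    """Ratio of the lowest group selection rate to the highest (1.0 = parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no disparity to measure
    return min(rates.values()) / highest


def audit(records, threshold=0.8):
    """Flag the model when the ratio falls below the four-fifths rule of thumb."""
    rates = selection_rates(records)
    ratio = disparate_impact(rates)
    return {"rates": rates, "ratio": ratio, "flagged": ratio < threshold}


if __name__ == "__main__":
    # Hypothetical audit sample: (group label, was the candidate shortlisted?)
    sample = ([("A", True)] * 40 + [("A", False)] * 60
              + [("B", True)] * 25 + [("B", False)] * 75)
    print(audit(sample))
```

In the hypothetical sample, group B is shortlisted at 25% versus 40% for group A, a ratio of 0.625, so the audit flags the model. A flag is a prompt for closer investigation, not proof of discrimination.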

4. Security and Privacy

The Question: What safeguards do we have against data breaches, prompt injection attacks, and sensitive data leaks?

Why It Matters: Generative AI creates new security weak points. An employee might paste confidential customer information into a public model to get a quick summary. That data is then out of your control and open to misuse by cyber fraudsters. According to IBM’s Cost of a Data Breach Report 2024, the global average cost of a data breach rose 10%, from $4.45 million in 2023 to $4.88 million in 2024. These are real vulnerabilities that require a proactive defense.

Best Practice: Implement strict data minimization policies and use encryption. Train employees on the risks and set up secure, private environments for any sensitive work.
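Data minimization can start with something as simple as stripping obvious personal data from text before it is sent to an external model. The sketch below uses regular-expression redaction for illustration only. The patterns and the `minimize` name are hypothetical. The patterns are deliberately crude: they will miss some personal data and over-redact some harmless strings. They are no substitute for a vetted PII-detection tool.

```python
import re

# Deliberately crude, illustrative patterns (hypothetical). They will miss
# some personal data and over-redact other strings, e.g. some dates.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    # 13-16 digits, optionally separated by single spaces or hyphens
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    # an optional "+", a digit, 7+ digits/spaces/brackets/hyphens, a digit
    "PHONE": re.compile(r"\+?\d[\d ()-]{7,}\d"),
}


def minimize(text):
    """Replace likely personal data with typed placeholders before the text
    leaves the organization, e.g. as part of a prompt to an external model."""
    # Patterns run in insertion order, so card numbers are masked before the
    # broader phone pattern can claim them.
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```

For example, `minimize("Contact jane.doe@example.com or +1 (555) 010-2345, card 4111 1111 1111 1111.")` returns `"Contact [EMAIL] or [PHONE], card [CARD]."`.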

5. Explainability and Transparency

The Question: Can we explain why our AI made that decision without sounding like we are making it up?

Why It Matters: Many advanced AI models operate as “black boxes”; they give you answers but refuse to show their work. That might sound impressive in a science fiction movie, but it’s a nightmare for real business applications.

Imagine explaining to an angry customer why your “black box” algorithm rejected their loan application. Good luck with that conversation. In high-stakes situations like medical diagnosis or financial decisions, “because the AI said so” isn’t going to cut it with regulators, customers, or your own conscience.

Best Practice: Use Explainable AI (XAI) tools to visualize and interpret the model’s logic. Ensure a clear audit trail for every output and decision the AI makes.
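One way to make an audit trail tamper-evident is to chain each log entry to the hash of the entry before it, so any later edit or deletion breaks the chain. The class below is a hypothetical, in-memory sketch of that idea. A real deployment would write to durable, access-controlled storage and would log minimized prompts rather than raw customer data.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """Append-only log in which each entry stores the hash of the previous
    entry, so later edits or deletions break the chain and can be detected."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    def record(self, model_version, prompt, output, reviewer=None):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "prompt": prompt,
            "output": output,
            "reviewer": reviewer,
            "prev_hash": prev_hash,
        }
        payload = json.dumps(entry, sort_keys=True).encode("utf-8")
        entry["hash"] = hashlib.sha256(payload).hexdigest()
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash; return False if any entry was altered."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash:
                return False
            payload = json.dumps(body, sort_keys=True).encode("utf-8")
            if hashlib.sha256(payload).hexdigest() != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

Recording the model version alongside each output makes it possible to trace a disputed decision back to the exact model that produced it.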

6. Human Oversight

The Question: Where does the human-in-the-loop fit into our workflow, and what are the escalation points?

Why It Matters: While AI can automate tasks, human judgment remains essential for ensuring accuracy and ethical behavior. Automation bias can lead people to blindly trust an AI’s output without critical review. The risk is especially high in creative or analytical fields where the AI is seen as a “co-pilot.”

Best Practice: Define the specific moments when human expertise matters most. Train your staff to question AI outputs, not just approve them, and create clear escalation paths for when things get complicated or controversial.
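Escalation points can be written down as explicit routing rules rather than left to individual judgment. The sketch below assumes each AI output arrives with a confidence score and a high-stakes flag. Both fields, the `route` function, and the threshold values are illustrative assumptions, not recommended settings.

```python
from dataclasses import dataclass


@dataclass
class Draft:
    text: str
    confidence: float  # hypothetical model- or evaluator-supplied score in [0, 1]
    high_stakes: bool  # e.g. touches lending, health or legal decisions


def route(draft, auto_threshold=0.9, review_threshold=0.6):
    """Decide who handles an AI output. Thresholds are illustrative only."""
    if draft.high_stakes:
        return "human_review"  # a person always signs off on high-stakes calls
    if draft.confidence >= auto_threshold:
        return "auto_approve"
    if draft.confidence >= review_threshold:
        return "human_review"
    return "escalate"  # low confidence: send to a senior reviewer
```

Keeping the rules in code makes the escalation points explicit and auditable, and easy to tighten as the organization learns where the model struggles.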

7. Compliance and Regulation

The Question: Are we prepared to comply with current and emerging AI regulations, and is our governance framework adaptable?

Why It Matters: The legal landscape for AI is a moving target. What’s perfectly legal today might trigger massive fines tomorrow. A rigid governance framework will quickly become outdated. Leaders need to build a system that can evolve with new laws, whether they are focused on data privacy, intellectual property, or algorithmic transparency.

For example, the EU AI Act, passed by the European Parliament in March 2024, introduces strict requirements for high-risk AI systems. It also sets different timelines for various obligations, highlighting the need for a flexible governance model.

Best Practice: Assign a dedicated owner to track the regulatory horizon full-time. Conduct regular compliance audits and design your framework with flexibility in mind.

8. Accountability Framework

The Question: Who is ultimately accountable if the AI system causes harm?

Why It Matters: This is a question of legal and ethical ownership. If an AI system makes a critical error that harms a customer or leads to a business loss, who is responsible? Is it the developer, the product manager, the C-suite, or the model provider? Without a clear answer, you create a dangerous vacuum of responsibility.

Best Practice: Define and assign clear ownership roles for every AI system. Create a clear accountability framework that outlines who is responsible for the AI’s performance, maintenance, and impact.

9. Environmental and Resource Impact

The Question: How energy-intensive is our AI model, and are we considering sustainability?

Why It Matters: Training and running large AI models require massive amounts of power. For any company committed to ESG (Environmental, Social, and Governance) goals, ignoring this isn’t an option. It’s a reputational and financial risk that’s only going to grow.

Best Practice: Prioritize model optimization and use energy-efficient hardware. Consider green AI approaches and choose cloud providers that operate with sustainability in mind.

10. Continuous Monitoring and Risk Management

The Question: Do we have systems for ongoing monitoring, auditing, and retraining to manage evolving risks?

Why It Matters: The risks associated with AI don’t end at deployment. A model’s performance can “drift” over time as real-world data changes, and new vulnerabilities can emerge after launch. Without a system for Generative AI risk management and continuous monitoring, your AI system can become a liability without you even realizing it.

Best Practice: Implement AI lifecycle governance. Use automated tools to monitor for model drift, detect anomalies, and trigger alerts. Establish a schedule for regular retraining and auditing to ensure the model remains accurate and fair.
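Automated drift monitoring can start simply. Below is a minimal Python sketch, assuming you keep a reference sample of a numeric input feature from training time and compare it with recent production data using the Population Stability Index (PSI), which is one common drift metric among several. The function names and the 0.1 / 0.25 thresholds are illustrative assumptions (widely used rules of thumb), not part of any specific monitoring tool.

```python
import math
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a reference sample and a live sample.

    Bin edges come from the reference sample's range; a small epsilon keeps
    empty bins from producing log(0).
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range live values into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        eps = 1e-6
        return [max(c / len(values), eps) for c in counts]

    p, q = proportions(expected), proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def drift_alert(score: float, warn: float = 0.1, alert: float = 0.25) -> str:
    """Map a PSI score to a status; thresholds are assumed rules of thumb."""
    if score >= alert:
        return "alert"
    if score >= warn:
        return "warn"
    return "ok"


if __name__ == "__main__":
    reference = [i / 100 for i in range(100)]       # spread across [0, 0.99]
    shifted = [0.5 + i / 200 for i in range(100)]   # piled into the upper half
    print(drift_alert(psi(reference, reference)))   # ok
    print(drift_alert(psi(reference, shifted)))     # alert
```

In practice you would run a check like this on a schedule for each important feature (and on model outputs), and route "warn" and "alert" results to whoever owns the system's performance.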

Have other questions in mind? Don’t worry. We are here to address them and help you ensure responsible AI deployment. Contact us for a detailed AI assessment and roadmap.

Common Generative AI Compliance Pitfalls and How to Avoid Them

Now that you know the critical questions to ask before deploying AI, you are already ahead of most organizations. But even armed with the right questions, it’s still surprisingly easy to overlook the risks hiding behind the benefits. Many organizations dive in without a clear understanding of the regulatory minefield they are entering. Here are some of the most common generative AI compliance pitfalls you may encounter and practical advice on how to steer clear of them.

Pitfall 1: Ignoring Data Provenance and Bias

A major mistake is assuming your AI model is a neutral tool. In reality, it reflects the data it was trained on, and that data often contains biases and copyrighted material. This can lead your AI system to make unfair decisions or produce content that infringes on someone’s intellectual property, one of the most critical Generative AI legal risks.

- How to avoid it: Before you even think about deployment, perform a thorough Generative AI risk assessment. Use a Generative AI governance framework to vet the training data.

Pitfall 2: Lack of a Clear Accountability Framework

When an AI system makes a costly mistake, who takes the blame? In many companies, the answer is not clear. Without a defined responsible AI deployment framework, you end up with a vacuum of responsibility, which can lead to finger-pointing and chaos during a crisis.

- How to avoid it: Clearly define who is responsible for the AI’s performance, maintenance, and impact from the start. An executive guide to AI governance should include a section on assigning clear ownership for every stage of the AI lifecycle.

Pitfall 3: Failing to Keep Up with Evolving Regulations

The regulatory landscape for AI is changing incredibly fast. What was permissible last year might land you in legal trouble today. Companies that operate with a static compliance mindset are setting themselves up for fines and legal action.

- How to avoid it: Treat your compliance efforts as an ongoing process, not a one-time project. Implement robust Generative AI compliance protocols and conduct a regular AI governance audit to ensure you are staying ahead of new laws. An AI ethics checklist for leaders should always include a review of the latest regulations.

Pitfall 4: Neglecting Human Oversight and Transparency

It’s tempting to let an AI handle everything, but this can lead to what’s known as “automation bias,” where employees trust the AI’s output without question. Removing the human from the loop can violate core principles of Generative AI ethics for leaders and create significant liability.

- How to avoid it: Ensure your Generative AI impact assessment includes a plan for human oversight. You need to understand how to explain the AI’s decisions, especially for high-stakes applications. This is all part of mitigating LLM risks in enterprises.

Don’t Let AI Risks Catch You Off Guard

Let our AI experts conduct a comprehensive AI governance audit to ensure your generative AI model is secure and compliant.

How to Embed Responsible AI Throughout Generative AI Development

You can’t just check the “responsible AI” box once and call it done. Smart companies weave these practices into every stage of their AI projects, from the first brainstorming session to daily operations. Here is how they do it, from start to finish:

- Planning Stage: Before anyone touches code, figure out what could go wrong. Identify potential risks, understand the needs of different stakeholders, and detect ethical landmines early. It’s much easier to change direction when you’re still on paper than when you have a half-built system.

- Building Stage: Your developers should be asking “Is this fair?” just as often as “Does this work?” Build fairness testing into your regular coding routine. Test for bias like you’d test for bugs. Make security and privacy part of your standard development checklist, not something you bolt on later.

- Launch Stage: Deployment day isn’t when you stop paying attention; it’s when you start paying closer attention. Set up monitoring systems that actually work. Create audit schedules you’ll stick to. Document your decisions so you can explain them later when someone inevitably asks, “Why did you do it that way?”

- Daily Operations: AI systems change over time, whether you want them to or not. Data shifts, regulations change, and business needs evolve. Thus, you must schedule regular check-ups for your AI just like you would for any critical business system. Update policies when laws change. Retrain models when performance drifts.
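To make "test for bias like you'd test for bugs" concrete, here is a minimal sketch of a fairness check written as an ordinary pytest-style unit test. It assumes binary predictions and a single sensitive attribute, and it uses demographic parity (the gap in positive-prediction rates between groups), which is only one of several fairness metrics. The predictions, group labels, and 0.2 tolerance are hypothetical placeholders.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (1) for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def test_model_fairness():
    # Hypothetical model outputs on a held-out evaluation set.
    preds = [1, 0, 1, 1, 1, 0, 1, 1, 0]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b", "b"]
    gap = demographic_parity_gap(preds, groups)
    # 0.2 is an assumed tolerance; set yours with legal and domain experts.
    assert gap <= 0.2, f"selection-rate gap {gap:.2f} exceeds tolerance"


if __name__ == "__main__":
    test_model_fairness()
    print("fairness check passed")
```

Because it is a plain test function, a check like this can run in the same CI pipeline as the rest of the test suite, so a fairness regression blocks a release the same way a failing unit test would.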

The Lucrative ROI of Responsible AI Adoption

Following the Generative AI compliance checklist is not just an extra step; it’s an investment. The return on a proactive, responsible AI strategy is substantial: you reduce long-term costs from lawsuits and fines, and by building trust, you increase customer adoption and loyalty. Although responsible AI practices carry upfront costs, their long-term benefits far outweigh them. Here’s how:

- Risk Reduction: Prevent costly legal battles and fines from compliance failures or misuse of data.

- Brand Loyalty: Companies that build trust by adhering to ethical AI standards tend to enjoy higher customer retention.

- Operational Efficiency: AI systems aligned with business goals and ethical standards tend to be more reliable, secure, and cost-efficient.

- Competitive Advantage: Data-driven leaders leveraging responsible AI are better positioned to attract customers, investors, and talent in an increasingly ethics-conscious marketplace.

The Appinventiv Approach to Responsible AI: How We Turn Strategy Into Reality

At Appinventiv, we believe that innovation and responsibility go hand in hand. We don’t just help you accelerate your AI digital transformation; we stand by you at every step to make sure it’s done right.

We help our clients turn this responsible AI checklist from a list of daunting questions into an actionable, seamless process. Our team of 1600+ tech experts works through each of these AI governance questions with you and applies the best practices above throughout your AI projects. Our Gen AI services are designed to support you at every stage:

- AI Strategy & Consulting: Our Generative AI consulting services help you chart the right course. We work with you to define your AI objectives, identify potential risks, and build a roadmap for responsible deployment.

- Secure AI Development: Our Generative AI development services follow a “privacy-by-design” principle, ensuring your models are secure and your data is protected from day one.

- Generative AI Governance Frameworks: We help you design and implement custom AI data governance frameworks tailored to your specific business needs. This approach helps ensure compliance and accountability.

- Lifecycle Support: AI systems aren’t like traditional software that you can deploy and forget about. They need regular check-ups, performance reviews, and updates to stay trustworthy and effective over time. At Appinventiv, we monitor, audit, and update your AI systems for lasting performance and trust.

How We Helped a Global Retailer Implement Responsible AI

We worked with a leading retailer to develop an AI-powered recommendation engine. By embedding responsible AI principles from the beginning, we ensured the model was bias-free, secure, and explainable, thus enhancing customer trust and compliance with privacy regulations.

Ready to build your responsible AI framework? Contact our AI experts today and lead with confidence.

FAQs

Q. How is governing generative AI different from traditional software?

A. Traditional software follows predictable, deterministic rules, but generative AI does not. Its outputs can be unpredictable, creating unique challenges around hallucinations, bias, and copyright. Because these large models behave like a “black box,” you have to focus on auditing their outputs, not just their code.

Q. What are the most common risks overlooked when deploying generative AI?

A. Many leaders overlook intellectual property infringement, since models can accidentally replicate copyrighted material. Prompt injection attacks are also often missed, as they can manipulate the model’s behavior. Lastly, the significant environmental impact and long-term costs of training and running these large models are frequently ignored.

Q. How do we measure the effectiveness of our responsible AI practices?

A. You can measure effectiveness both quantitatively and qualitatively. Track the number of bias incidents you have detected and fixed. Monitor the time it takes to resolve security vulnerabilities. You can also get qualitative feedback by conducting surveys to gauge stakeholder trust in your AI systems.
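The quantitative side can live in a simple incident log. The sketch below assumes a hypothetical `Incident` record with a kind, an opened timestamp, and an optional resolved timestamp; it counts bias incidents detected versus fixed and computes the mean time to resolve security issues. The field names and incident categories are illustrative assumptions, not a standard schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Incident:
    kind: str                            # e.g. "bias" or "security" (hypothetical labels)
    opened: datetime
    resolved: Optional[datetime] = None  # None while the incident is still open


def bias_incident_counts(incidents: list[Incident]) -> tuple[int, int]:
    """Return (detected, fixed) counts for bias incidents."""
    bias = [i for i in incidents if i.kind == "bias"]
    return len(bias), sum(1 for i in bias if i.resolved is not None)


def mean_time_to_resolve(incidents: list[Incident], kind: str = "security") -> Optional[timedelta]:
    """Average resolution time for closed incidents of one kind, or None if none are closed."""
    durations = [i.resolved - i.opened for i in incidents
                 if i.kind == kind and i.resolved is not None]
    if not durations:
        return None
    return sum(durations, timedelta()) / len(durations)


if __name__ == "__main__":
    log = [
        Incident("bias", datetime(2024, 1, 1), datetime(2024, 1, 3)),
        Incident("bias", datetime(2024, 2, 1)),
        Incident("security", datetime(2024, 3, 1), datetime(2024, 3, 2)),
        Incident("security", datetime(2024, 3, 10), datetime(2024, 3, 13)),
    ]
    print(bias_incident_counts(log))   # (2, 1)
    print(mean_time_to_resolve(log))   # 2 days, 0:00:00
```

Tracking these numbers per quarter gives you a trend line to pair with the qualitative survey results.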

Q. What is a responsible AI checklist, and why do we need one?

A. A responsible AI checklist gives companies a structured way to assess an AI system’s risks and governance needs. It is essential for deploying AI systems safely, ethically, and legally.

Q. What are the six core principles of Generative AI impact assessment?

A. A Generative AI deployment checklist typically aligns with these six core principles:

- Fairness

- Reliability & Safety

- Privacy & Security

- Inclusiveness

- Transparency

- Accountability
